INTENTS

Intents are the machine learning component of conversation.

They work best when you train the system on the representative language of the end user. Representative can not only mean the end users language, but the medium used to capture that question.

It is important to understand that questions drive the answers/intents and not the other way around.

People often go in thinking you need to the define the intents first. Collecting the questions first allows you to see what your users will be asking and focus on actions your intents take.

Pre-defined intents are more prone to manufactured questions, and you find that not everyone asks the intents you think they will. So you waste time training on areas you don't need to.

Manufactured Questions

A manufactured question isn't always a bad thing. They can be handy to bootstrap your system to capture more questions. But you have to be mindful when creating them.

First, what you may believe is a common term or phrase might not be to the general public. They do not have domain experience. So avoid domain terms or phrases that would only be said if they have read the material.

Second, you will find that even if you go out of your way to try and vary things, you will still duplicate patterns.

Take this example:

how do I get a credit card?

Where do I get a credit card?

I want to get a credit card, how do I go about it?

When can I have a credit card?

The core term here credit card is not varied. They could say visa, master card, gold card, plastic or even just card. Having said that, intents can be quite intelligent with this. But when dealing with a large number of questions, it's better to vary.

Clustering

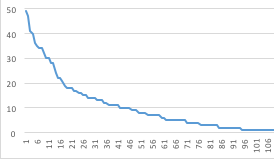

For a properly trained cluster you will need a minimum of 5 questions. Optimum is 10. If you collected questions rather than manufacturing you will find clusters that do not have enough to train. This is fine as long as you have a long tail with a similar pattern like this:

![example long tail graph]()

(Horizontal = Number of questions, Vertical = Cluster ID sorted by size)

If you find that there are too many unique questions (graph = flat line), then the intents component is not best at solving this.

The other thing to look for when clustering is clusters that are very close to each other. If your "intent" is give an answer, you can improve performance by just shaping your answer for both and merging the clusters. This can be a good way of enforcing weak clusters.

Testing

Once you do have everything clustered, remove a random 10%-20% (depend on number of question). Do not look at these questions. You use these as your blind test. Testing these will give you a reasonable expectation of how it will likely perform in the real world (assuming questions are not manufactured).

In earlier versions of WEA, we had what was called an experiment (k-fold validation). The system would remove a single question from training, and then ask it back. It would do this for all questions. The purpose was to test each cluster, and see what clusters are impacting others.

NLC/Conversation doesn't do this. To do it would take forever. You can use instead a monte-carlo cross fold. To do this, you take a random 10% from your train set, train on the 90% and then test on the 10% removed. You should do this a few times and average the results (at least 3 times).

In conjunction with your blind test they should fall relatively close to each other. If they are say outside of 5% range of each other, then you have a problem with your training. Use the monte-carlo results to examine why (not your blind set).

Another factor for this testing, is confidence. If you plan not to take action under a certain confidence level, then also use this in your testing to see how the end user experience will be.

ENTITIES

At the moment entities are pretty basic, but is likely to change. You would use entities where you have a narrow explicit scope of what it is you are trying to trap. There is no machine learning component to it at this time, so it can only detect what you tell it.

It also allows you to pass back a keyword your system can take action on. For example someone may say "cats and dogs" but you want to return @Weather:rain

REGEX

The last form of determining user intent is the conditional section. This can be pretty powerful as well, as you can create nested regular expressions. for example:

input.text.matches('fish|.*?\b[0-9]{4,6}\b.*?')

This example will trigger if they say just "fish" or a 4-6 digit number in their question.