Could someone please give me a mathematically correct explanation of why a multilayer perceptron (MLP) can solve the XOR problem?

My interpretation of the perceptron is as follows:

A perceptron with two inputs $x_1$ and $x_2$ has the following linear function

$$f(x_1, x_2) = w_1 x_1 + w_2 x_2 + b$$

and is hence able to solve linearly separable problems such as AND and OR.
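A minimal sketch of such a perceptron, assuming a step function that outputs 1 when its argument is $\geq 0$ and hand-picked (not learned) weights. The names `step`, `perceptron`, `AND_PARAMS` and `OR_PARAMS` are hypothetical, chosen only for illustration. It applies the step to $w_1 x_1 + w_2 x_2 + b$ and reproduces the AND and OR truth tables:

```python
def step(z):
    """Heaviside step: 1 if z >= 0, else 0 (the >= convention is an assumption)."""
    return 1 if z >= 0 else 0

def perceptron(x1, x2, w1, w2, b):
    """Single perceptron: the step applied to the linear function w1*x1 + w2*x2 + b."""
    return step(w1 * x1 + w2 * x2 + b)

# Hand-picked (w1, w2, b): the line x1 + x2 = 1.5 cuts (1, 1) off from the other
# corners (AND), the line x1 + x2 = 0.5 cuts (0, 0) off from the others (OR).
AND_PARAMS = (1.0, 1.0, -1.5)
OR_PARAMS = (1.0, 1.0, -0.5)

inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
for x1, x2 in inputs:
    print(x1, x2, "AND:", perceptron(x1, x2, *AND_PARAMS), "OR:", perceptron(x1, x2, *OR_PARAMS))
```

Any weights whose line puts the "1" corners on one side and the "0" corners on the other would work equally well; these are just easy to check by hand.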

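The way I think of it is that I take the two terms of $f$ separated by the + sign, $w_1 x_1$ and $w_2 x_2$, and set the function to zero, $w_1 x_1 + w_2 x_2 + b = 0$. Solving for $x_2$ (assuming $w_2 \neq 0$) I get

$$x_2 = -\frac{w_1}{w_2} x_1 - \frac{b}{w_2},$$

which is a line.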

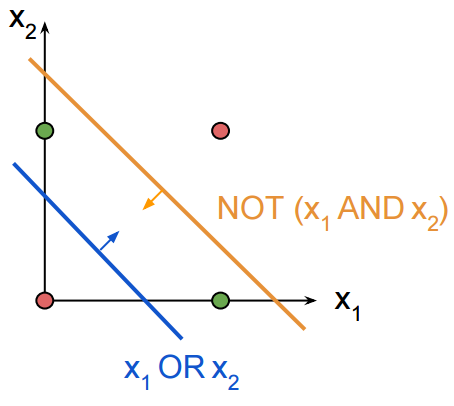

By applying the step function I get one of the clusters with respect to the input, which I interpret as one of the two half-planes separated by that line.
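The half-plane interpretation can be checked numerically with a small sketch. It assumes the AND weights $w_1 = w_2 = 1$, $b = -1.5$ purely as an example, and $w_2 > 0$ so that dividing by $w_2$ does not flip the inequality. Exact `fractions.Fraction` arithmetic is used so rounding cannot blur points lying exactly on the line $w_1 x_1 + w_2 x_2 + b = 0$. The helper names are hypothetical:

```python
import random
from fractions import Fraction

def step(z):
    """Heaviside step: 1 if z >= 0, else 0."""
    return 1 if z >= 0 else 0

# Illustrative weights (the AND perceptron); w2 > 0 is assumed so that
# dividing by w2 does not flip the direction of the inequality.
w1, w2, b = Fraction(1), Fraction(1), Fraction(-3, 2)

def boundary_x2(x1):
    """x2-coordinate of the decision line w1*x1 + w2*x2 + b = 0 at a given x1."""
    return -(w1 / w2) * x1 - b / w2

def count_mismatches(n_points, seed=0):
    """Count random points where the step output disagrees with 'on or above the line'."""
    rng = random.Random(seed)
    mismatches = 0
    for _ in range(n_points):
        # Random points on a grid of hundredths, kept exact with Fraction.
        x1 = Fraction(rng.randint(-200, 300), 100)
        x2 = Fraction(rng.randint(-200, 300), 100)
        output = step(w1 * x1 + w2 * x2 + b)
        on_or_above = x2 >= boundary_x2(x1)
        if output != int(on_or_above):
            mismatches += 1
    return mismatches

print(count_mismatches(1000))  # → 0
```

The count is zero because, for $w_2 > 0$, $w_1 x_1 + w_2 x_2 + b \geq 0$ is algebraically the same condition as $x_2 \geq -\frac{w_1}{w_2} x_1 - \frac{b}{w_2}$.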


Because the function of an MLP is still linear, how do I interpret this mathematically? And, more importantly: why is it able to solve the XOR problem when it is still linear? Is it because it's interpolating a polynomial?
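One way to make the question concrete is the following sketch, assuming hand-picked weights. It reuses the OR and AND perceptrons as two hidden units and compares a network that applies the step function in the hidden layer with one that leaves the hidden layer linear. The names `linear`, `mlp_xor`, `mlp_without_hidden_step` and the output weights `OUT_PARAMS` are hypothetical, chosen only for illustration:

```python
def step(z):
    """Heaviside step: 1 if z >= 0, else 0."""
    return 1 if z >= 0 else 0

def linear(x1, x2, w1, w2, b):
    """The perceptron's linear part: w1*x1 + w2*x2 + b."""
    return w1 * x1 + w2 * x2 + b

# Hand-picked (not learned) parameters; an illustrative assumption.
OR_PARAMS = (1.0, 1.0, -0.5)
AND_PARAMS = (1.0, 1.0, -1.5)
OUT_PARAMS = (1.0, -1.0, -0.5)  # fires when h_or = 1 and h_and = 0

def mlp_xor(x1, x2):
    """Two layers, with the step applied in the hidden layer and at the output."""
    h_or = step(linear(x1, x2, *OR_PARAMS))
    h_and = step(linear(x1, x2, *AND_PARAMS))
    return step(linear(h_or, h_and, *OUT_PARAMS))

def mlp_without_hidden_step(x1, x2):
    """Same weights, but the hidden layer is left linear (no step)."""
    h_or = linear(x1, x2, *OR_PARAMS)
    h_and = linear(x1, x2, *AND_PARAMS)
    # Substituting gives (x1 + x2 - 0.5) - (x1 + x2 - 1.5) - 0.5 = 0*x1 + 0*x2 + 0.5:
    # the two stacked linear layers collapse into a single linear function.
    return step(linear(h_or, h_and, *OUT_PARAMS))

inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
print([mlp_xor(x1, x2) for x1, x2 in inputs])                  # → [0, 1, 1, 0]
print([mlp_without_hidden_step(x1, x2) for x1, x2 in inputs])  # → [1, 1, 1, 1]
```

The two networks share every weight; the only difference is whether the step function sits between the layers.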