There are lots of optimisations you can make to this code before you start thinking about numba et. al. (I managed to get a 25x speed up on this code only by being smart with the algorithm's implementation)

Firstly, there's an error in your implementation of the Metropolis--Hastings algorithm. You need to keep every iteration of the scheme, regardless of whether your chain moves or not. That is, you need to remove posterior = posterior[np.where(posterior > 0)] from your code and at the end of each loop have posterior[t] = x_t.

Secondly, this example seems odd. Typically, with these kinds of inference problems we're looking to infer the parameters of a distribution given some observations. Here, though, the parameters of the distribution are known and instead you're sampling observations? Anyway, whatever, regardless of this I'm happy to roll with your example and show you how to speed it up.

Speed-up

To get started, remove anything which is not dependent on the value of t from the main for loop. Start by removing the generation of the random walk innovation from the for loop:

x_t = stats.uniform(0,1).rvs()

innov = stats.norm(loc=0).rvs(size=n)

for t in range(n):

x_prime = x_t + innov[t]

Of course it is also possible to move the random generation of u from the for loop:

x_t = stats.uniform(0,1).rvs()

innov = stats.norm(loc=0).rvs(size=n)

u = np.random.uniform(size=n)

for t in range(n):

x_prime = x_t + innov[t]

...

if u[t] <= alpha:

Another issue is that you're computing the current posterior p2 in every loop, which isn't necessary. In each loop you need to calculate the proposed posterior p1, and when the proposal is accepted you can update p2 to equal p1:

x_t = stats.uniform(0,1).rvs()

innov = stats.norm(loc=0).rvs(size=n)

u = np.random.uniform(size=n)

p2 = stats.beta(a=2,b=5).pdf(x_t)*stats.norm(loc=0,scale=2).pdf(x_t)

for t in range(n):

x_prime = x_t + innov[t]

p1 = stats.beta(a=2,b=5).pdf(x_prime)*stats.norm(loc=0,scale=2).pdf(x_prime)

...

if u[t] <= alpha:

x_t = x_prime # accept

p2 = p1

posterior[t] = x_t

A very minor improvement could be in importing the scipy stats functions directly into the name space:

from scipy.stats import norm, beta

Another very minor improvement is in noticing that the elif statement in your code doesn't do anything and so can be removed.

Putting this altogether and using more sensible variable names I came up with:

from scipy.stats import norm, beta

import numpy as np

def my_get_samples(n, sigma=1):

x_cur = np.random.uniform()

innov = norm.rvs(size=n, scale=sigma)

u = np.random.uniform(size=n)

post_cur = beta.pdf(x_cur, a=2, b=5) * norm.pdf(x_cur, loc=0, scale=2)

posterior = np.zeros(n)

for t in range(n):

x_prop = x_cur + innov[t]

post_prop = beta.pdf(x_prop, a=2, b=5) * norm.pdf(x_prop, loc=0, scale=2)

alpha = post_prop / post_cur

if u[t] <= alpha:

x_cur = x_prop

post_cur = post_prop

posterior[t] = x_cur

return posterior

Now, for a speed comparison:

%timeit get_samples(1000)

3.19 s ± 5.28 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)

%timeit my_get_samples(1000)

127 ms ± 484 µs per loop (mean ± std. dev. of 7 runs, 10 loops each)

That's a speed up of 25x

ESS

It's worth noting that brute speed isn't everything when it comes to MCMC algorithms. Really, what you're interested in is the number of independent(ish) draws you can make from the posterior per second. Typically, this is assessed with the ESS (effective sample size). You can improve the efficiency of your algorithm (and hence increase your effective samples drawn per second) by tuning your random walk.

To do so it is typical to make an initial trial run, i.e. samples = my_get_samples(1000). From this output calculate sigma = 2.38**2 * np.var(samples). This value should then be used to tune the random walk in your scheme as innov = norm.rvs(size=n, scale=sigma). The seemingly arbitrary occurrence of 2.38^2 has it's origin in:

Weak convergence and optimal scaling of random walk Metropolis

algorithms (1997). A. Gelman, W. R. Gilks, and G. O. Roberts.

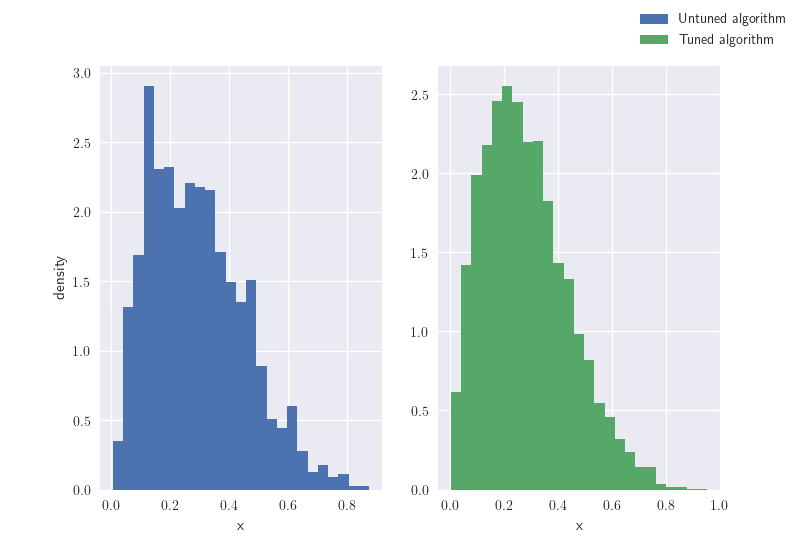

To illustrate the improvements tuning can make let's make two runs of my algorithm, one tuned and the other untuned, both using 10000 iterations:

x = my_get_samples(10000)

y = my_get_samples(10000, sigma=0.12)

fig, ax = plt.subplots(1, 2)

ax[0].hist(x, density=True, bins=25, label='Untuned algorithm', color='C0')

ax[1].hist(y, density=True, bins=25, label='Tuned algorithm', color='C1')

ax[0].set_ylabel('density')

ax[0].set_xlabel('x'), ax[1].set_xlabel('x')

fig.legend()

You can immediately see the improvements tuning has made to our algorithm's efficiency. Remember, both runs were made for the same number of iterations.

![enter image description here]()

Final thoughts

If your algorithm is taking a very long time to converge, or if your samples have large amounts of autocorrelation, I'd consider looking into Cython to squeeze out further speed optimisations.

I'd also recommend checking out the PyStan project. It takes a bit of getting used to, but its NUTS HMC algorithm will likely outperform any Metropolis--Hastings algorithm you can write by hand.

stats.beta().pdf()andstats.norm().pdf()are not generating random numbers. They're evaluating the density of the probability distributions. – Aam