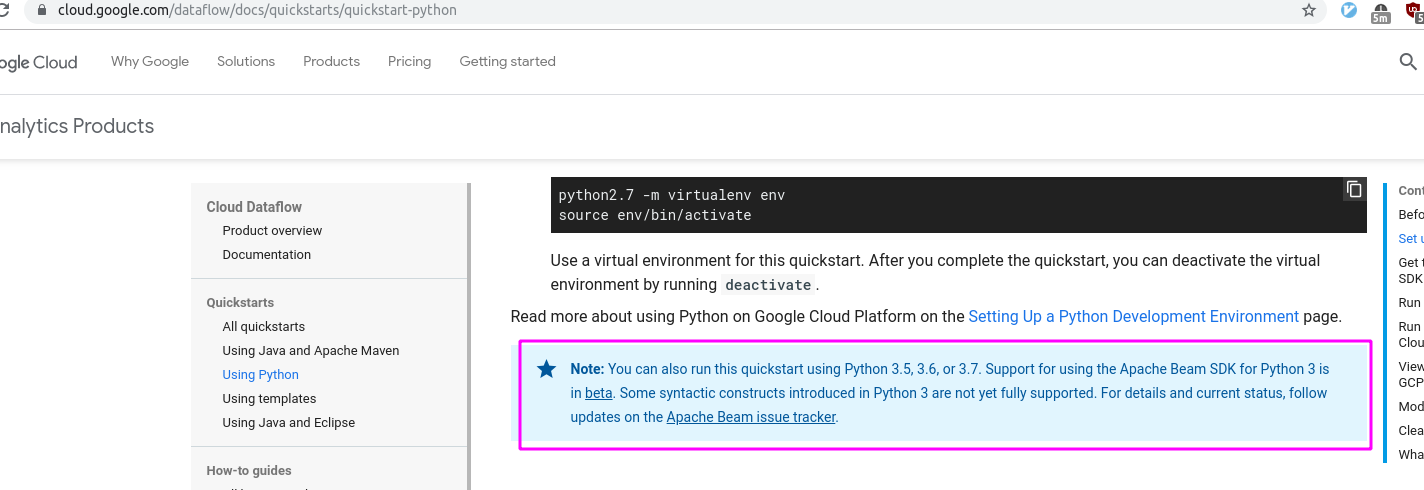

I'm very new to GCP and Dataflow. However, I would like to start testing and deploying a few flows using Dataflow on GCP. According to the documentation and everything else I have read about Dataflow, it is mandatory to use Apache Beam. Following the official documentation here, the supported version of Python is 2.7.

Honestly, this is fairly disappointing, because Python 2.x is going away once official support ends and everybody is working with version 3.x. Nevertheless, I want to know if someone knows how to get Beam and GCP Dataflow running on Python 3.x.

I saw this video, and somehow this person reached this wonderful milestone: apparently it runs on Python 3.5.

Update:

Guys, I just want to raise a thought that has crossed my mind while I have been struggling with Dataflow. I feel highly disappointed by how challenging it is to get hands-on with this tool, in either the Java or the Python version. On the Python side, there are constraints around version 3, which is pretty much the current standard. On the other hand, Java has issues running on version 11, so I had to tweak my code a bit to run on version 8, and then I started to struggle with many incompatibilities in the code. In short, if GCP really wants to move forward and become #1, there is a lot to improve. :disappointed:

Workaround:

I downgraded my Java version to JDK 8 and installed Maven, and now my Eclipse setup is working with Apache Beam.
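In case it helps anyone hitting the same issue, here is a small sketch for confirming which Java version a project is actually running on after the downgrade. It only reads the standard `java.specification.version` system property, which reports `1.8` on Java 8 and `11` on Java 11. The class name `JavaVersionCheck` and the `majorVersion` helper are my own illustrative names, not part of Apache Beam.

```java
public class JavaVersionCheck {

    // Converts a "java.specification.version" value into a major version number.
    // Java 8 and earlier report "1.x" (for example "1.8"); Java 9+ report "9", "11", ...
    static int majorVersion(String specVersion) {
        String[] parts = specVersion.split("\\.");
        if (parts[0].equals("1") && parts.length > 1) {
            return Integer.parseInt(parts[1]);
        }
        return Integer.parseInt(parts[0]);
    }

    public static void main(String[] args) {
        String spec = System.getProperty("java.specification.version");
        int major = majorVersion(spec);
        System.out.println("Running on Java " + major);
        if (major != 8) {
            // Assumption based on my own setup: JDK 8 is what worked for me.
            System.out.println("Note: this is not JDK 8, the version that worked for me.");
        }
    }
}
```

Running this from Eclipse (or through Maven) shows the JVM the project itself uses, which can differ from what `java -version` prints in a terminal.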

I finally solved it, but GCP, please really consider improving and expanding support for the most recent versions of Java and Python.

Thanks so much