If Moore's Law holds true and CPUs/GPUs keep getting faster, will software (and, by extension, you software developers) still push the boundaries far enough that you'll need to optimize your code? Or will a naive factorial solution be good enough (etc.)?

Poor code can always overcome CPU speed.

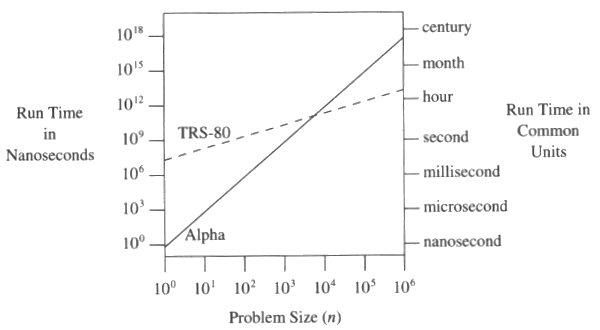

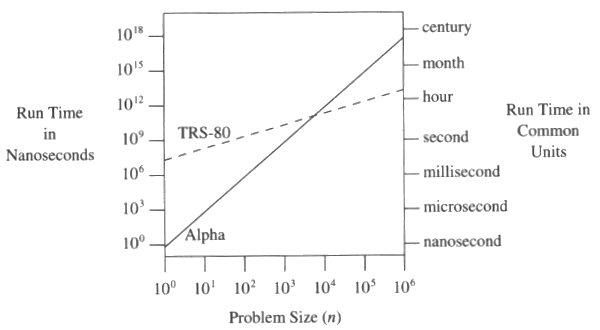

For an excellent example, go to this Coding Horror column and scroll down to the section describing the book Programming Pearls. Reproduced there is a graph showing how, for a certain algorithm, a TRS-80 with a 4.77MHz 8-bit processor can beat a 32-bit Alpha chip.

(source: typepad.com)
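To make the point concrete: as far as I recall, the Programming Pearls comparison uses the maximum-sum subvector problem, with a cubic algorithm on the fast machine and a linear one on the slow machine. Below is a minimal Python sketch of both approaches. The function names are my own, and it assumes an empty subarray (sum 0) is allowed; it illustrates the growth rates, not the book's exact code.

```python
from collections.abc import Sequence


def max_subarray_cubic(xs: Sequence[float]) -> float:
    """Brute force: try every (i, j) range and re-sum it from scratch. O(n^3)."""
    best = 0.0  # assumption: the empty subarray (sum 0) is allowed
    n = len(xs)
    for i in range(n):
        for j in range(i, n):
            total = 0.0
            for k in range(i, j + 1):
                total += xs[k]
            best = max(best, total)
    return best


def max_subarray_linear(xs: Sequence[float]) -> float:
    """Single scan: track the best sum of a range ending at each position. O(n)."""
    best = 0.0
    ending_here = 0.0
    for x in xs:
        # Either extend the current range or start over (drop a negative prefix).
        ending_here = max(ending_here + x, 0.0)
        best = max(best, ending_here)
    return best
```

For 10 elements the difference is invisible; for a million elements the cubic version does on the order of 10^17 inner-loop steps versus 10^6, which no realistic clock-speed gap can make up.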

The current trend in speedups is to add more cores, 'cause making individual cores go faster is hard. So aggregate speed goes up, but inherently sequential tasks don't always benefit.
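The standard way to quantify "sequential tasks don't always benefit" is Amdahl's law: if only a fraction `p` of the runtime can be spread over `n` cores, the overall speedup is `1 / ((1 - p) + p / n)`. A minimal sketch (the function name and parameters are mine):

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Amdahl's law: overall speedup when only part of the work parallelizes.

    parallel_fraction: share of the original runtime that can be split across cores (0..1).
    cores: number of cores the parallel part is spread over.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


# Even a 90%-parallel program can never get more than 10x faster, however many cores.
for cores in (1, 2, 8, 64, 1024):
    print(cores, round(amdahl_speedup(0.9, cores), 2))
```

The serial 10% becomes the whole bottleneck as cores grow, which is exactly where optimizing the sequential part pays off.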

The saying "there is no problem that brute force and ignorance cannot overcome" is not always true.

2x the processing power doesn't do much to ameliorate the awfulness of your lousy n^2 search.
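The answer doesn't say which search it has in mind, so here is an illustrative stand-in: checking a list for duplicates by comparing every pair versus remembering what has been seen. With the quadratic version, doubling the input quadruples the work, so 2x hardware only lets you handle about 1.41x (the square root of 2) as much data in the same time.

```python
from collections.abc import Hashable, Sequence


def has_duplicate_quadratic(items: Sequence[Hashable]) -> bool:
    """Compare every pair: about n*(n-1)/2 comparisons."""
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j]:
                return True
    return False


def has_duplicate_linear(items: Sequence[Hashable]) -> bool:
    """Remember what we've seen in a hash set: expected O(n)."""
    seen = set()
    for item in items:
        if item in seen:
            return True
        seen.add(item)
    return False
```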


The faster computers get, the more we expect them to do.

Whether it's faster code for more polygons in a video game or faster algorithms for trading in financial markets, if there's a competitive advantage to being faster, optimization will still be important. You don't have to outrace the lion that's chasing you and your buddy; you just have to outrace your buddy.

Until all programmers write optimal code the first time around, there will always be a place for optimization. Meanwhile, the real question is this: what should we optimize for first?

Moore's Law is about how many transistors we can pack on a chip; it says nothing about those transistors switching at ever-increasing speeds. Indeed, in the last few years clock speeds have more or less stagnated: we just keep getting more and more "cores" (essentially complete CPUs) per chip. Taking advantage of that requires parallelizing the code, so if you write "naively", the magical optimizer of the future will be busy finding hidden parallelism in your code so it can farm it out to multiple cores (more realistically, for the foreseeable future, you'll have to help your compiler out a lot ;-).
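"Helping out your compiler" in practice often means restructuring the work yourself into independent pieces. A minimal sketch, assuming the task is a simple sum of squares (all names here are hypothetical): the data is cut into chunks that share no state, each chunk is mapped onto a worker, and the partial results are combined. The executor is passed in so the same code works with threads or processes.

```python
from collections.abc import Sequence
from concurrent.futures import Executor, ThreadPoolExecutor


def sum_of_squares(chunk: Sequence[int]) -> int:
    # Independent unit of work: touches only its own chunk, no shared state.
    return sum(x * x for x in chunk)


def split(data: Sequence[int], n_chunks: int) -> list[Sequence[int]]:
    # Cut the input into roughly equal contiguous slices, one per worker.
    size = max(1, -(-len(data) // n_chunks))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def parallel_sum_of_squares(data: Sequence[int], executor: Executor, n_chunks: int) -> int:
    # Map the independent chunks onto workers, then combine the partial sums.
    partials = executor.map(sum_of_squares, split(data, n_chunks))
    return sum(partials)


with ThreadPoolExecutor(max_workers=4) as pool:
    print(parallel_sum_of_squares(list(range(1000)), pool, n_chunks=4))
```

In CPython, threads won't speed up CPU-bound pure-Python code because of the GIL; for real multi-core gains you would pass a `ProcessPoolExecutor` instead (created under an `if __name__ == "__main__":` guard). The point is the decomposition, which no future CPU does for you automatically.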

Software is getting slower more rapidly than hardware becomes faster.

P.S. On a more serious note: as the computing model moves to parallel processing, code optimization becomes more important. If you optimize your code 2x and it runs in 5 minutes instead of 10 on a single box, that may not be very impressive; the next computer with 2x the speed will make up the difference. But imagine you run your program on 1000 CPUs. Then any optimization saves A LOT of machine time. And electricity. Optimize and save the Earth! :)
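Worked through with the numbers from that paragraph, assuming each of the 1000 CPUs runs the same 10-minute job and gets the same 2x speedup:

```latex
\underbrace{1000}_{\text{CPUs}} \times \underbrace{(10 - 5)\ \text{min}}_{\text{saved per CPU}} = 5000\ \text{CPU-minutes} \approx 83\ \text{CPU-hours per run}
```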

Computational tasks seem to be divided into roughly two broad groups.

- Problems with bounded computational needs.

- Problems with unbounded computational needs.

Most problems fit in that first category. For example, real-time 3D rasterisation. For a good long time, this problem was out of reach of typical consumer electronics. No convincing 3D games or other programs existed that could produce real-time worlds on an Apple ][. Eventually, though, technology caught up, and now this problem is achievable. A similar problem is simulation of protein folding. Until quite recently, it was impossible to transform a known peptide sequence into the resulting protein molecule, but modern hardware makes this possible in a few hours or minutes of processing.

There are a few problems, though, that by their nature can absorb all of the computational resources available. Most of these are dynamic physical simulations. Obviously it's possible to build a computational model of, say, the weather. We've been doing this almost as long as we've had computers. However, such a complex system benefits from increased accuracy: simulation at ever finer space and time resolution improves the predictions bit by bit. But no matter how accurate any given simulation is, there's room for more accuracy, with benefits that follow.
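A rough illustration of why such simulations can absorb any amount of hardware. Assume (my assumption, not the answer's) a 3D domain of size L simulated for time T on a grid with spacing Δx, using explicit time stepping whose stability condition (the CFL condition) forces the time step Δt to shrink in proportion to Δx:

```latex
\text{cost} \;\propto\; \underbrace{\left(\frac{L}{\Delta x}\right)^{3}}_{\text{grid cells}} \cdot \underbrace{\frac{T}{\Delta t}}_{\text{time steps}},
\qquad \Delta t \propto \Delta x
\;\Longrightarrow\; \text{cost} \propto \Delta x^{-4},
\qquad \frac{\text{cost}(\Delta x/2)}{\text{cost}(\Delta x)} = 2^{4} = 16
```

Under these assumptions, a machine 16 times faster buys just one halving of the grid spacing, so a 2x faster simulation code is worth as much as a full hardware generation.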

Both types of problems have a very major use for optimization of all sorts. The second type is fairly obvious. If the program doing the simulation is improved a bit, then it runs a bit faster, giving results a bit sooner or with a bit more accuracy.

The first one is a bit more subtle, though. For a certain period, no amount of optimization is worthwhile, since no computer exists that is fast enough for it. After a while, optimization is somewhat pointless, since hardware that runs it is many times faster than needed. But there is a narrow window during which an optimal solution will run acceptably on current hardware but a suboptimal solution won't. During this period, carefully considered optimization can be the difference between a winning first-to-market product and an also-ran.

There is more to optimization than speed. Moore's law doesn't apply to computer memory. Also, optimization is often a matter of compiling your code to take advantage of CPU-specific instructions. These are just a few of the optimizations I can think of that faster CPUs will not solve.
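One memory optimization no faster CPU will do for you is choosing a compact representation. A minimal sketch in Python (helper names are hypothetical): a list of floats stores a pointer per element plus a separate boxed float object for each value, while `array.array("d")` packs raw 8-byte doubles contiguously. On a typical 64-bit CPython that is roughly 32 bytes versus 8 bytes per value, though exact sizes depend on the interpreter build.

```python
import array
import sys


def list_of_floats_bytes(values: list[float]) -> int:
    # A list stores one pointer per element; each float is a separate heap object.
    return sys.getsizeof(values) + sum(sys.getsizeof(v) for v in values)


def packed_array_bytes(values: array.array) -> int:
    # array('d') stores raw C doubles in one contiguous buffer, included in getsizeof.
    return sys.getsizeof(values)


floats = [float(i) for i in range(100_000)]
packed = array.array("d", floats)
print(list_of_floats_bytes(floats), packed_array_bytes(packed))
```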

Optimization will always be necessary, because the mitigating factor to Moore's Law is bloatware.

Other answers seem to be concentrating on the speed side of the issue. That's fine. The real issue I can see is that if you optimise your code, it'll take less energy to run it. Your datacenter runs cooler, your laptop lasts longer, your phone goes for more than a day on a charge. There's a real selection pressure at this end of the market.

Optimisation will continue to be needed in many situations, particularly:

- Real-time systems, where CPU time is at a premium

- Embedded systems, where memory is at a premium

- Servers, where many processes are demanding attention simultaneously

- Games, where 3-D ray tracing, audio, AI, and networking can make for a very demanding program

The world changes, and we need to change with it. When I first began, being a good programmer was all about knowing all of the little micro-optimizations you could do to squeeze another 0.2% out of a routine by manipulating pointers in C, and other things like that. Now, I spend much more of my time working on making algorithms more understandable, since in the long run, that's more valuable. But there are always things to optimize, and always bottlenecks. More resources means people expect more from their systems, so being sloppy isn't a valid option for a professional.

Optimization strategies change as you add more speed/memory/resources to work with, though.

Some optimization has nothing to do with speed. For example, when optimizing multithreaded algorithms, you may be optimizing for fewer shared locks. Adding more processing power in the form of speed (or worse, processors) may not have any effect if your current processing power is spent waiting on locks. Adding processors can even make your overall performance drop if you're doing things incorrectly. Optimization in this case means trying to reduce the number of locks and keep them as fine-grained as possible, instead of trying to reduce the number of instructions.
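One common way to make locking finer-grained is lock striping: instead of one global lock around a shared map, keys are spread over N independent locks, so threads touching keys in different stripes never wait on each other. A minimal sketch; the `StripedCounter` class is hypothetical, and in CPython the GIL limits the real gain, so the pattern matters most in languages with truly parallel threads (Java, C++, Go, and so on).

```python
import threading
from collections import defaultdict


class StripedCounter:
    """Per-key counters guarded by one of N locks instead of a single global lock."""

    def __init__(self, stripes: int = 16) -> None:
        self._locks = [threading.Lock() for _ in range(stripes)]
        # Each stripe owns its own dict, so no data is shared across stripes.
        self._counts = [defaultdict(int) for _ in range(stripes)]

    def _stripe(self, key) -> int:
        return hash(key) % len(self._locks)

    def increment(self, key, amount: int = 1) -> None:
        i = self._stripe(key)
        with self._locks[i]:  # only this stripe is locked; other keys proceed
            self._counts[i][key] += amount

    def get(self, key) -> int:
        i = self._stripe(key)
        with self._locks[i]:
            return self._counts[i].get(key, 0)
```

The trade-off: more stripes means less contention but more memory and more complexity for operations that need a consistent view of all keys at once.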

As long as some people write slow code that uses excessive resources, others will have to optimize their code to provide those resources faster and get the speed back.

I find it amazing how creative some developers can get writing suboptimal code. At my previous job, for example, one guy wrote a function to compute the time between two dates by repeatedly incrementing one date and comparing it with the other.
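A sketch of that anecdote in Python, assuming day granularity (the original function's units aren't stated; both function names are hypothetical). The loop does one step per day between the dates, while subtracting two `date` objects gives a `timedelta` directly.

```python
from datetime import date, timedelta


def days_between_naive(start: date, end: date) -> int:
    # The approach from the anecdote: step one day at a time until the dates meet.
    # Cost grows with the gap, and it silently returns 0 when start is after end.
    days = 0
    current = start
    while current < end:
        current += timedelta(days=1)
        days += 1
    return days


def days_between(start: date, end: date) -> int:
    # Date subtraction yields a timedelta in constant time (negative if end < start).
    return (end - start).days
```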

Computer speed can't always overcome human error. The question might be phrased, "Will CPUs become fast enough that compilers can take the time to catch (and fix) implementation problems?" Obviously, code optimization will be needed (for the foreseeable future) to fix Shlemiel-the-painter-type problems.
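"Shlemiel the painter" is Joel Spolsky's name for algorithms that redo work from the beginning on every step; the classic case is C's `strcat`, which walks the whole destination string to find its end before each append. A minimal Python emulation (function names hypothetical) that counts the scanning steps: for n pieces of length k, the scans cost k·n(n−1)/2 steps, i.e. quadratic, whereas `str.join` computes the total size once and copies each piece once.

```python
def shlemiel_join(pieces: list[str]) -> tuple[str, int]:
    # strcat-style: before each append, walk from the start to find the end.
    buf: list[str] = []
    steps = 0
    for piece in pieces:
        end = 0
        while end < len(buf):  # re-scans everything written so far
            end += 1
            steps += 1
        buf.extend(piece)  # append the characters at the end just found
    return "".join(buf), steps


def fixed_join(pieces: list[str]) -> str:
    # str.join sizes the result once and copies each piece once: linear time.
    return "".join(pieces)
```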

Software development is still a matter of telling the computer exactly what to do. What "increasingly fast" CPUs will give us is the ability to design increasingly abstract and natural programming languages, eventually to the point where computers take our intentions and implement all the low-level details... someday.

A computer is like a teenager's room.

It will never be big enough to hold all the junk.

I think the result of all this is that computing power is getting cheaper, so the programmer can spend less time accomplishing a given task. For example, higher-level languages like Java or Python are almost always slower than lower-level languages like assembly. But they are so much easier for the programmer that new things become possible. I think the end destination will be that computers can communicate directly with humans and compile human speech into bytecode. Then programmers will cease to exist. (And computers might take over the world.)

Right or wrong, it's happening already in my opinion, and it's not necessarily a bad thing. Better hardware gives the developer the opportunity to focus more energy on solving the problem at hand rather than worrying about the extra 10% of memory utilization.

Optimization is inarguable, but only when it's needed. I think the additional hardware power is simply decreasing the instances where it is truly needed. However, whoever is writing the software to launch a rocket to the moon had better have his code optimized :)

Given that computers are about a thousand times faster than they were a few decades ago, but don't generally appear much faster, I'd say that we have a LONG way to go before we stop worrying about optimization. The problem is that as computers become more powerful, we have the computers do more and more work for us so that we can work at higher levels of abstraction. Optimization at each level of abstraction remains important.

Yes, computers do many things a lot faster: You can draw a Mandelbrot in minutes that used to require days of computer time. A GIF loads near-instantaneously, rather than taking visible seconds to be drawn on the screen. Many things are faster. But browsing, for example, is not that much faster. Word processing is not that much faster. As computers get more powerful, we just expect more, and we make computers do more.

Optimization will be important for the foreseeable future. However, micro-optimizations are far less important than they used to be. The most important optimization these days may be the choice of algorithm: do you choose an O(n log n) or an O(n^2) algorithm, and so on.
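A rough operation count, ignoring constant factors, shows why algorithm choice dwarfs micro-optimization. Taking n = 10^6 as an example size:

```latex
n = 10^{6}:\qquad n^{2} = 10^{12}, \qquad n \log_2 n \approx 10^{6} \times 19.9 \approx 2 \times 10^{7}, \qquad \frac{n^{2}}{n \log_2 n} \approx 5 \times 10^{4}
```

A gap of tens of thousands of times is far beyond what shaving a few percent off the inner loop can recover.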

The cost of optimization is very low, so I doubt it will become necessary to drop it. The real problem is finding tasks to utilize all the computing power that's out there -- so rather than drop optimization, we will be optimizing our ability to do things in parallel.

Eventually we won't be able to get faster; eventually we will be limited by space, which is why you see newer processors staying under 3 GHz and going multi-core. So yes, optimization is still a necessity.

Optimizing code will always be required to some degree, and not just to increase execution speed and lower memory usage. Finding the most energy-efficient way of processing information, for example, will be a major requirement in data centres. Profiling skills are going to become a lot more important!

Yes, we are at the point where optimization matters and will be there in foreseeable future. Because:

- RAM speeds increase at a slower pace than CPU speeds. Thus there is a still-widening performance gap between CPU and RAM, and if your program accesses RAM a lot, you have to optimize access patterns to use the cache efficiently. Otherwise the super-fast CPU will be idle 90% of the time, just waiting for data to arrive.

- The number of cores keeps increasing. Does your code benefit from each added core, or does it run on a single core? Here optimization means parallelization, and, depending on the task at hand, it may be hard.

- CPU speeds will never ever catch up with exponential algorithms and other brute-force approaches, as nicely illustrated by this answer.
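A small illustration of the last point, using naive recursive Fibonacci as a stand-in for an exponential algorithm (function names are mine). Each increase of n by one multiplies the naive call count by roughly 1.618, so doubling hardware speed only buys about 1.44 more values of n, while caching intermediate results makes the work linear in n.

```python
from functools import lru_cache


def fib_naive(n: int, counter: list[int]) -> int:
    # Exponential: each call spawns two more; counter[0] tallies the calls made.
    counter[0] += 1
    if n < 2:
        return n
    return fib_naive(n - 1, counter) + fib_naive(n - 2, counter)


@lru_cache(maxsize=None)
def fib_memo(n: int) -> int:
    # Each n is computed once and then served from the cache: linear work.
    if n < 2:
        return n
    return fib_memo(n - 1) + fib_memo(n - 2)
```

For example, `fib_naive(20, counter)` makes 21,891 calls, while `fib_memo(20)` computes only 21 distinct values.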

Let's hope network speeds keep up so we can shovel enough data over the wire to keep up with the CPUs...

As mentioned, there will always be bottlenecks.

Even if your CPU had as many transistors as there are subatomic particles in the universe, and its clock ran at the frequency of hard cosmic rays, you could still beat it.

If you want to stay ahead of the latest CPU clock, just add another level of nesting in your loops, or add another level of nesting in your subroutine calls.

Or if you want to be really professional, add another layer of abstraction.

It's not hard :-)

Even though CPUs get faster and faster, you can always optimize:

- network throughput,

- disk seeks,

- disk usage,

- memory usage,

- database transactions,

- number of system calls,

- scheduling and locking granularity,

- garbage collection.

(These are real-world examples I've seen during the last six months.)
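As one example from that list, reducing the number of system calls is often just a matter of buffering. A minimal sketch: `CountingRaw` is a hypothetical stand-in for an OS file descriptor in which every `write()` call models one system call, and `io.BufferedWriter` (from the standard library) batches many small writes into a few large ones.

```python
import io


class CountingRaw(io.RawIOBase):
    """Stand-in for an OS file descriptor: each write() call models one system call."""

    def __init__(self) -> None:
        super().__init__()
        self.calls = 0
        self.data = bytearray()

    def writable(self) -> bool:
        return True

    def write(self, b) -> int:
        self.calls += 1
        self.data += b
        return len(b)


def write_bytes_one_by_one(payload: bytes, buffered: bool) -> CountingRaw:
    raw = CountingRaw()
    sink = io.BufferedWriter(raw, buffer_size=4096) if buffered else raw
    for i in range(len(payload)):
        sink.write(payload[i:i + 1])  # one byte per write: the worst case
    sink.flush()
    return raw
```

Writing 10,240 bytes one at a time costs 10,240 modeled calls unbuffered, but only a handful through the 4 KiB buffer; on a real file descriptor each of those avoided calls is a kernel round trip.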

Different parts of complex computer systems are considered expensive at different points of computing history. You have to measure the bottlenecks and judge where to put the effort.
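Measuring comes first. A minimal sketch using Python's built-in `cProfile` and `pstats` modules; `slow_part`, `fast_part`, and `workload` are hypothetical placeholders for your own code. The report is sorted by cumulative time, so the hot spot surfaces near the top.

```python
import cProfile
import io
import pstats


def slow_part(n: int) -> int:  # hypothetical hot spot
    return sum(i * i for i in range(n))


def fast_part(n: int) -> int:  # hypothetical cheap step (closed form)
    return n * (n - 1) // 2


def workload() -> None:
    slow_part(200_000)
    fast_part(200_000)


def profile_report(func, top: int = 5) -> str:
    # Profile one call of func and return the top entries by cumulative time.
    profiler = cProfile.Profile()
    profiler.enable()
    func()
    profiler.disable()
    out = io.StringIO()
    pstats.Stats(profiler, stream=out).sort_stats("cumulative").print_stats(top)
    return out.getvalue()


print(profile_report(workload))
```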
