Thanks to Olivia for this great and detailed post!

I recently started writing a streaming app for the iPad Pro with Xamarin.Forms. This article helped a lot, and I found many references to it across the web.

I suppose many people have already rewritten Olivia's example in Xamarin, and I don't claim to be the best programmer in the world. But since nobody has posted a C#/Xamarin version here yet, and I would like to give something back to the community for the great post above, here is my C#/Xamarin version. Maybe it helps someone speed up progress on her or his project.

I stayed close to Olivia's example and even kept most of her comments.

First, because I prefer dealing with enums rather than raw numbers, I declared this NALU enum.

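For the sake of completeness I also added some "exotic" NALU types I found on the internet (types 24 to 29 are the aggregation and fragmentation packet types from the RTP payload format for H.264, RFC 6184):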

public enum NALUnitType : byte

{

NALU_TYPE_UNKNOWN = 0,

NALU_TYPE_SLICE = 1,

NALU_TYPE_DPA = 2,

NALU_TYPE_DPB = 3,

NALU_TYPE_DPC = 4,

NALU_TYPE_IDR = 5,

NALU_TYPE_SEI = 6,

NALU_TYPE_SPS = 7,

NALU_TYPE_PPS = 8,

NALU_TYPE_AUD = 9,

NALU_TYPE_EOSEQ = 10,

NALU_TYPE_EOSTREAM = 11,

NALU_TYPE_FILL = 12,

NALU_TYPE_13 = 13,

NALU_TYPE_14 = 14,

NALU_TYPE_15 = 15,

NALU_TYPE_16 = 16,

NALU_TYPE_17 = 17,

NALU_TYPE_18 = 18,

NALU_TYPE_19 = 19,

NALU_TYPE_20 = 20,

NALU_TYPE_21 = 21,

NALU_TYPE_22 = 22,

NALU_TYPE_23 = 23,

NALU_TYPE_STAP_A = 24,

NALU_TYPE_STAP_B = 25,

NALU_TYPE_MTAP16 = 26,

NALU_TYPE_MTAP24 = 27,

NALU_TYPE_FU_A = 28,

NALU_TYPE_FU_B = 29,

}

Mostly for convenience, I also defined an additional dictionary for the NALU descriptions:

public static Dictionary<NALUnitType, string> GetDescription { get; } =

new Dictionary<NALUnitType, string>()

{

{ NALUnitType.NALU_TYPE_UNKNOWN, "Unspecified (non-VCL)" },

{ NALUnitType.NALU_TYPE_SLICE, "Coded slice of a non-IDR picture (VCL) [P-frame]" },

{ NALUnitType.NALU_TYPE_DPA, "Coded slice data partition A (VCL)" },

{ NALUnitType.NALU_TYPE_DPB, "Coded slice data partition B (VCL)" },

{ NALUnitType.NALU_TYPE_DPC, "Coded slice data partition C (VCL)" },

{ NALUnitType.NALU_TYPE_IDR, "Coded slice of an IDR picture (VCL) [I-frame]" },

{ NALUnitType.NALU_TYPE_SEI, "Supplemental Enhancement Information [SEI] (non-VCL)" },

{ NALUnitType.NALU_TYPE_SPS, "Sequence Parameter Set [SPS] (non-VCL)" },

{ NALUnitType.NALU_TYPE_PPS, "Picture Parameter Set [PPS] (non-VCL)" },

{ NALUnitType.NALU_TYPE_AUD, "Access Unit Delimiter [AUD] (non-VCL)" },

{ NALUnitType.NALU_TYPE_EOSEQ, "End of Sequence (non-VCL)" },

{ NALUnitType.NALU_TYPE_EOSTREAM, "End of Stream (non-VCL)" },

{ NALUnitType.NALU_TYPE_FILL, "Filler data (non-VCL)" },

{ NALUnitType.NALU_TYPE_13, "Sequence Parameter Set Extension (non-VCL)" },

{ NALUnitType.NALU_TYPE_14, "Prefix NAL Unit (non-VCL)" },

{ NALUnitType.NALU_TYPE_15, "Subset Sequence Parameter Set (non-VCL)" },

{ NALUnitType.NALU_TYPE_16, "Reserved (non-VCL)" },

{ NALUnitType.NALU_TYPE_17, "Reserved (non-VCL)" },

{ NALUnitType.NALU_TYPE_18, "Reserved (non-VCL)" },

{ NALUnitType.NALU_TYPE_19, "Coded slice of an auxiliary coded picture without partitioning (non-VCL)" },

{ NALUnitType.NALU_TYPE_20, "Coded Slice Extension (non-VCL)" },

{ NALUnitType.NALU_TYPE_21, "Coded Slice Extension for Depth View Components (non-VCL)" },

{ NALUnitType.NALU_TYPE_22, "Reserved (non-VCL)" },

{ NALUnitType.NALU_TYPE_23, "Reserved (non-VCL)" },

{ NALUnitType.NALU_TYPE_STAP_A, "STAP-A Single-time Aggregation Packet (non-VCL)" },

{ NALUnitType.NALU_TYPE_STAP_B, "STAP-B Single-time Aggregation Packet (non-VCL)" },

{ NALUnitType.NALU_TYPE_MTAP16, "MTAP16 Multi-time Aggregation Packet (non-VCL)" },

{ NALUnitType.NALU_TYPE_MTAP24, "MTAP24 Multi-time Aggregation Packet (non-VCL)" },

{ NALUnitType.NALU_TYPE_FU_A, "FU-A Fragmentation Unit (non-VCL)" },

{ NALUnitType.NALU_TYPE_FU_B, "FU-B Fragmentation Unit (non-VCL)" }

};
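Both the enum and the dictionary are keyed by the 5-bit type field in the first byte after the start code. As a minimal sketch (the class `NalHeaderSketch` and its methods are hypothetical names of mine, not part of Olivia's code or of any library), the header byte could be split into its three fields like this. It uses plain `int` keys so that it compiles on its own, and `TryGetValue` because the ids 30 and 31 have no entry in the table above and an indexer lookup would throw a `KeyNotFoundException` for them:

```csharp
using System;
using System.Collections.Generic;

public static class NalHeaderSketch
{
    // Hypothetical helper: splits the one-byte H.264 NAL unit header into its fields.
    public static (bool ForbiddenZeroBit, int NalRefIdc, int NalUnitType) Parse(byte header)
    {
        bool forbidden = (header & 0x80) != 0;  // 1000 0000, must be 0 in a valid stream
        int nalRefIdc = (header & 0x60) >> 5;   // 0110 0000, shifted down to the range 0..3
        int nalUnitType = header & 0x1F;        // 0001 1111, range 0..31
        return (forbidden, nalRefIdc, nalUnitType);
    }

    // Looks up a description without throwing for ids that are missing from the table.
    public static string Describe(IReadOnlyDictionary<int, string> table, int nalUnitType)
    {
        return table.TryGetValue(nalUnitType, out string text)
            ? text
            : $"Unknown NALU type {nalUnitType}";
    }
}
```

For example, the header byte `0x67` splits into NRI 3 and type 7 (SPS), and `0x65` into NRI 3 and type 5 (IDR).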

Here comes my main decoding procedure. I assume the received frame arrives as a raw byte array:

public void Decode(byte[] frame)

{

uint frameSize = (uint)frame.Length;

SendDebugMessage($"Received frame of {frameSize} bytes.");

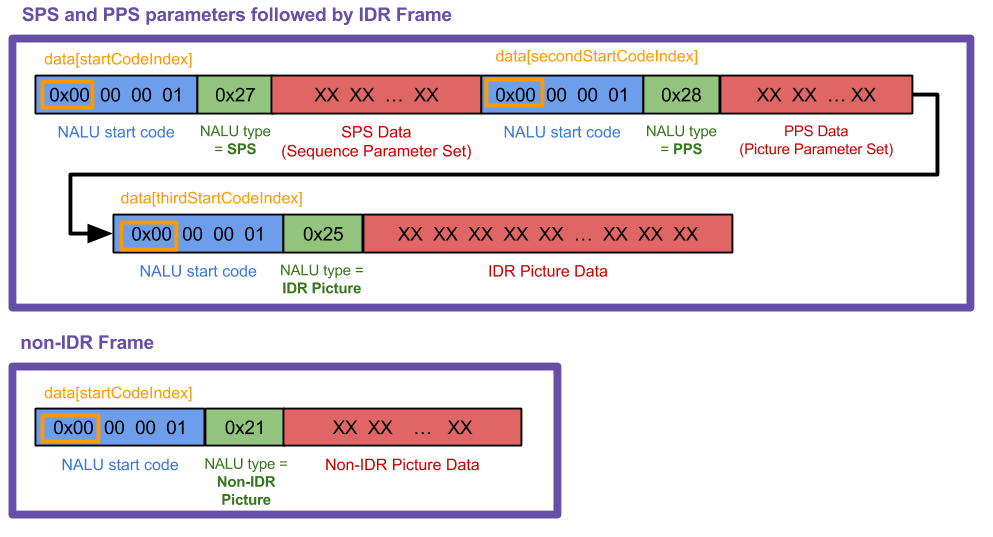

// I know what my H.264 data source's NALUs look like, so I know the start code index is always 0.

// If you don't know where it starts, you can use a for loop similar to how I find the 2nd and 3rd start codes.

uint firstStartCodeIndex = 0;

uint secondStartCodeIndex = 0;

uint thirdStartCodeIndex = 0;

// length of NALU start code in bytes.

// for H.264 the start code is 4 bytes and looks like this: 0x00 00 00 01

const uint naluHeaderLength = 4;

// check the first 8bits after the NALU start code, mask out bits 0-2, the NALU type ID is in bits 3-7

uint startNaluIndex = firstStartCodeIndex + naluHeaderLength;

byte startByte = frame[startNaluIndex];

int naluTypeId = startByte & 0x1F; // 0001 1111

NALUnitType naluType = (NALUnitType)naluTypeId;

SendDebugMessage($"1st Start Code Index: {firstStartCodeIndex}");

SendDebugMessage($"1st NALU Type: '{NALUnit.GetDescription[naluType]}' ({(int)naluType})");

// bits 1 and 2 are the NRI

int nalRefIdc = (startByte & 0x60) >> 5; // 0110 0000, shifted down to the range 0-3

SendDebugMessage($"1st NRI (NAL Ref Idc): {nalRefIdc}");

// IF the very first NALU type is an IDR -> handle it like a slice frame (-> re-cast it to type 1 [Slice])

if (naluType == NALUnitType.NALU_TYPE_IDR)

{

naluType = NALUnitType.NALU_TYPE_SLICE;

}

// if we haven't already set up our format description with our SPS PPS parameters,

// we can't process any frames except type 7 that has our parameters

if (naluType != NALUnitType.NALU_TYPE_SPS && this.FormatDescription == null)

{

SendDebugMessage("Video Error: Frame is not an I-Frame and format description is null.");

return;

}

// NALU type 7 is the SPS parameter NALU

if (naluType == NALUnitType.NALU_TYPE_SPS)

{

// find where the second PPS 4byte start code begins (0x00 00 00 01)

// from which we also get the length of the first SPS code

for (uint i = firstStartCodeIndex + naluHeaderLength; i < firstStartCodeIndex + 40 && i + 3 < frameSize; i++)

{

if (frame[i] == 0x00 && frame[i + 1] == 0x00 && frame[i + 2] == 0x00 && frame[i + 3] == 0x01)

{

secondStartCodeIndex = i;

this.SpsSize = secondStartCodeIndex; // includes the header in the size

SendDebugMessage($"2nd Start Code Index: {secondStartCodeIndex} -> SPS Size: {this.SpsSize}");

break;

}

}

// find what the second NALU type is

startByte = frame[secondStartCodeIndex + naluHeaderLength];

naluType = (NALUnitType)(startByte & 0x1F);

SendDebugMessage($"2nd NALU Type: '{NALUnit.GetDescription[naluType]}' ({(int)naluType})");

// bits 1 and 2 are the NRI

nalRefIdc = (startByte & 0x60) >> 5; // 0110 0000, shifted down to the range 0-3

SendDebugMessage($"2nd NRI (NAL Ref Idc): {nalRefIdc}");

}

// type 8 is the PPS parameter NALU

if (naluType == NALUnitType.NALU_TYPE_PPS)

{

// find where the NALU after this one starts so we know how long the PPS parameter is

for (uint i = this.SpsSize + naluHeaderLength; i < this.SpsSize + 30 && i + 3 < frameSize; i++)

{

if (frame[i] == 0x00 && frame[i + 1] == 0x00 && frame[i + 2] == 0x00 && frame[i + 3] == 0x01)

{

thirdStartCodeIndex = i;

this.PpsSize = thirdStartCodeIndex - this.SpsSize;

SendDebugMessage($"3rd Start Code Index: {thirdStartCodeIndex} -> PPS Size: {this.PpsSize}");

break;

}

}

// allocate enough data to fit the SPS and PPS parameters into our data objects.

// VTD doesn't want you to include the start code header (4 bytes long) so we subtract 4 here

byte[] sps = new byte[this.SpsSize - naluHeaderLength];

byte[] pps = new byte[this.PpsSize - naluHeaderLength];

// copy in the actual sps and pps values, again ignoring the 4 byte header

Array.Copy(frame, naluHeaderLength, sps, 0, sps.Length);

Array.Copy(frame, this.SpsSize + naluHeaderLength, pps,0, pps.Length);

// create video format description

List<byte[]> parameterSets = new List<byte[]> { sps, pps };

this.FormatDescription = CMVideoFormatDescription.FromH264ParameterSets(parameterSets, (int)naluHeaderLength, out CMFormatDescriptionError formatDescriptionError);

SendDebugMessage($"Creation of CMVideoFormatDescription: {((formatDescriptionError == CMFormatDescriptionError.None)? $"Successful! (Video Codec = {this.FormatDescription.VideoCodecType}, Dimension = {this.FormatDescription.Dimensions.Height} x {this.FormatDescription.Dimensions.Width}px, Type = {this.FormatDescription.MediaType})" : $"Failed ({formatDescriptionError})")}");

// re-create the decompression session whenever new PPS data was received

this.DecompressionSession = this.CreateDecompressionSession(this.FormatDescription);

// now lets handle the IDR frame that (should) come after the parameter sets

// I say "should" because that's how I expect my H264 stream to work, YMMV

startByte = frame[thirdStartCodeIndex + naluHeaderLength];

naluType = (NALUnitType)(startByte & 0x1F);

SendDebugMessage($"3rd NALU Type: '{NALUnit.GetDescription[naluType]}' ({(int)naluType})");

// bits 1 and 2 are the NRI

nalRefIdc = (startByte & 0x60) >> 5; // 0110 0000, shifted down to the range 0-3

SendDebugMessage($"3rd NRI (NAL Ref Idc): {nalRefIdc}");

}

// type 5 is an IDR frame NALU.

// The SPS and PPS NALUs should always be followed by an IDR (or IFrame) NALU, as far as I know.

if (naluType == NALUnitType.NALU_TYPE_IDR || naluType == NALUnitType.NALU_TYPE_SLICE)

{

// find the offset or where IDR frame NALU begins (after the SPS and PPS NALUs end)

uint offset = (naluType == NALUnitType.NALU_TYPE_SLICE)? 0 : this.SpsSize + this.PpsSize;

uint blockLength = frameSize - offset;

SendDebugMessage($"Block Length (NALU type '{naluType}'): {blockLength}");

var blockData = new byte[blockLength];

Array.Copy(frame, offset, blockData, 0, blockLength);

// write the size of the block length (IDR picture data) at the beginning of the IDR block.

// this means we replace the start code header (0x00 00 00 01) of the IDR NALU with the block size.

// AVCC format requires that you do this.

// This next block is very specific to my application and wasn't in Olivia's example:

// Because my stream is encoded by NVIDIA NVENC, I had to deal with additional 3-byte start codes within my IDR/SLICE frame.

// These start codes must be replaced by 4 byte start codes adding the block length as big endian.

// ======================================================================================================================================================

// find all 3 byte start code indices (0x00 00 01) within the block data (including the first 4 bytes of NALU header)

uint startCodeLength = 3;

List<uint> foundStartCodeIndices = new List<uint>();

for (uint i = 0; i + startCodeLength < blockData.Length; i++) // stop early so that blockData[i + startCodeLength] stays in range

{

if (blockData[i] == 0x00 && blockData[i + 1] == 0x00 && blockData[i + 2] == 0x01)

{

foundStartCodeIndices.Add(i);

byte naluByte = blockData[i + startCodeLength];

var tmpNaluType = (NALUnitType)(naluByte & 0x1F);

SendDebugMessage($"3-Byte Start Code (0x000001) found at index: {i} (NALU type {(int)tmpNaluType} '{NALUnit.GetDescription[tmpNaluType]}')");

}

}

// determine the byte length of each slice

uint totalLength = 0;

List<uint> sliceLengths = new List<uint>();

for (int i = 0; i < foundStartCodeIndices.Count; i++)

{

// for convenience only

bool isLastValue = (i == foundStartCodeIndices.Count-1);

// set start-index to the byte right after the start code

uint startIndex = foundStartCodeIndices[i] + startCodeLength;

// set end-index to the beginning of the next start code or to the end of the frame (exclusive)

uint endIndex = isLastValue ? (uint) blockData.Length : foundStartCodeIndices[i + 1];

// now determine slice length including NALU header

uint sliceLength = (endIndex - startIndex) + naluHeaderLength;

// add length to list

sliceLengths.Add(sliceLength);

// sum up total length of all slices (including NALU header)

totalLength += sliceLength;

}

// Arrange slices like this:

// [4byte slice1 size][slice1 data][4byte slice2 size][slice2 data]...[4byte slice4 size][slice4 data]

// Replace 3-Byte Start Code with 4-Byte start code, then replace the 4-Byte start codes with the length of the following data block (big endian).

// https://mcmap.net/q/138954/-nvidia-nvenc-media-foundation-encoded-h-264-frames-not-decoded-properly-using-videotoolbox

byte[] finalBuffer = new byte[totalLength];

uint destinationIndex = 0;

// create a buffer for each slice and append it to the final block buffer

for (int i = 0; i < sliceLengths.Count; i++)

{

// create byte vector of size of current slice, add additional bytes for NALU start code length

byte[] sliceData = new byte[sliceLengths[i]];

// now copy the data of current slice into the byte vector,

// start reading data after the 3-byte start code

// start writing data after NALU start code,

uint sourceIndex = foundStartCodeIndices[i] + startCodeLength;

long dataLength = sliceLengths[i] - naluHeaderLength;

Array.Copy(blockData, sourceIndex, sliceData, naluHeaderLength, dataLength);

// replace the NALU start code with data length as big endian

byte[] sliceLengthInBytes = BitConverter.GetBytes(sliceLengths[i] - naluHeaderLength);

Array.Reverse(sliceLengthInBytes);

Array.Copy(sliceLengthInBytes, 0, sliceData, 0, naluHeaderLength);

// add the slice data to final buffer

Array.Copy(sliceData, 0, finalBuffer, destinationIndex, sliceData.Length);

destinationIndex += sliceLengths[i];

}

// ======================================================================================================================================================

// from here we are back on track with Olivia's code:

// now create block buffer from final byte[] buffer

CMBlockBufferFlags flags = CMBlockBufferFlags.AssureMemoryNow | CMBlockBufferFlags.AlwaysCopyData;

var finalBlockBuffer = CMBlockBuffer.FromMemoryBlock(finalBuffer, 0, flags, out CMBlockBufferError blockBufferError);

SendDebugMessage($"Creation of Final Block Buffer: {(blockBufferError == CMBlockBufferError.None ? "Successful!" : $"Failed ({blockBufferError})")}");

if (blockBufferError != CMBlockBufferError.None) return;

// now create the sample buffer

nuint[] sampleSizeArray = new nuint[] { totalLength };

CMSampleBuffer sampleBuffer = CMSampleBuffer.CreateReady(finalBlockBuffer, this.FormatDescription, 1, null, sampleSizeArray, out CMSampleBufferError sampleBufferError);

SendDebugMessage($"Creation of Final Sample Buffer: {(sampleBufferError == CMSampleBufferError.None ? "Successful!" : $"Failed ({sampleBufferError})")}");

if (sampleBufferError != CMSampleBufferError.None) return;

// if sample buffer was successfully created -> pass sample to decoder

// set sample attachments

CMSampleBufferAttachmentSettings[] attachments = sampleBuffer.GetSampleAttachments(true);

var attachmentSetting = attachments[0];

attachmentSetting.DisplayImmediately = true;

// enable async decoding

VTDecodeFrameFlags decodeFrameFlags = VTDecodeFrameFlags.EnableAsynchronousDecompression;

// add time stamp

var currentTime = DateTime.Now;

var currentTimePtr = new IntPtr(currentTime.Ticks);

// send the sample buffer to a VTDecompressionSession

var result = DecompressionSession.DecodeFrame(sampleBuffer, decodeFrameFlags, currentTimePtr, out VTDecodeInfoFlags decodeInfoFlags);

if (result == VTStatus.Ok)

{

SendDebugMessage($"Executing DecodeFrame(..): Successful! (Info: {decodeInfoFlags})");

}

else

{

NSError error = new NSError(CFErrorDomain.OSStatus, (int)result);

SendDebugMessage($"Executing DecodeFrame(..): Failed ({(VtStatusEx)result} [0x{(int)result:X8}] - {error}) - Info: {decodeInfoFlags}");

}

}

}
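The start-code handling above is tailored to my stream (fixed search windows of 40 and 30 bytes, start code always at index 0). For streams where that does not hold, the same idea can be sketched more generally: find every 3-byte or 4-byte start code, then write each NAL unit back with a 4-byte big-endian length in front. This is only a sketch under my own assumptions. `AnnexBSketch`, `FindNalUnits` and `ToAvcc` are hypothetical names, and it converts every NAL unit it finds, so SPS and PPS would still have to be separated out as in `Decode` above. It relies on the fact that `00 00 01` cannot appear inside a NAL unit payload, because the encoder inserts emulation prevention bytes.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

public static class AnnexBSketch
{
    // Returns (payload start, payload length) for every NAL unit in an Annex B buffer.
    // Handles both 3-byte (00 00 01) and 4-byte (00 00 00 01) start codes.
    public static List<(int Start, int Length)> FindNalUnits(byte[] data)
    {
        var starts = new List<(int CodeIndex, int PayloadIndex)>();
        int i = 0;
        while (i + 3 <= data.Length)
        {
            if (data[i] == 0x00 && data[i + 1] == 0x00 && data[i + 2] == 0x01)
            {
                // A zero byte directly in front makes this a 4-byte start code.
                int codeIndex = (i > 0 && data[i - 1] == 0x00) ? i - 1 : i;
                starts.Add((codeIndex, i + 3));
                i += 3;
            }
            else
            {
                i++;
            }
        }

        var units = new List<(int Start, int Length)>();
        for (int n = 0; n < starts.Count; n++)
        {
            // A unit ends where the next start code begins, or at the end of the buffer.
            int end = (n + 1 < starts.Count) ? starts[n + 1].CodeIndex : data.Length;
            units.Add((starts[n].PayloadIndex, end - starts[n].PayloadIndex));
        }
        return units;
    }

    // Rewrites the buffer as [4-byte big-endian length][payload] for every NAL unit.
    public static byte[] ToAvcc(byte[] annexB)
    {
        using (var output = new MemoryStream())
        {
            foreach (var (start, length) in FindNalUnits(annexB))
            {
                if (length == 0) continue; // skip empty units between adjacent start codes
                output.WriteByte((byte)(length >> 24));
                output.WriteByte((byte)(length >> 16));
                output.WriteByte((byte)(length >> 8));
                output.WriteByte((byte)length);
                output.Write(annexB, start, length);
            }
            return output.ToArray();
        }
    }
}
```

Writing the length bytes by shifting avoids the `BitConverter.GetBytes` plus `Array.Reverse` step, which only yields big endian on a little-endian machine.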

My function to create the decompression session looks like this:

private VTDecompressionSession CreateDecompressionSession(CMVideoFormatDescription formatDescription)

{

VTDecompressionSession.VTDecompressionOutputCallback callBackRecord = this.DecompressionSessionDecodeFrameCallback;

VTVideoDecoderSpecification decoderSpecification = new VTVideoDecoderSpecification

{

EnableHardwareAcceleratedVideoDecoder = true

};

CVPixelBufferAttributes destinationImageBufferAttributes = new CVPixelBufferAttributes();

try

{

var decompressionSession = VTDecompressionSession.Create(callBackRecord, formatDescription, decoderSpecification, destinationImageBufferAttributes);

SendDebugMessage("Video Decompression Session Creation: Successful!");

return decompressionSession;

}

catch (Exception e)

{

SendDebugMessage($"Video Decompression Session Creation: Failed ({e.Message})");

return null;

}

}

The decompression session callback routine:

private void DecompressionSessionDecodeFrameCallback(

IntPtr sourceFrame,

VTStatus status,

VTDecodeInfoFlags infoFlags,

CVImageBuffer imageBuffer,

CMTime presentationTimeStamp,

CMTime presentationDuration)

{

if (status != VTStatus.Ok)

{

NSError error = new NSError(CFErrorDomain.OSStatus, (int)status);

SendDebugMessage($"Decompression: Failed ({(VtStatusEx)status} [0x{(int)status:X8}] - {error})");

}

else

{

SendDebugMessage("Decompression: Successful!");

try

{

var image = GetImageFromImageBuffer(imageBuffer);

// In my application I do not use a display layer but send the decoded image directly by an event:

ImageSource imgSource = ImageSource.FromStream(() => image.AsPNG().AsStream());

OnImageFrameReady?.Invoke(imgSource);

}

catch (Exception e)

{

SendDebugMessage(e.ToString());

}

}

}
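The `sourceFrame` argument of the callback is not used above. As far as I understand VideoToolbox, it carries the pointer-sized value that was handed to `DecodeFrame`, which in my `Decode` method is the tick count of the current time. A minimal sketch of turning it back into a timestamp, for example to measure decode latency, could look like this. `FrameTagSketch` and its methods are hypothetical names, and the sketch assumes a 64-bit process:

```csharp
using System;

public static class FrameTagSketch
{
    // Packs a timestamp into the pointer-sized value handed to DecodeFrame.
    // Assumes a 64-bit process; on 32-bit, new IntPtr(long) throws an
    // OverflowException for values that do not fit into 32 bits.
    public static IntPtr ToTag(DateTime timestamp) => new IntPtr(timestamp.Ticks);

    // Recovers the timestamp from the sourceFrame argument of the callback.
    public static DateTime FromTag(IntPtr sourceFrame) => new DateTime(sourceFrame.ToInt64());

    // Time elapsed between submitting the frame and the given moment.
    public static TimeSpan Latency(IntPtr sourceFrame, DateTime now) => now - FromTag(sourceFrame);
}
```

Inside the callback this would be used as `FrameTagSketch.Latency(sourceFrame, DateTime.Now)`.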

I use this function to convert the CVImageBuffer to a UIImage. It is also based on one of Olivia's posts mentioned above (how to convert a CVImageBufferRef to UIImage):

private UIImage GetImageFromImageBuffer(CVImageBuffer imageBuffer)

{

if (!(imageBuffer is CVPixelBuffer pixelBuffer)) return null;

var ciImage = CIImage.FromImageBuffer(pixelBuffer);

var temporaryContext = new CIContext();

var rect = CGRect.FromLTRB(0, 0, pixelBuffer.Width, pixelBuffer.Height);

CGImage cgImage = temporaryContext.CreateCGImage(ciImage, rect);

if (cgImage == null) return null;

var uiImage = UIImage.FromImage(cgImage);

cgImage.Dispose();

return uiImage;

}

Last but not least, my tiny little function for debug output. Feel free to extend it as needed for your purpose ;-)

private void SendDebugMessage(string msg)

{

Debug.WriteLine($"VideoDecoder (iOS) - {msg}");

}

Finally, let's have a look at the namespaces used for the code above:

using System;

using System.Collections.Generic;

using System.Diagnostics;

using System.IO;

using System.Net;

using AvcLibrary;

using CoreFoundation;

using CoreGraphics;

using CoreImage;

using CoreMedia;

using CoreVideo;

using Foundation;

using UIKit;

using VideoToolbox;

using Xamarin.Forms;