I am implementing minimax in Python 2.7.11 in a basic game of Pacman. Pacman is the maximizing agent, and one or more ghosts (depending on the test layout) is/are the minimizing agent(s).

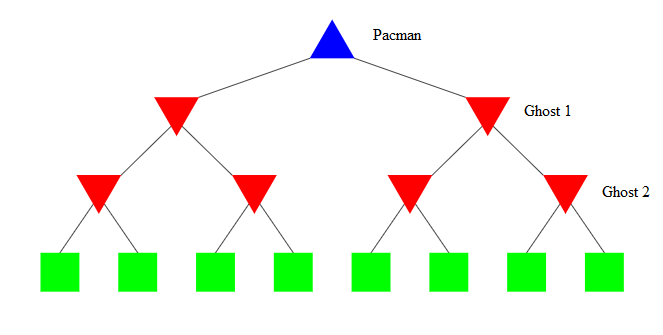

I must implement minimax so that there can be potentially more than one minimizing agent, and so that it can create a tree of n plies (depth). Ply 1, for example, would be each ghost taking a turn minimizing the terminal state utilities of their possible moves, as well as pacman taking his turn maximizing what the ghosts have already minimized. Graphically, ply 1 looks like this:

If we had the following arbitrary utilities assigned to the green terminal states (left to right):

-10, 5, 8, 4, -4, 20, -7, 17

Pacman should return -4 and then move in that direction, creating an entirely new minimax tree based on that decision.

First, a list of variables and functions needed for my implementation to make sense:

# Stores everything about the current state of the game

gameState

# A globally defined depth that varies depending on the test cases.

# It could be as little as 1 or arbitrarily large

self.depth

# A locally defined depth that keeps track of how many plies deep I've gone in the tree

self.myDepth

# A function that assigns a numeric value as a utility for the current state

# How this is calculated is moot

self.evaluationFunction(gameState)

# Returns a list of legal actions for an agent

# agentIndex = 0 means Pacman, ghosts are >= 1

gameState.getLegalActions(agentIndex)

# Returns the successor game state after an agent takes an action

gameState.generateSuccessor(agentIndex, action)

# Returns the total number of agents in the game

gameState.getNumAgents()

# Returns whether or not the game state is a winning (terminal) state

gameState.isWin()

# Returns whether or not the game state is a losing (terminal) state

gameState.isLose()

This is my implementation:

"""

getAction takes a gameState and returns the optimal move for pacman,

assuming that the ghosts are optimal at minimizing his possibilities

"""

def getAction(self, gameState):

self.myDepth = 0

def miniMax(gameState):

if gameState.isWin() or gameState.isLose() or self.myDepth == self.depth:

return self.evaluationFunction(gameState)

numAgents = gameState.getNumAgents()

for i in range(0, numAgents, 1):

legalMoves = gameState.getLegalActions(i)

successors = [gameState.generateSuccessor(j, legalMoves[j]) for j, move

in enumerate(legalMoves)]

for successor in successors:

if i == 0:

return maxValue(successor, i)

else:

return minValue(successor, i)

def minValue(gameState, agentIndex):

minUtility = float('inf')

legalMoves = gameState.getLegalActions(agentIndex)

succesors = [gameState.generateSuccessor(i, legalMoves[i]) for i, move

in enumerate(legalMoves)]

for successor in successors:

minUtility = min(minUtility, miniMax(successor))

return minUtility

def maxValue(gameState, agentIndex)

self.myDepth += 1

maxUtility = float('-inf')

legalMoves = gameState.getLegalActions(agentIndex)

successors = [gameState.generateSuccessor(i, legalMoves[i]) for i, move

in enumerate(legalMoves)]

for successor in successors:

maxUtility = max(maxUtility, miniMax(successor))

return maxUtility

return miniMax(gameState)

Does anyone have any ideas why my code is doing this? I'm hoping there are a few Minimax/Artificial Intelligence experts out there that can identify my issues. Thanks in advance.

UPDATE: by instantiating my self.myDepth value as 0 instead of 1, I have irradicated the exception throwing issue. However, the overall incorrectness of my implementation still remains.

getScore()defined anywhere in your code. Where is it supposed to be? – Hanseaticself.evaluationFunction(gameState)is being called before terminal states or maximum depth have been achieved. – Allegroif i == 0line inminiMaxseems very sketchy to me. That condition is always going to beTrueon the first pass of the outer loop, so the following line willreturnand the other agents will never be considered. Is the indentation in your code correct? – Micadef miniMax(gameState)says: "1) If state is win/lose/terminal then return utility. 2) If next agent is pacman then return maxValue(gameState). 3) If next agent is ghost then return minValue(gameState)". I saw no other way to keep track of the agents than to usefor i in range(0, numAgents, 1):– Allegroreturnonce per run of the function, so having an unconditionalreturninside a loop makes the later iterations never occur. Perhaps the next agent to act should be part of thegameState? – Micaself.myDepthto0instead of1, which eliminates the exception throwing issue at least. – Allegroself.myDepth == self.depthWhat is going on here? Is this the max depth check? Where you are incrementing/decrementing self.depth? – Labouriteself.depthis globally defined, and varies based on the test cases I use to test if my implementation of minimax is generalized enough that it can be passed any amount of agents with any amount of plies.self.myDepthis a local variable I instantiate that gets incremented within thedef maxValue(gameAgent, agentIndex, depth)function, sincemaxValuecan only be called once per depth (for Pacman). The lineself.myDepth == self.depthis checking to see if I have reached the maximum depth defined in my test case. – Allegroself?selfis usually what you use for the self reference in class, but it doesn't look like you're doing that here. Is getAction a part of a class? 2)depth += 1in themaxValueisn't doing anything, you're updating a function parameter and that's not going to be reflected elsewhere – Labouriteselfinself.depthandself.evaluateFunction(gameState)are part of a class that extends a long hierarchy of agents and objects. They are in the same class asdef getAction(gameState), but they are correct. The program is written so that my implementation of Minimax is all that matters in my test cases succeeding. 2) You are right, I meant to change that part of the code, as I caught it earlier. – Allegro