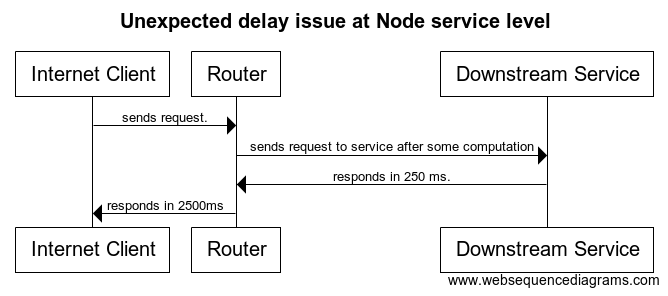

Problem statement-

We are using a router between internet clients and downstream services. The router (a service) is written in Node.js. Its responsibility is to pass each internet client's request to the corresponding downstream service and return the response to the client. We are seeing some delay at the router level.

Library used for HTTP proxying-

https://github.com/nodejitsu/node-http-proxy

node-http-proxy Example-

To explain with an example: we see the issue only at the 99th percentile.

We ran a load/performance test with a concurrency of 100.

According to the results, response times seen by the internet client look great up to the 95th percentile.

At the 99th percentile, however, the downstream service still responds in the expected time (~250 ms), but the router takes about 10 times longer than expected (~2500 ms).
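To make the percentile numbers concrete, here is a minimal nearest-rank percentile helper. The 100 samples are hypothetical, not our measured data: 98 requests at 250 ms plus two slow outliers are enough to leave p95 untouched while p99 jumps to 2500 ms, which is the shape of the result described above.

```javascript
// Nearest-rank percentile over latency samples (milliseconds).
// Sorting a copy leaves the caller's array untouched.
function percentile(samples, p) {
  if (samples.length === 0) throw new Error('no samples');
  const sorted = [...samples].sort((a, b) => a - b);
  // Smallest value with at least p% of the samples at or below it.
  const rank = Math.ceil((p * sorted.length) / 100);
  return sorted[Math.max(rank, 1) - 1];
}

// Hypothetical load-test result: 98 fast requests and two slow outliers.
const latencies = Array.from({ length: 98 }, () => 250).concat([2500, 2600]);

console.log('p95:', percentile(latencies, 95)); // p95: 250
console.log('p99:', percentile(latencies, 99)); // p99: 2500
```

In other words, only 1 or 2 requests out of every 100 need to be slow for the p99 figure to look ten times worse.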

Service information-

Both the router and the downstream service are in the same region and the same subnet, so we do not think this delay is caused by the network.

Possible causes of this delay-

- Some threads are blocked at the Node service level, which is why the router cannot listen for downstream service responses.

- DNS lookups are taking longer.

- The Node-level thread count is too low, which is why the router cannot listen for all incoming responses from the downstream service.
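One way to test the first hypothesis is to measure event-loop delay inside the router. In Node, JavaScript runs on a single thread and socket I/O is driven by the event loop rather than the libuv threadpool (the threadpool serves `dns.lookup`, file system calls, and some crypto and zlib work). Anything synchronous, such as parsing a large body or synchronous logging, therefore delays every in-flight response. The sketch below uses `perf_hooks.monitorEventLoopDelay` (Node 11.10 or later); the 20 ms resolution and 10 s reporting window are arbitrary choices, and `loopDelayReport` is a hypothetical helper.

```javascript
const { monitorEventLoopDelay } = require('perf_hooks');

// Samples how late the event loop runs; histogram values are in nanoseconds.
const loopDelay = monitorEventLoopDelay({ resolution: 20 });
loopDelay.enable();

// Hypothetical helper: converts the histogram's key figures to milliseconds.
function loopDelayReport(h) {
  const toMs = (ns) => Math.round(ns / 1e4) / 100; // ns -> ms, 2 decimals
  return {
    meanMs: toMs(h.mean),
    p99Ms: toMs(h.percentile(99)),
    maxMs: toMs(h.max),
  };
}

// Report every 10 s during the load test, then start a fresh window.
// unref() keeps this timer from holding the process open on its own.
setInterval(() => {
  console.log('event-loop delay', loopDelayReport(loopDelay));
  loopDelay.reset();
}, 10000).unref();
```

If the p99 or max delay during the load test is in the same range as the extra router latency, the time is probably being lost inside the router process rather than on the wire.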

To analyse this-

We tweaked the configurations below-

keepAlive, maxSockets, maxFreeSockets, keepAliveMsecs, and log level. Please check the Node options for the http/https agent: http agent configuration
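On the DNS hypothesis: sockets created by the agent resolve hostnames with `dns.lookup`, which calls `getaddrinfo` on the libuv threadpool (4 threads by default, hence the `UV_THREADPOOL_SIZE` setting), and Node itself does not cache the results. With keepAlive this only happens when the agent opens a new socket, but slow lookups can then queue behind each other. An agent accepts a `lookup` function, because agent options are passed through to the socket connect options. `createCachedLookup` below is a hypothetical helper, not part of Node or http-proxy, and the 30 s TTL is an assumption that should stay at or below the real DNS record's TTL.

```javascript
const dns = require('dns');
const https = require('https');

// Hypothetical helper: wraps a lookup function (dns.lookup by default) with a
// small TTL cache keyed by hostname and lookup options. Errors are not cached.
function createCachedLookup(ttlMs, resolve = dns.lookup) {
  const cache = new Map();
  return function cachedLookup(hostname, options, callback) {
    // Normalise the optional `options` argument the way dns.lookup accepts it.
    if (typeof options === 'function') {
      callback = options;
      options = {};
    } else if (typeof options === 'number') {
      options = { family: options };
    } else if (options == null) {
      options = {};
    }
    const key = `${hostname}|${JSON.stringify(options)}`;
    const hit = cache.get(key);
    if (hit && hit.expires > Date.now()) {
      // Reply asynchronously from the cache, as dns.lookup itself would.
      process.nextTick(callback, null, ...hit.result);
      return;
    }
    // Pass through both result shapes: (address, family) or (addresses) with all: true.
    resolve(hostname, options, (err, ...result) => {
      if (!err) cache.set(key, { expires: Date.now() + ttlMs, result });
      callback(err, ...result);
    });
  };
}

// Hypothetical 30 s TTL; keep it at or below the real DNS record's TTL.
const agent = new https.Agent({
  keepAlive: true,
  maxSockets: 25,
  lookup: createCachedLookup(30000),
});
```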

Code snippet of the Node service-

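```javascript
// UV_THREADPOOL_SIZE is read when libuv's threadpool is first used, so set it
// before anything else runs (or, better, in the environment that starts the process).
process.env.UV_THREADPOOL_SIZE = '4';

var http = require('http');
var https = require('https');
var domain = require('domain'); // note: the domain module is deprecated in Node
var httpProxy = require('http-proxy');
var nconf = require('nconf'); // configured elsewhere (env/file)

// Keep-alive agent shared by all proxied requests to the downstream service.
// nconf.get() takes a key name, so the intended values are written out here.
var agent = new https.Agent({
  keepAlive: true,
  keepAliveMsecs: 5000,
  maxSockets: 25,
  maxFreeSockets: 10
});

var proxy = httpProxy.createServer({ agent: agent });

var requestTimeout = parseInt(nconf.get('REQUEST_TIMEOUT'), 10);
```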
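Another thing worth checking is queueing inside the agent itself. `maxSockets` applies per origin, so if all traffic goes to a single downstream host, 100 concurrent clients with `maxSockets: 25` leave up to 75 requests waiting in `agent.requests` for a free socket. That wait happens in the router before the request reaches the downstream service, so it would not show up in the downstream service's own timings. Similarly, with `maxFreeSockets: 10`, up to 15 of those 25 sockets are closed once a burst ends and must be reopened (TCP and TLS handshakes) on the next one. The sketch below samples the agent's documented `sockets`, `freeSockets`, and `requests` objects during a load test; `agentPoolStats` is a hypothetical helper, and this is a way to confirm or rule out the hypothesis, not a diagnosis.

```javascript
const https = require('https');

// Hypothetical helper: totals sockets in use, idle keep-alive sockets, and
// requests still waiting for a socket. Each agent property is an object keyed
// by origin name whose values are arrays.
function agentPoolStats(agent) {
  const count = (byOrigin) =>
    Object.values(byOrigin).reduce((n, list) => n + list.length, 0);
  return {
    active: count(agent.sockets),
    idle: count(agent.freeSockets),
    queued: count(agent.requests),
  };
}

// Same values the snippet above intends.
const agent = new https.Agent({
  keepAlive: true,
  keepAliveMsecs: 5000,
  maxSockets: 25,
  maxFreeSockets: 10,
});

// Sample the pool once per second during the load test (unref'd timer).
setInterval(() => {
  const stats = agentPoolStats(agent);
  if (stats.queued > 0) console.log('requests waiting for a socket', stats);
}, 1000).unref();
```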

Questions-

- I am new to Node services. It would be great if you could help me tune the configuration above with the right values. If I have missed any configuration, please let me know.

- Is there any way to intercept network traffic on the router machine that would help me analyse the 10x delay in router response time mentioned above?

- If you know of any (network-level) profiling tool that can help me dive deeper, please share it.
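Packet capture (for example tcpdump or Wireshark) shows what is on the wire, but the router can also time each hop itself. The sketch below is a minimal forwarding handler written with Node's built-in `http` module instead of http-proxy, so it runs without dependencies; the target address, port, and agent settings are hypothetical. For every request it logs, relative to when the router received it, when the request got a socket from the agent, when the downstream response headers arrived, and when the response to the client finished. A large `socketWait` points to agent queueing; a large gap between `headers` and `total` points to body streaming or work inside the router.

```javascript
const http = require('http');
const { performance } = require('perf_hooks');

// Hypothetical downstream address and pool settings; adjust for the real service.
const TARGET = { host: '127.0.0.1', port: 8081 };
const upstreamAgent = new http.Agent({ keepAlive: true, maxSockets: 25 });

// Forwards one request and logs where the time went.
function handle(req, res) {
  const t0 = performance.now();
  let tSocket = NaN; // request got a socket from the agent
  let tHeaders = NaN; // downstream response headers arrived

  const upstream = http.request(
    {
      host: TARGET.host,
      port: TARGET.port,
      agent: upstreamAgent,
      method: req.method,
      path: req.url,
      headers: req.headers,
    },
    (upRes) => {
      tHeaders = performance.now();
      res.writeHead(upRes.statusCode, upRes.headers);
      upRes.pipe(res);
    }
  );

  upstream.on('socket', () => {
    tSocket = performance.now();
  });
  upstream.on('error', () => {
    if (!res.headersSent) res.writeHead(502);
    res.end();
  });

  res.on('finish', () => {
    const ms = (t) => (t - t0).toFixed(1);
    console.log(
      `${req.method} ${req.url} socketWait=${ms(tSocket)}ms ` +
        `headers=${ms(tHeaders)}ms total=${ms(performance.now())}ms`
    );
  });

  req.pipe(upstream);
}

const router = http.createServer(handle);
// router.listen(8080); // hypothetical port
```

With http-proxy itself, the same timestamps can be taken in handlers for its `proxyReq` and `proxyRes` events.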

[Update 1]

Found one interesting link: war-story.

If I have missed any required information, please ask and I will add it here.