In this question I asked how to implement a chain of blurs in one single step.

Then I found out from the gaussian blur page of Wikipedia that:

Applying multiple, successive gaussian blurs to an image has the same effect as applying a single, larger gaussian blur, whose radius is the square root of the sum of the squares of the blur radii that were actually applied. For example, applying successive gaussian blurs with radii of 6 and 8 gives the same results as applying a single gaussian blur of radius 10, since sqrt {6^{2}+8^{2}}=10.

So I thought that blur and singleBlur were the same in the following code:

cv::Mat firstLevel;

float sigma1, sigma2;

//intialize firstLevel, sigma1 and sigma2

cv::Mat blur = gaussianBlur(firstLevel, sigma1);

blur = gaussianBlur(blur, sigma2);

float singleSigma = std::sqrt(std::pow(sigma1,2)+std::pow(sigma2,2));

cv::Mat singleBlur = gaussianBlur(firstLevel, singleSigma);

cv::Mat diff = blur != singleBLur;

// Equal if no elements disagree

assert( cv::countNonZero(diff) == 0);

But this assert fails (and actually, for example, the first row of blur is different from the first one of singleBlur).

Why?

UPDATE:

After different comments asking for more information, I'll update the answer.

What I'm trying to do is to parallelize this code. In particular, I'm focusing now on computing all the blurs at all levels in advance. The serial code (which works correctly) is the following:

vector<Mat> blurs ((par.numberOfScales+3)*levels, Mat());

cv::Mat octaveLayer = firstLevel;

int scaleCycles = par.numberOfScales+2;

//compute blurs at all layers (not parallelizable)

for(int i=0; i<levels; i++){

blurs[i*scaleCycles+1] = octaveLayer.clone();

for (int j = 1; j < scaleCycles; j++){

float sigma = par.sigmas[j]* sqrt(sigmaStep * sigmaStep - 1.0f);

blurs[j+1+i*scaleCycles] = gaussianBlur(blurs[j+i*scaleCycles], sigma);

if(j == par.numberOfScales)

octaveLayer = halfImage(blurs[j+1+i*scaleCycles]);

}

}

Where:

Mat halfImage(const Mat &input)

{

Mat n(input.rows/2, input.cols/2, input.type());

float *out = n.ptr<float>(0);

for (int r = 0, ri = 0; r < n.rows; r++, ri += 2)

for (int c = 0, ci = 0; c < n.cols; c++, ci += 2)

*out++ = input.at<float>(ri,ci);

return n;

}

Mat gaussianBlur(const Mat input, const float sigma)

{

Mat ret(input.rows, input.cols, input.type());

int size = (int)(2.0 * 3.0 * sigma + 1.0); if (size % 2 == 0) size++;

GaussianBlur(input, ret, Size(size, size), sigma, sigma, BORDER_REPLICATE);

return ret;

}

I'm sorry for the horrible indexes above, but I tried to respect the original code system (which is horrible, like starting counting from 1 instead of 0). The code above has scaleCycles=5 and levels=6, so 30 blurs are generated in total.

This is the "single blur" version, where first I compute the sigmas for each blur that has to be computed (following Wikipedia's formula) and then I apply the blur (notice that this is still serial and not parallelizable):

vector<Mat> singleBlurs ((par.numberOfScales+3)*levels, Mat());

vector<float> singleSigmas(scaleCycles);

float acc = 0;

for (int j = 1; j < scaleCycles; j++){

float sigma = par.sigmas[j]* sqrt(sigmaStep * sigmaStep - 1.0f);

acc += pow(sigma, 2);

singleSigmas[j] = sqrt(acc);

}

octaveLayer = firstLevel;

for(int i=0; i<levels; i++){

singleBlurs[i*scaleCycles+1] = octaveLayer.clone();

for (int j = 1; j < scaleCycles; j++){

float sigma = singleSigmas[j];

std::cout<<"j="<<j<<" sigma="<<sigma<<std::endl;

singleBlurs[j+1+i*scaleCycles] = gaussianBlur(singleBlurs[j+i*scaleCycles], sigma);

if(j == par.numberOfScales)

octaveLayer = halfImage(singleBlurs[j+1+i*scaleCycles]);

}

}

Of course the code above generates 30 blurs also with the same parameters of the previous version.

And then this is the code to see the difference between each signgleBlurs and blurs:

assert(blurs.size() == singleBlurs.size());

vector<Mat> blurDiffs(blurs.size());

for(int i=1; i<levels*scaleCycles; i++){

cv::Mat diff;

absdiff(blurs[i], singleBlurs[i], diff);

std::cout<<"i="<<i<<"diff rows="<<diff.rows<<" cols="<<diff.cols<<std::endl;

blurDiffs[i] = diff;

std::cout<<"blurs rows="<<blurs[i].rows<<" cols="<<blurs[i].cols<<std::endl;

std::cout<<"singleBlurs rows="<<singleBlurs[i].rows<<" cols="<<singleBlurs[i].cols<<std::endl;

std::cout<<"blurDiffs rows="<<blurDiffs[i].rows<<" cols="<<blurDiffs[i].cols<<std::endl;

namedWindow( "blueDiffs["+std::to_string(i)+"]", WINDOW_AUTOSIZE );// Create a window for display.

//imshow( "blueDiffs["+std::to_string(i)+"]", blurDiffs[i] ); // Show our image inside it.

//waitKey(0); // Wait for a keystroke in the window

Mat imageF_8UC3;

std::cout<<"converting..."<<std::endl;

blurDiffs[i].convertTo(imageF_8UC3, CV_8U, 255);

std::cout<<"converted"<<std::endl;

imwrite( "blurDiffs_"+std::to_string(i)+".jpg", imageF_8UC3);

}

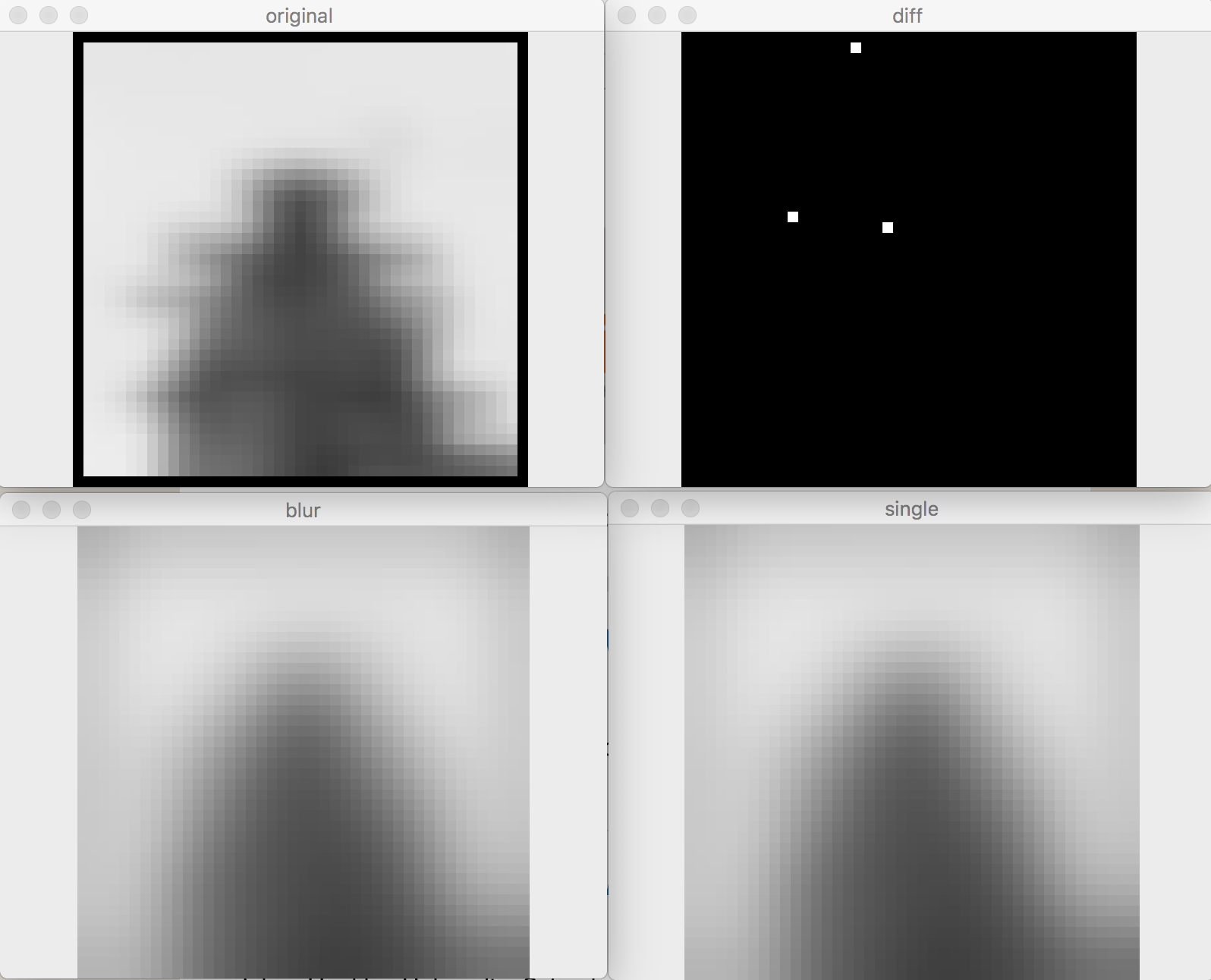

Now, what I've seen is that blurDiffs_1.jpg and blurDiffs_2.jpg are black, but suddendly from blurDiffs_3.jpg until the blurDiffs_29.jpg becomes whiter and whiter. For some reason, blurDiffs_30.jpg is almost completely black.

The first (correct) version generates 1761 descriptors. The second (uncorrect) version generates >2.3k descriptors.

I can't post the blurDiffs matrices because (especially the first ones) are very big and post's space is limited. I'll post some samples. I'll not post blurDiffs_1.jpg and blurDiffs_2.jpg because they're totally blacks. Notice that because of halfImage the images become smaller and smaller (as expected).

blurDiffs_3.jpg:

blurDiffs_6.jpg:

blurDiffs_15.jpg:

blurDiffs_29.jpg:

How the image is read:

Mat tmp = imread(argv[1]);

Mat image(tmp.rows, tmp.cols, CV_32FC1, Scalar(0));

float *out = image.ptr<float>(0);

unsigned char *in = tmp.ptr<unsigned char>(0);

for (size_t i=tmp.rows*tmp.cols; i > 0; i--)

{

*out = (float(in[0]) + in[1] + in[2])/3.0f;

out++;

in+=3;

}

Someone here suggested to divide diff by 255 to see the real difference, but I don't understand why of I understood him correctly.

If you need any more details, please let me know.

cv::Matas used in the code? If it's a floating point type then the probability of the diff being precisely zero is very small due to the way floating point values are represented inc++. – Tonnesondiff(a bunch of255with some occasional0) and the original code (andgaussianBlur) – Slimy