I'm performing POS tagging with the Stanford POS Tagger. The tagger only returns one possible tagging for the input sentence. For instance, when provided with the input sentence "The clown weeps.", the POS tagger produces the (erroneous) "The_DT clown_NN weeps_NNS ._.".

However, my application will try to parse the result, and may reject a POS tagging because there is no way to parse it. Hence, in this example, it would reject "The_DT clown_NN weeps_NNS ._." but would accept "The_DT clown_NN weeps_VBZ ._." which I assume is a lower-confidence tagging for the parser.

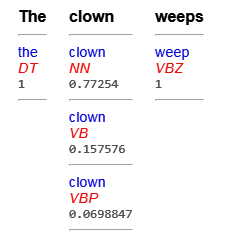

I would therefore like the POS tagger to provide multiple hypotheses for the tagging of each word, annotated by some kind of confidence value. In this way, my application could choose the POS tagging with highest confidence that achieves a valid parsing for its purposes.

I have found no way to ask the Stanford POS Tagger to produce multiple (n-best) tagging hypotheses for each word (or even for the whole sentence). Is there a way to do this? (Alternatively, I am also OK with using another POS tagger with comparable performance that would have support for this.)