I'm trying to get 3D coordinates with a stereo camera.

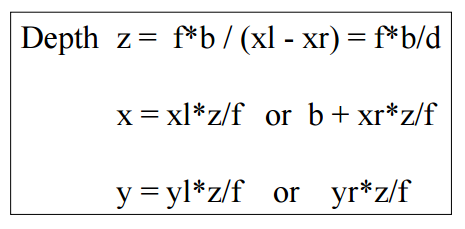

The first method is to calculate directly using this formula.

The second method is to use reprojectImageTo3D in opencv.

But I do not know the principle of this method.

The result is not in millimeters, so it's hard to match the size.

Please tell me the difference between the two methods.

(The first of these codes is to convert Point Feature to 3D coordinates after matching.) (The second code is to calculate the disparity of the entire stereo image using SGBM and calculate the 3d coordinates of the point feature using reprojectImageTo3D.)

*First method

cv::Mat depth(m_input.m_leftImg.size(), CV_32FC3, cv::Scalar::all(0));

int size = feOutput.m_leftKp.size();

for (int i = 0; i < size; i++)

{

cv::Point pt = cv::Point((int)(feOutput.m_leftKp.at(i).pt.x + 0.5f), (int)(feOutput.m_leftKp.at(i).pt.y + 0.5f));

depth.at<cv::Vec3f>(pt)[2] = fX * baseLine / (feOutput.m_leftKp.at(i).pt.x - feOutput.m_rightKp.at(i).pt.x); // Z

depth.at<cv::Vec3f>(pt)[0] = (feOutput.m_leftKp.at(i).pt.x - cX) * depth.at<cv::Vec3f>(pt)[2] / fX; // X

depth.at<cv::Vec3f>(pt)[1] = (feOutput.m_leftKp.at(i).pt.y - cY) * depth.at<cv::Vec3f>(pt)[2] / fY; // Y

}

depth /= 1000.f; //milli-meter to meter

*Second method

cv::Mat disparity16S(m_input.m_leftImg.size(), CV_16S);

sgbm->compute(m_input.m_leftImg, m_input.m_rightImg, disparity16S);

cv::Mat xyz;

cv::Matx44d Q = cv::Matx44d(

1.0, 0.0, 0.0, -cX,

0.0, 1.0, 0.0, -cY,

0.0, 0.0, 0.0, fX,

0.0, 0.0, -1.0 / baseLine, 0/*(CX - CX) / baseLine*/

);

cv::reprojectImageTo3D(disparity16S, xyz, Q, true);

cv::Mat pointXYZ(xyz.size(), xyz.type(), cv::Scalar::all(0));

for (int i = 0; i < size; i++)

{

cv::Point pt = cv::Point((int)(feOutput.m_leftKp.at(i).pt.x + 0.5f), (int)(feOutput.m_leftKp.at(i).pt.y + 0.5f));

pointXYZ.at<cv::Vec3f>(pt) = xyz.at<cv::Vec3f>(pt) / 1000.f;

}

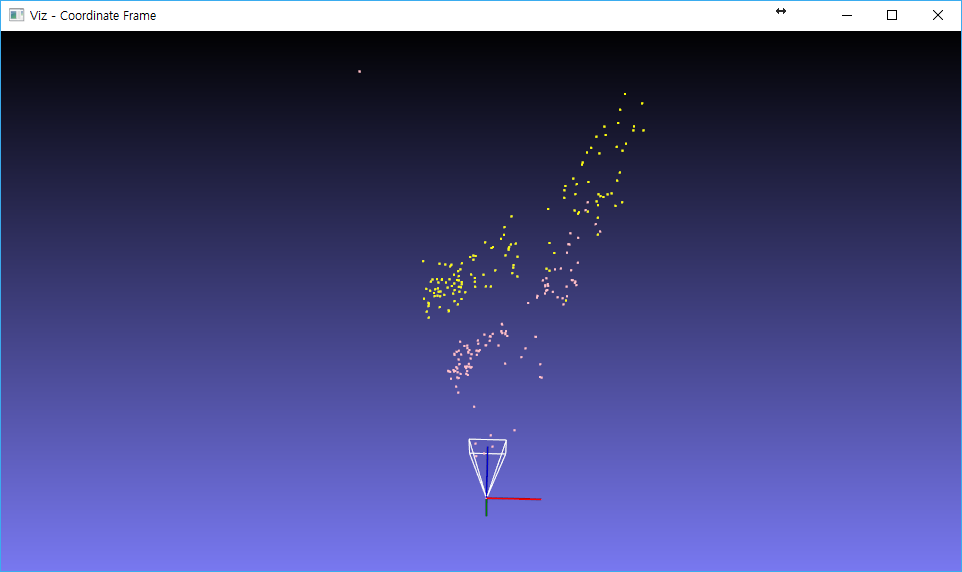

Add+ Pink is the size of the reprojectImageTo3D method scaled to 1/100, and yellow is the size of 1/1000(mm 2 meter) in the first method. If the two methods are the same, why is there a difference in scale?