I'm trying to use OpenCV to detect and extract ORB features from images.

However, the images I'm getting are not normalized (different sizes, different resolutions, etc.).

I was wondering if I need to normalize my images before extracting ORB features to be able to match them across images?
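For reference, a minimal sketch of what such a normalization step could look like: every image is resized so that its longer side has the same length before features are extracted. The helper names and the `target_long_side` value of 800 are assumptions chosen for illustration, and whether this step is needed at all is exactly the open question.

```python
def normalized_size(width, height, target_long_side=800):
    """Return (new_width, new_height) so that the longer side equals target_long_side.

    target_long_side=800 is an arbitrary illustrative value.
    """
    scale = target_long_side / max(width, height)
    return max(1, round(width * scale)), max(1, round(height * scale))


def normalize_image(image, target_long_side=800):
    """Resize a NumPy image (as returned by cv2.imread) to a common long side."""
    import cv2  # imported here so normalized_size() is usable without OpenCV

    height, width = image.shape[:2]
    new_size = normalized_size(width, height, target_long_side)
    # INTER_AREA is the usual choice when shrinking, INTER_LINEAR when enlarging.
    interpolation = cv2.INTER_AREA if new_size[0] < width else cv2.INTER_LINEAR
    return cv2.resize(image, new_size, interpolation=interpolation)
```

Note that this only equalizes the pixel dimensions of the images. It does not make an object appear at the same scale in every image.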

I know the feature detection is scale invariant, but I'm not sure what that means for image resolution. For example, two images of the same size, one with the object close and the other with it far away, should still produce a match even though the object appears at a different scale in each. But what if the images themselves don't have the same size?

Should I adapt ORB's patchSize to the image size? For example, if I use a patchSize of 20px for an 800px image, should I use a patchSize of 10px for a 400px image?
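The proportional scaling described above could be sketched as follows. The reference values (800px width, 20px patch) come from the example in the question. The helper names and the lower bound of 2 are assumptions for illustration, and `edgeThreshold` is set to the same value because the OpenCV documentation says it should roughly match `patchSize`. This only shows how the idea would be wired up, not that it improves matching.

```python
def scaled_patch_size(image_width, ref_width=800, ref_patch_size=20, min_patch_size=2):
    """Scale the patch size linearly with image width (hypothetical helper)."""
    scaled = round(ref_patch_size * image_width / ref_width)
    # Keep a small floor so very small images do not yield a degenerate patch.
    return max(min_patch_size, scaled)


def create_scaled_orb(image_width, nfeatures=500):
    """Create an ORB detector whose patchSize follows the image width."""
    import cv2  # imported here so scaled_patch_size() is usable without OpenCV

    patch = scaled_patch_size(image_width)
    return cv2.ORB_create(nfeatures=nfeatures, patchSize=patch, edgeThreshold=patch)
```

With the defaults, `scaled_patch_size(800)` gives 20 and `scaled_patch_size(400)` gives 10, matching the example in the question.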

Thank you.

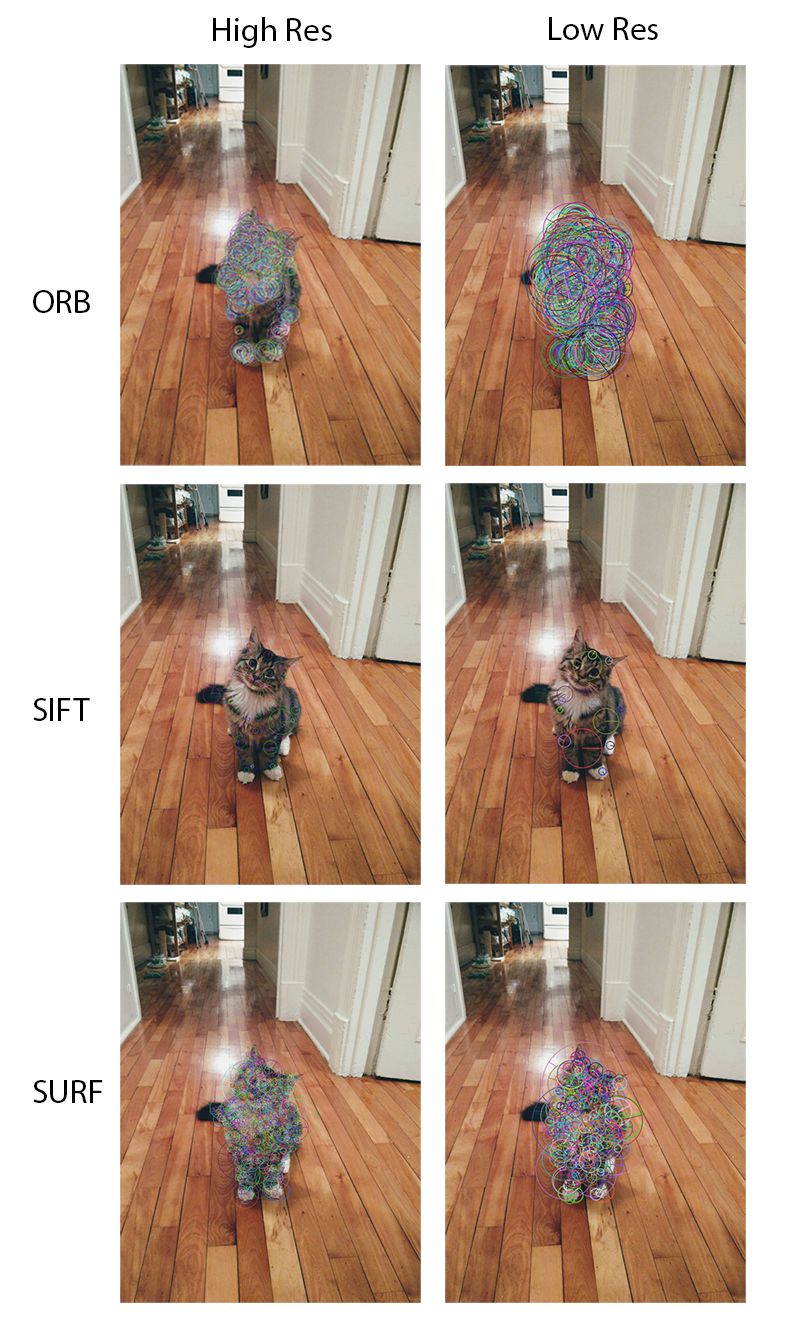

Update: I tested different algorithms (ORB, SURF and SIFT) with high and low resolution images to see how they behave. In this image, objects are the same size, but image resolution is different:

We can see that SIFT is pretty stable, although it finds few features. SURF is also pretty stable in terms of keypoints and feature scale. So my guess is that feature matching between a low-res and a high-res image would work with SIFT and SURF, but ORB produces much larger features in the low-res image, so its descriptors won't match those from the high-res image.

(The same parameters were used for the high-res and low-res feature extraction.)
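A comparison of this kind could be reproduced roughly as below. This is a sketch, not the script used for the image above. It assumes the low-res image is simply a downscaled copy of the high-res one (the `scale` of 0.5 is arbitrary), uses ORB with default parameters for both, and reports the mean keypoint size as a fraction of the image width so the two resolutions can be compared directly. The function names are hypothetical.

```python
from statistics import mean


def summarize_keypoints(keypoints):
    """Return (count, mean size) for objects with a .size attribute, such as cv2.KeyPoint."""
    sizes = [kp.size for kp in keypoints]
    return len(sizes), (mean(sizes) if sizes else 0.0)


def compare_resolutions(image_path, scale=0.5):
    """Detect ORB keypoints on an image and on a downscaled copy of it."""
    import cv2  # imported here so summarize_keypoints() is usable without OpenCV

    high = cv2.imread(image_path, cv2.IMREAD_GRAYSCALE)
    if high is None:
        raise FileNotFoundError(image_path)
    low = cv2.resize(high, None, fx=scale, fy=scale, interpolation=cv2.INTER_AREA)

    orb = cv2.ORB_create()  # same (default) parameters for both resolutions
    results = {}
    for name, img in (("high", high), ("low", low)):
        count, mean_size = summarize_keypoints(orb.detect(img, None))
        # Express the size relative to the image width so it is resolution independent.
        results[name] = (count, mean_size / img.shape[1])
    return results
```

If the relative keypoint size differs a lot between `"high"` and `"low"`, the keypoints cover different portions of the scene at each resolution, which is the effect described above for ORB.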

So my guess is that it would be better to use SIFT or SURF if we want to match images with different resolutions.