I have a Cloud Function that is triggered by a Pub/Sub topic.

I want to rate limit my Cloud Function, so I set its max instances to 5. In my case, far more messages will be published than there are function instances to handle them, and I want to cap the number of instances running at once.

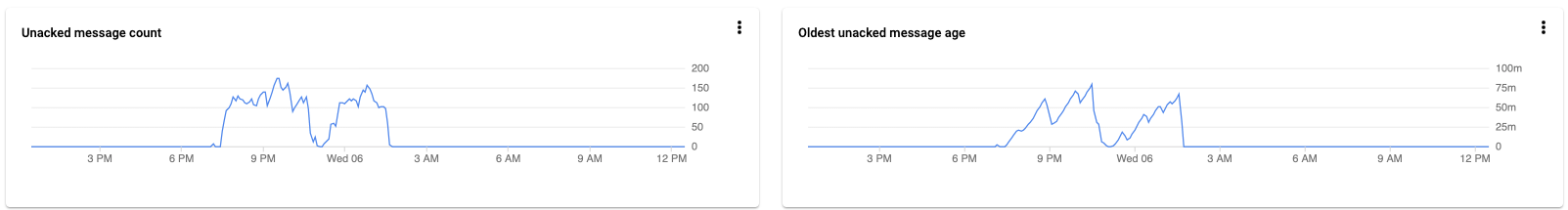

I expected this to behave like Kafka or a queue: messages accumulate in the topic, and the Cloud Function slowly consumes them until the topic is empty.
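To make the expected behavior concrete, here is a minimal sketch of the queue semantics I have in mind, simulated with the Python standard library only. It does not call Pub/Sub or Cloud Functions. The names `consume_all`, `handler`, and `MAX_INSTANCES` are hypothetical and exist only for this illustration.

```python
import queue
import threading
import time

MAX_INSTANCES = 5  # assumption: mirrors the max-instances setting described above


def consume_all(messages, handler, max_workers=MAX_INSTANCES):
    """Drain a backlog with at most `max_workers` handlers running at once.

    Returns the processed messages and the peak number of concurrent handlers.
    """
    backlog = queue.Queue()
    for message in messages:
        backlog.put(message)  # messages accumulate instead of being dropped

    lock = threading.Lock()
    state = {"running": 0, "peak": 0}
    processed = []

    def worker():
        while True:
            try:
                message = backlog.get_nowait()
            except queue.Empty:
                return  # backlog is empty, this worker is done
            with lock:
                state["running"] += 1
                state["peak"] = max(state["peak"], state["running"])
            try:
                handler(message)
            finally:
                with lock:
                    state["running"] -= 1
                    processed.append(message)

    threads = [threading.Thread(target=worker) for _ in range(max_workers)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return processed, state["peak"]


if __name__ == "__main__":
    # 50 queued messages, each taking a little time, handled 5 at a time.
    done, peak = consume_all(range(50), lambda m: time.sleep(0.01))
    print(len(done), "messages processed, peak concurrency", peak)
```

In this model nothing is lost while the workers are busy: every message waits in the backlog until one of the five workers is free, which is the behavior I was hoping to get from the topic.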

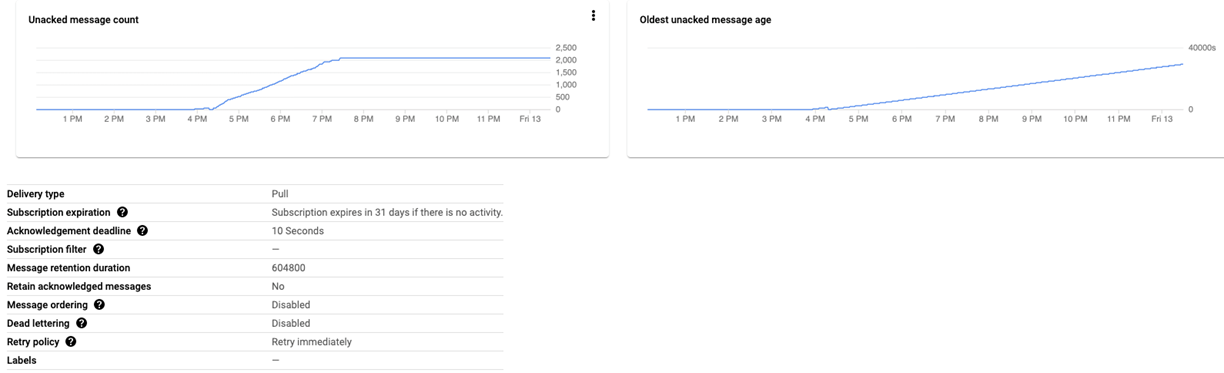

But it seems that every message that did not trigger the Cloud Function (and so was never acked) was simply marked UNACK and left behind. My subscription details:

The maximum ack deadline is too low for me: because of the rate limiting, it may take a few hours until the Cloud Function gets to a given message.

Is there anything I can change in Pub/Sub to fit my needs, or do I need to add a queue (Pub/Sub sends to a Task Queue, and the Cloud Function consumes from the Task Queue)?

BTW, the Pub/Sub data is actually GCS events. If this were AWS, I would simply send S3 file-created events to SQS and have Lambdas on the other side of the queue consume them.

Any help would be appreciated.