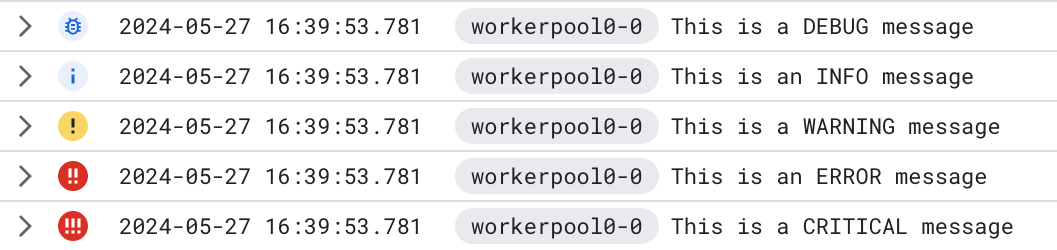

I have an application running in Kubernetes on Google Cloud. The application is written in Python using FastAPI. Its logs are visible in Google Cloud Logging, but their severity appears to be translated incorrectly, as the screenshot above shows.

While FastAPI's access logs are correctly written with "INFO" severity, any messages written by custom loggers appear as errors, even when they come from a logger.info call.

I pass the following logging config to uvicorn via the --log-config command line option:

    {
      "version": 1,
      "disable_existing_loggers": false,
      "formatters": {
        "simple": {
          "format": "%(asctime)s - %(name)s - %(levelname)s - %(message)s"
        },
        "default": {
          "()": "uvicorn.logging.DefaultFormatter",
          "datefmt": "%Y-%m-%dT%H:%M:%S",
          "format": "[%(asctime)s.%(msecs)04dZ] %(name)s %(levelprefix)s %(message).400s"
        },
        "access": {
          "()": "uvicorn.logging.AccessFormatter",
          "datefmt": "%Y-%m-%dT%H:%M:%S",
          "format": "[%(asctime)s.%(msecs)04dZ] %(name)s %(levelprefix)s %(message)s"
        }
      },
      "handlers": {
        "default": {
          "formatter": "default",
          "class": "logging.StreamHandler",
          "stream": "ext://sys.stderr"
        },
        "access": {
          "formatter": "access",
          "class": "logging.StreamHandler",
          "stream": "ext://sys.stdout"
        }
      },
      "loggers": {
        "uvicorn.error": {
          "level": "INFO",
          "handlers": ["default"],
          "propagate": false
        },
        "uvicorn.access": {
          "level": "INFO",
          "handlers": ["access"],
          "propagate": false
        },
        "uvicorn": {
          "level": "INFO",
          "handlers": ["default"],
          "propagate": false
        },
        "jsonrpc": {
          "level": "INFO",
          "handlers": ["default"],
          "propagate": false
        },
        "api": {
          "level": "INFO",
          "handlers": ["default"],
          "propagate": false
        }
      },
      "root": {
        "level": "INFO",
        "handlers": ["default"]
      }
    }

All the uvicorn* loggers are handled correctly, but the jsonrpc and api loggers always show up as "ERROR" in Google Cloud Logging.

Following the documentation for google-cloud-logging, I use the following to set up Cloud Logging:

    import google.cloud.logging

    client = google.cloud.logging.Client()
    client.setup_logging()
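One thing I am unsure about is how this interacts with the config above. As far as I understand, client.setup_logging() attaches its handler to the Python root logger, so a logger configured with "propagate": false would never hand its records to that handler. The sketch below illustrates only that propagation behavior using the standard library. RecordingHandler is a hypothetical stand-in for whatever handler sits on the root logger, not the real Cloud Logging handler, and "other" is an illustrative logger name.

```python
import logging


class RecordingHandler(logging.Handler):
    """Hypothetical stand-in for a root-level handler; it only stores what it receives."""

    def __init__(self):
        super().__init__()
        self.messages = []

    def emit(self, record):
        self.messages.append(f"{record.name}:{record.getMessage()}")


# Attach the stand-in handler to the root logger.
root_handler = RecordingHandler()
root = logging.getLogger()
root.addHandler(root_handler)
root.setLevel(logging.INFO)

# Mirrors the "api" entry in the config: own handler, propagate disabled.
isolated = logging.getLogger("api")
isolated.setLevel(logging.INFO)
isolated.addHandler(logging.NullHandler())
isolated.propagate = False

# A logger without its own config propagates to the root by default.
propagating = logging.getLogger("other")

isolated.info("not seen by root")
propagating.info("seen by root")
```

If that assumption holds, records from api and jsonrpc would only ever be emitted by the stream handler named in the config, which would make the choice of stream the deciding factor for their severity.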

What am I missing? Is anything wrong with my configuration file? Is this expected or known behavior?

Edit: Part of the issue appears to be which streams the console handlers write to. Switching the default handler to stdout and adding a separate error handler that writes to stderr seems to solve the problem.
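For anyone hitting the same thing, here is a minimal sketch of the handler split described in the edit, written as a Python dict for logging.config.dictConfig so it runs on its own. It assumes that lines written to stderr are ingested as ERROR and lines written to stdout as INFO, which matches what I observed. The MaxLevelFilter class, the "below_error" filter, and the "error" handler name are my own illustrative additions, and the plain "simple" formatter replaces the uvicorn formatters to keep the example self-contained. The filter is there only so that errors are not printed twice.

```python
import logging
import logging.config


class MaxLevelFilter(logging.Filter):
    """Hypothetical helper: pass only records strictly below max_level."""

    def __init__(self, max_level=logging.ERROR):
        super().__init__()
        self.max_level = max_level

    def filter(self, record):
        return record.levelno < self.max_level


LOGGING_CONFIG = {
    "version": 1,
    "disable_existing_loggers": False,
    "formatters": {
        "simple": {
            "format": "%(asctime)s - %(name)s - %(levelname)s - %(message)s"
        }
    },
    "filters": {
        # "()" accepts a callable, so the class can be passed directly.
        "below_error": {"()": MaxLevelFilter, "max_level": logging.ERROR}
    },
    "handlers": {
        # Everything below ERROR goes to stdout.
        "default": {
            "class": "logging.StreamHandler",
            "formatter": "simple",
            "stream": "ext://sys.stdout",
            "filters": ["below_error"],
        },
        # ERROR and above go to stderr.
        "error": {
            "class": "logging.StreamHandler",
            "formatter": "simple",
            "stream": "ext://sys.stderr",
            "level": "ERROR",
        },
    },
    "loggers": {
        "jsonrpc": {"level": "INFO", "handlers": ["default", "error"], "propagate": False},
        "api": {"level": "INFO", "handlers": ["default", "error"], "propagate": False},
    },
    "root": {"level": "INFO", "handlers": ["default", "error"]},
}


def configure_logging():
    """Apply the sketch config; the streams are resolved when this is called."""
    logging.config.dictConfig(LOGGING_CONFIG)


configure_logging()
logging.getLogger("api").info("written to stdout")
logging.getLogger("api").error("written to stderr")
```

The same structure should carry over to the JSON file passed via --log-config, except that the filter's "()" entry would need to be an importable dotted path instead of the class object.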