I'm building an RNN LSTM network to classify texts based on the writers' age (binary classification - young / adult).

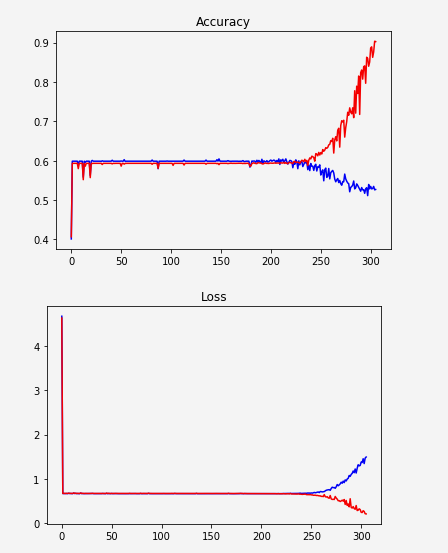

Seems like the network does not learn and suddenly starts overfitting:

One possibility could be that the data representation is not good enough. I just sorted the unique words by their frequency and gave them indices. E.g.:

unknown -> 0

the -> 1

a -> 2

. -> 3

to -> 4

So I'm trying to replace that with word embedding. I saw a couple of examples but I'm not able to implement it in my code. Most of the examples look like this:

embedding = tf.Variable(tf.random_uniform([vocab_size, hidden_size], -1, 1))

inputs = tf.nn.embedding_lookup(embedding, input_data)

Does this mean we're building a layer that learns the embedding? I thought that one should download some Word2Vec or Glove and just use that.

Anyway let's say I want to build this embedding layer...

If I use these 2 lines in my code I get an error:

TypeError: Value passed to parameter 'indices' has DataType float32 not in list of allowed values: int32, int64

So I guess I have to change the input_data type to int32. So I do that (it's all indices after all), and I get this:

TypeError: inputs must be a sequence

I tried wrapping inputs (argument to tf.contrib.rnn.static_rnn) with a list: [inputs] as suggested in this answer, but that produced another error:

ValueError: Input size (dimension 0 of inputs) must be accessible via shape inference, but saw value None.

Update:

I was unstacking the tensor x before passing it to embedding_lookup. I moved the unstacking after the embedding.

Updated code:

MIN_TOKENS = 10

MAX_TOKENS = 30

x = tf.placeholder("int32", [None, MAX_TOKENS, 1])

y = tf.placeholder("float", [None, N_CLASSES]) # 0.0 / 1.0

...

seqlen = tf.placeholder(tf.int32, [None]) #list of each sequence length*

embedding = tf.Variable(tf.random_uniform([VOCAB_SIZE, HIDDEN_SIZE], -1, 1))

inputs = tf.nn.embedding_lookup(embedding, x) #x is the text after converting to indices

inputs = tf.unstack(inputs, MAX_POST_LENGTH, 1)

outputs, states = tf.contrib.rnn.static_rnn(lstm_cell, inputs, dtype=tf.float32, sequence_length=seqlen) #---> Produces error

*seqlen: I zero-padded the sequences so all of them have the same list size, but since the actual size differ, I prepared a list describing the length without the padding.

New error:

ValueError: Input 0 of layer basic_lstm_cell_1 is incompatible with the layer: expected ndim=2, found ndim=3. Full shape received: [None, 1, 64]

64 is the size of each hidden layer.

It's obvious that I have a problem with the dimensions... How can I make the inputs fit the network after embedding?

ValueErrorproblem (+ adjusting the input accordingly - reshaping the batches from (BATCH_SIZE, MAX_TOKENS, 1) to (BATCH_SIZE, MAX_TOKENS) to match with the newxshape). This however didn't solve the learning problem. The graphs now look like: this. I guess you deserve the bounty though (and +1). – Stowers