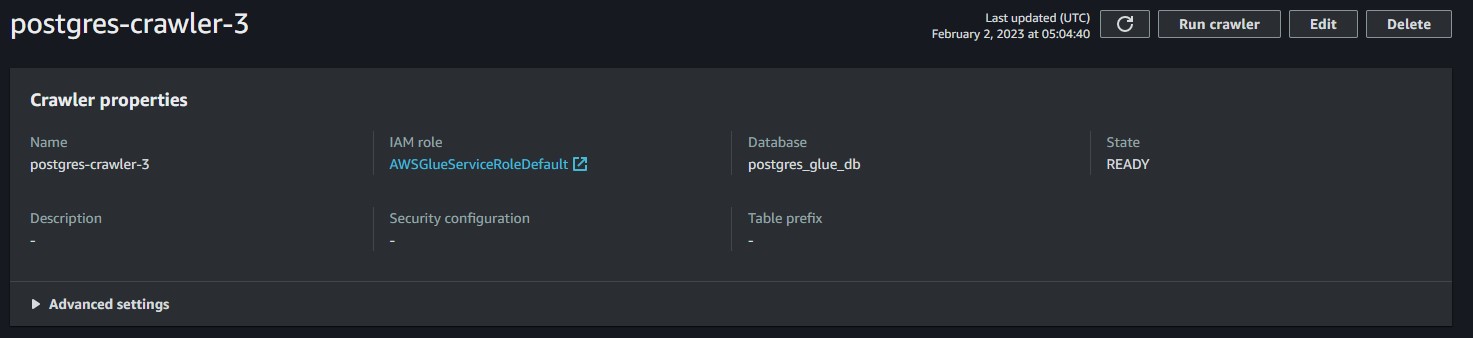

I am unable to run a newly created AWS Glue crawler. I followed the IAM role guide at https://docs.aws.amazon.com/glue/latest/dg/create-an-iam-role.html?icmpid=docs_glue_console

- Created a new crawler role, `AWSGlueServiceRoleDefault`, with the `AWSGlueServiceRole` and `AmazonS3FullAccess` managed policies

- The trust relationship contains:

      {
        "Version": "2012-10-17",
        "Statement": [
          {
            "Effect": "Allow",
            "Principal": {
              "Service": "glue.amazonaws.com"
            },
            "Action": "sts:AssumeRole"
          }
        ]
      }
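
As a sanity check on the trust relationship, here is a minimal sketch that parses the policy document and confirms that `glue.amazonaws.com` is allowed to call `sts:AssumeRole`. The function name `allows_service_to_assume` is hypothetical, and the check is deliberately simplified: it ignores `Condition` blocks, `Deny` statements, and wildcards, so it is not a substitute for IAM's real policy evaluation.

```python
import json


def allows_service_to_assume(policy: dict, service: str = "glue.amazonaws.com") -> bool:
    """Return True if an Allow statement lets `service` call sts:AssumeRole.

    Simplified illustration only: Condition blocks, Deny statements and
    wildcards are not evaluated.
    """
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be a bare object
        statements = [statements]

    for stmt in statements:
        if stmt.get("Effect") != "Allow":
            continue

        actions = stmt.get("Action", [])
        if isinstance(actions, str):  # Action may be a string or a list
            actions = [actions]

        principal = stmt.get("Principal", {})
        services = principal.get("Service", []) if isinstance(principal, dict) else []
        if isinstance(services, str):  # Service may be a string or a list
            services = [services]

        if "sts:AssumeRole" in actions and service in services:
            return True
    return False


# The trust relationship from the role described above.
TRUST_POLICY = json.loads("""
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {"Service": "glue.amazonaws.com"},
      "Action": "sts:AssumeRole"
    }
  ]
}
""")

print(allows_service_to_assume(TRUST_POLICY))  # True
```

For the policy shown here the check passes, which suggests the trust relationship itself is not what blocks the crawler.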

- The user executing the crawler signs in via SSO and inherits `arn:aws:iam::aws:policy/AdministratorAccess`

- I even tried creating a new AWS user with all permissions

![AWS Permissions]()

When I run the crawler, it fails within 8 seconds with the following error:

Crawler cannot be started. Verify the permissions in the policies attached to the IAM role defined in the crawler

What other IAM permissions are needed?
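
One way to double-check which role the crawler is actually configured with, and which managed policies are attached to it, is sketched below. This is a hedged illustration, not a fix: `crawler_role_summary` is a hypothetical helper, the crawler name `my-crawler` is a placeholder, and the code assumes boto3 is installed with working credentials. It relies on the Glue `get_crawler` and IAM `list_attached_role_policies` calls and reads only the first page of attached policies.

```python
def crawler_role_summary(glue_client, iam_client, crawler_name: str) -> dict:
    """Return the crawler's configured role and that role's attached managed policies.

    `glue_client` and `iam_client` are expected to behave like
    boto3.client("glue") and boto3.client("iam").
    """
    crawler = glue_client.get_crawler(Name=crawler_name)["Crawler"]
    role = crawler["Role"]  # may be a plain role name or a full role ARN

    # IAM expects the role name, so keep only the last path segment.
    role_name = role.rsplit("/", 1)[-1]

    # Only the first page of results is read in this sketch.
    attached = iam_client.list_attached_role_policies(RoleName=role_name)
    return {
        "role": role,
        "role_name": role_name,
        "attached_policies": [p["PolicyName"] for p in attached["AttachedPolicies"]],
    }


# Usage (assumes boto3 is installed and credentials are configured;
# "my-crawler" is a placeholder name):
#
#   import boto3
#   summary = crawler_role_summary(
#       boto3.client("glue"), boto3.client("iam"), "my-crawler"
#   )
#   print(summary)
```

If the reported role or its attached policies differ from what was set up in the console, that mismatch would be worth ruling out before looking at other permissions.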