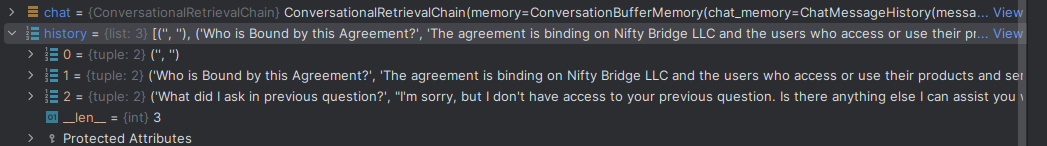

ConversationalRetrievalChain performs a few steps:

- Rephrasing the input into a standalone question

- Retrieving relevant documents for that question

- Asking the question with the retrieved documents as context

If you pass `memory` in the config, the chain will also update it with each question and answer.
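The flow described above can be sketched without LangChain at all. This is a minimal, hypothetical model, not LangChain's implementation: `Llm`, `Retriever`, `Turn` and `runConversationalRetrieval` are made-up names, the `Human:`/`Assistant:` history format is an assumption, and the prompts are shortened versions of the ones quoted below. It only illustrates which step sees which inputs.

```typescript
// Minimal sketch of the chain's flow. `Llm`, `Retriever`, `Turn` and
// `runConversationalRetrieval` are hypothetical stand-ins, not LangChain's API.
type Llm = (prompt: string) => string;
type Retriever = (query: string) => string[];

interface Turn {
  question: string;
  answer: string;
}

function runConversationalRetrieval(
  llm: Llm,
  retriever: Retriever,
  memory: Turn[],
  question: string
): string {
  // Assumed history format: one "Human:" / "Assistant:" pair per turn.
  const chatHistory = memory
    .map((t) => `Human: ${t.question}\nAssistant: ${t.answer}`)
    .join("\n");

  // Step 1: rephrase the follow-up into a standalone question.
  // This is the only prompt that sees the chat history.
  // (This sketch skips the rephrasing call when there is no history yet.)
  const standalone =
    memory.length === 0
      ? question
      : llm(
          `Chat History:\n${chatHistory}\nFollow Up Input: ${question}\nStandalone question:`
        );

  // Step 2: retrieve documents for the standalone question.
  const docs = retriever(standalone);

  // Step 3: answer from the retrieved context and the question only.
  const answer = llm(
    `${docs.join("\n\n")}\n\nQuestion: ${standalone}\nHelpful Answer:`
  );

  // Step 4 (only when memory is passed): record this turn.
  memory.push({ question, answer });
  return answer;
}
```

Calling it twice with a fake `llm` that records its prompts shows that the second rephrasing prompt contains the earlier turn while the final answering prompt does not, which is why the answer step can't refer back to the conversation unless its prompt is changed.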

As I didn't find anything in the docs about the prompts it uses, I looked for them in the repo. There are two crucial ones:

```typescript
const question_generator_template = `Given the following conversation and a follow up question, rephrase the follow up question to be a standalone question.

Chat History:
{chat_history}
Follow Up Input: {question}
Standalone question:`;
```

and

```typescript
export const DEFAULT_QA_PROMPT = /*#__PURE__*/ new PromptTemplate({
  template:
    "Use the following pieces of context to answer the question at the end. If you don't know the answer, just say that you don't know, don't try to make up an answer.\n\n{context}\n\nQuestion: {question}\nHelpful Answer:",
  inputVariables: ["context", "question"],
});
```

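As you can see, only `question_generator_template` uses `chat_history`. The QA prompt only receives the retrieved `context` and the `question`, so the step that produces the final answer never sees the conversation.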
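One quick way to confirm which inputs each prompt actually consumes is to list the `{placeholders}` in its template text. The helper below, `templateVariables`, is hypothetical (not part of LangChain) and assumes simple single-brace placeholders with no escaped `{{ }}`; the template strings are copied from the two prompts quoted above.

```typescript
// Hypothetical helper: list the distinct {placeholders} in a template string,
// in order of first appearance. Does not handle escaped braces ("{{", "}}").
function templateVariables(template: string): string[] {
  const names: string[] = [];
  const pattern = /\{(\w+)\}/g;
  let match: RegExpExecArray | null;
  while ((match = pattern.exec(template)) !== null) {
    if (names.indexOf(match[1]) === -1) {
      names.push(match[1]);
    }
  }
  return names;
}

// Template texts copied from the two prompts quoted above.
const questionGeneratorTemplate = `Given the following conversation and a follow up question, rephrase the follow up question to be a standalone question.

Chat History:
{chat_history}
Follow Up Input: {question}
Standalone question:`;

const defaultQaTemplate =
  "Use the following pieces of context to answer the question at the end. If you don't know the answer, just say that you don't know, don't try to make up an answer.\n\n{context}\n\nQuestion: {question}\nHelpful Answer:";

console.log(templateVariables(questionGeneratorTemplate)); // logs [ 'chat_history', 'question' ]
console.log(templateVariables(defaultQaTemplate)); // logs [ 'context', 'question' ]
```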

I ran into the same issue as you and changed the prompt for the QA chain. Since every part of the chain has access to all of its input variables, you can simply modify the prompt and add a `chat_history` input, like this:

```typescript
// Import path used by older `langchain` releases; newer ones export it from "@langchain/core/prompts".
import { PromptTemplate } from "langchain/prompts";

const QA_PROMPT = new PromptTemplate({
  template:
    "Use the following pieces of context and chat history to answer the question at the end.\n" +
    "If you don't know the answer, just say that you don't know, " +
    "don't try to make up an answer.\n\n" +
    "{context}\n\nChat history: {chat_history}\n\nQuestion: {question}\nHelpful Answer:",
  inputVariables: ["context", "question", "chat_history"],
});
```
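To see what the modified prompt produces, here is a rough, self-contained rendering of the same template text. `formatPrompt` is a hypothetical stand-in for `PromptTemplate`'s formatting (plain `{name}` substitution only, throwing on a missing value), and the context, history and question values are made up for illustration.

```typescript
// Hypothetical stand-in for PromptTemplate formatting: replaces each {name}
// placeholder with values[name] and throws if a value is missing.
function formatPrompt(template: string, values: Record<string, string>): string {
  return template.replace(/\{(\w+)\}/g, (_whole: string, name: string) => {
    if (!(name in values)) {
      throw new Error(`Missing value for input variable "${name}"`);
    }
    return values[name];
  });
}

// Same template text as QA_PROMPT above.
const qaTemplate =
  "Use the following pieces of context and chat history to answer the question at the end.\n" +
  "If you don't know the answer, just say that you don't know, " +
  "don't try to make up an answer.\n\n" +
  "{context}\n\nChat history: {chat_history}\n\nQuestion: {question}\nHelpful Answer:";

// Made-up example values; in the real chain these come from retrieval and memory.
const rendered = formatPrompt(qaTemplate, {
  context: "Paris is the capital of France.",
  chat_history: "Human: What is the capital of France?\nAI: Paris.",
  question: "How large is its population?",
});
console.log(rendered);
```

The rendered text now carries the previous turn into the final prompt, and leaving out `chat_history` fails loudly instead of silently producing a prompt without it.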

and then pass it to the `fromLLM()` function through `qaChainOptions`:

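```typescript
import { ConversationalRetrievalQAChain } from "langchain/chains";

// JS equivalent of Python's from_llm; llm, vectorstore and memory are your own objects.
const chat = ConversationalRetrievalQAChain.fromLLM(llm, vectorstore.asRetriever(), {
  memory,
  qaChainOptions: { type: "stuff", prompt: QA_PROMPT },
});
```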

Now the final prompt, the one that actually asks the question, has `chat_history` available, and it should work as you expect.

You can also pass `verbose: true` in the config so that it logs every call together with its prompt, which makes it easier to debug.

Let me know if it helped you.