According to the Kubernetes documentation, the `stabilizationWindowSeconds` property can be used to avoid flapping of replicas:

> The stabilization window is used to restrict the flapping of replicas when the metrics used for scaling keep fluctuating. The stabilization window is used by the autoscaling algorithm to consider the computed desired state from the past to prevent scaling.
>
> When the metrics indicate that the target should be scaled down the algorithm looks into previously computed desired states and uses the highest value from the specified interval.
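To make the quoted scale-down rule concrete, here is a minimal sketch. It assumes the autoscaler keeps a list of timestamped desired-replica recommendations. The names `Recommendation` and `stabilized_scale_down` are hypothetical and are not the controller's actual code. The stabilized value is the highest recommendation that falls inside the window:

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Recommendation:
    timestamp: float  # seconds since an arbitrary start (hypothetical clock)
    replicas: int     # desired replica count computed at that moment


def stabilized_scale_down(history: List[Recommendation], now: float,
                          desired: int, window_seconds: float) -> int:
    """Apply the quoted scale-down rule: use the highest desired replica
    count computed within the last `window_seconds`, including `desired`."""
    cutoff = now - window_seconds
    in_window = [r.replicas for r in history if r.timestamp > cutoff]
    return max(in_window + [desired])


history = [Recommendation(0, 7), Recommendation(600, 6), Recommendation(1200, 6)]
print(stabilized_scale_down(history, now=1500, desired=6, window_seconds=1800))
print(stabilized_scale_down(history, now=1900, desired=6, window_seconds=1800))
```

With a 1800-second window, the recommendation of 7 made at t=0 keeps the result at 7 until it ages out of the window. After that, the result drops to 6.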

From what I understand from the documentation, with the following HPA configuration:

```yaml
horizontalPodAutoscaler:
  enabled: true
  minReplicas: 2
  maxReplicas: 14
  targetCPUUtilizationPercentage: 70
  behavior:
    scaleDown:
      stabilizationWindowSeconds: 1800
      policies:
        - type: Pods
          value: 1
          periodSeconds: 300
    scaleUp:
      stabilizationWindowSeconds: 60
      policies:
        - type: Pods
          value: 2
          periodSeconds: 60
```
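The `scaleDown.policies` entry above (`type: Pods`, `value: 1`, `periodSeconds: 300`) only limits how fast replicas are removed. Whether a lower recommendation is acted on at all is decided by the stabilization window. The following is a simplified sketch of a single `type: Pods` scale-down policy. It assumes a hypothetical log of earlier scale-down events, and the function name `next_replicas` is illustrative only:

```python
from typing import List, Tuple


def next_replicas(events: List[Tuple[float, int]], now: float, current: int,
                  desired: int, value: int, period_seconds: float) -> int:
    """Replica count after applying one `type: Pods` scale-down policy.

    `events` is a hypothetical log of (timestamp, pods_removed) tuples for
    earlier scale-downs. At most `value` pods may be removed per
    `period_seconds`. Scale-ups are outside this sketch and pass through.
    """
    if desired >= current:
        return desired  # not a scale-down; this sketch leaves it untouched
    cutoff = now - period_seconds
    removed = sum(n for t, n in events if t > cutoff)
    # Replica count at the start of the period, minus the allowed budget,
    # never higher than the current count.
    limit = min(current, current + removed - value)
    return max(desired, limit)


print(next_replicas([], now=0, current=7, desired=5, value=1, period_seconds=300))
```

With these settings, a single-pod step such as 7 to 6 always fits within the policy budget once 300 seconds have passed since the previous removal. The policy therefore slows scale-down but cannot by itself prevent a one-pod flap.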

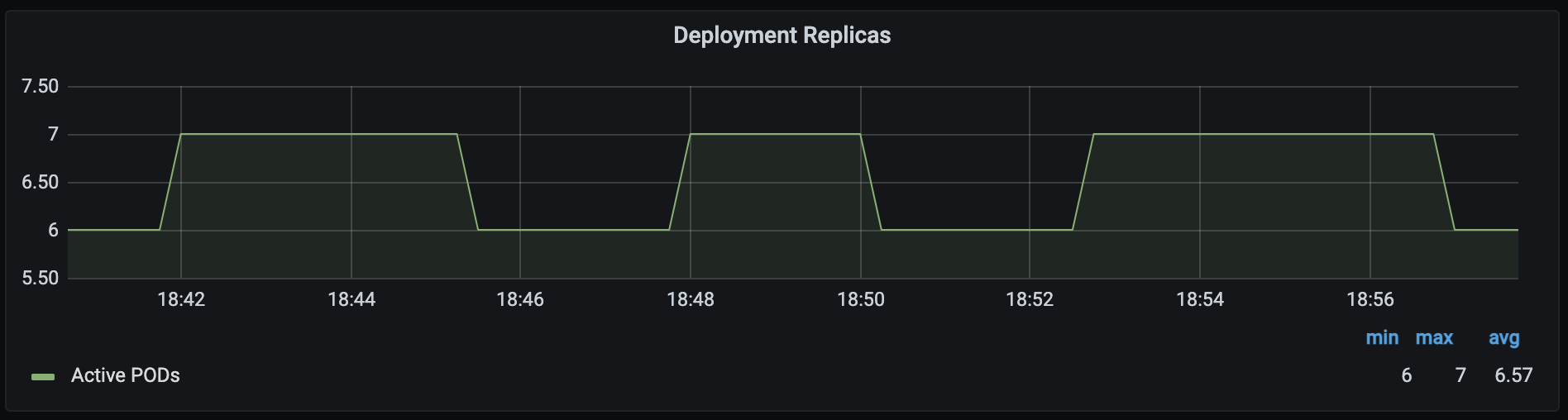

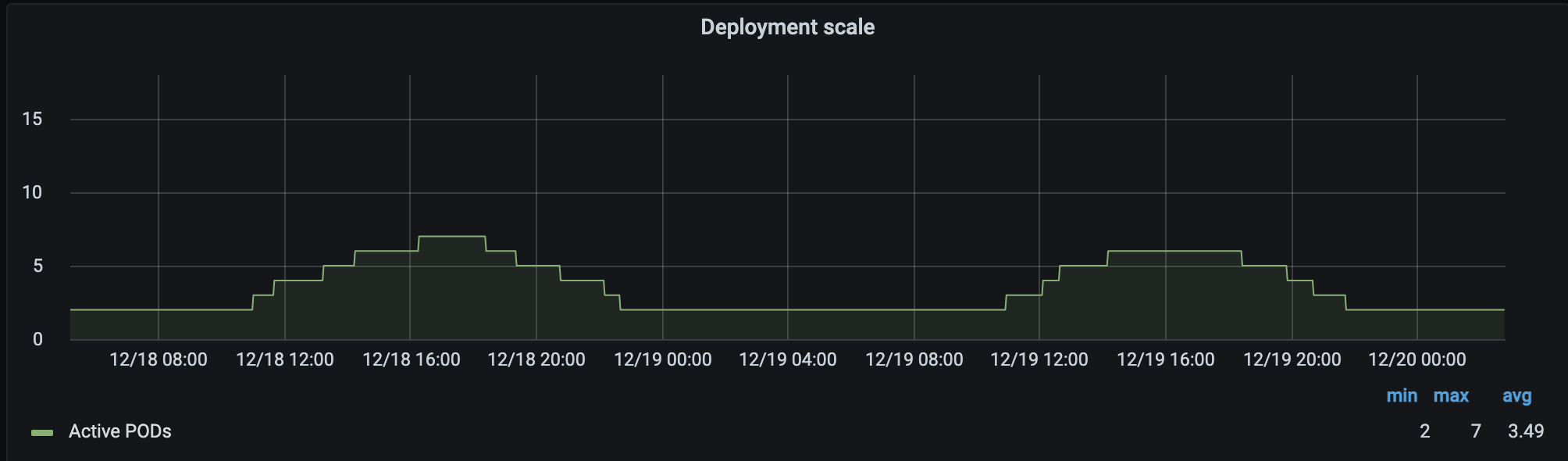

Scaling down my deployment (say, from 7 pods to 6) shouldn't happen if, at any time during the last 1800 seconds (30 minutes), the HPA calculated a target of 7 pods. But I'm still observing flapping of replicas in the deployment.

What did I misunderstand in the documentation, and how can I avoid continuous scaling up and down by one pod?

Kubernetes v1.20

HPA description:

```
CreationTimestamp:                                    Thu, 14 Oct 2021 12:14:37 +0200
Reference:                                            Deployment/my-deployment
Metrics:                                              ( current / target )
  resource cpu on pods  (as a percentage of request):  64% (1621m) / 70%
Min replicas:                                         2
Max replicas:                                         14
Behavior:
  Scale Up:
    Stabilization Window: 60 seconds
    Select Policy: Max
    Policies:
      - Type: Pods  Value: 2  Period: 60 seconds
  Scale Down:
    Stabilization Window: 1800 seconds
    Select Policy: Max
    Policies:
      - Type: Pods  Value: 1  Period: 300 seconds
Deployment pods:    3 current / 3 desired
Conditions:
  Type            Status  Reason              Message
  ----            ------  ------              -------
  AbleToScale     True    ReadyForNewScale    recommended size matches current size
  ScalingActive   True    ValidMetricFound    the HPA was able to successfully calculate a replica count from cpu resource utilization (percentage of request)
  ScalingLimited  False   DesiredWithinRange  the desired count is within the acceptable range
Events:           <none>
```
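The numbers in this output can be checked against the scaling formula from the HPA documentation: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). Scaling is skipped when the ratio is within the default 0.1 tolerance (`--horizontal-pod-autoscaler-tolerance`). Below is a minimal sketch of that formula. The helper name `desired_replicas` is hypothetical, and details the controller also handles (missing metrics, unready pods) are left out:

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Base HPA formula; keeps the current size when the ratio is within tolerance."""
    ratio = current_metric / target_metric
    if abs(1.0 - ratio) <= tolerance:
        return current_replicas  # close enough to the target: no change
    return math.ceil(current_replicas * ratio)


print(desired_replicas(3, 64, 70))  # 3 pods at 64% CPU against a 70% target
```

With 3 pods at 64% against a 70% target, the ratio is about 0.914, which is within the 0.1 tolerance. The base recommendation therefore stays at 3, consistent with `3 current / 3 desired`.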

`kubectl describe hpa` command? Could you check that all pods from this deployment are in the `Running` state, and that there are no pods stuck somehow (for example in the `Terminating` state)? – Samuelson

`apiVersion` are you using in HPA definition? There are some differences between them; some fields are replaced with different ones. – Samuelson