I have a basic understanding of both JSOR and jVerbs.

Both address the limitations of JNI and use a fast path to reduce latency. Both use the user-space RDMA Verbs interface to avoid context switches and to provide fast-path access to the network. Both also offer options for zero-copy transfer.

The difference is that JSOR still uses the Java Socket interface, whereas jVerbs provides a new interface. jVerbs also has a feature called Stateful Verbs Calls, which avoids repeatedly serializing RDMA requests and, according to its authors, reduces latency. Because jVerbs exposes a more native interface, applications can use RDMA operations directly. I read the jVerbs paper from SoCC 2013, in which the authors build jverbsRPC on top of jVerbs and show that it significantly reduces the latency of ZooKeeper and memcached operations.
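To make the Stateful Verbs Call idea concrete, here is a hypothetical sketch of the concept only: a request is serialized into a direct buffer once, then executed many times, with a single field patched in place when it changes. The class name, method names, and 16-byte layout are invented for illustration and are not the jVerbs API.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

// Hypothetical illustration of the "stateful verbs call" idea: serialize a
// request into a native-layout buffer once, then re-execute it many times.
// None of these names come from the jVerbs API.
public class StatefulCallSketch {

    /** Hypothetical send request: address, length, and key of a registered buffer. */
    static final class CachedPostSend {
        // Assumed layout: 8-byte address, 4-byte length, 4-byte key (16 bytes total).
        private static final int ADDR_OFF = 0, LEN_OFF = 8, KEY_OFF = 12, SIZE = 16;
        private final ByteBuffer serialized =
                ByteBuffer.allocateDirect(SIZE).order(ByteOrder.nativeOrder());
        private int serializations = 0;
        private int executions = 0;

        CachedPostSend(long addr, int length, int key) {
            // Serialize the whole request exactly once, at construction time.
            serialized.putLong(ADDR_OFF, addr);
            serialized.putInt(LEN_OFF, length);
            serialized.putInt(KEY_OFF, key);
            serializations++;
        }

        /** Patch one field in place instead of re-serializing the whole request. */
        CachedPostSend setLength(int length) {
            serialized.putInt(LEN_OFF, length);
            return this;
        }

        /** Stand-in for handing the pre-serialized buffer to native code. */
        ByteBuffer execute() {
            executions++;
            return serialized.duplicate();
        }

        int serializations() { return serializations; }
        int executions() { return executions; }
        int length() { return serialized.getInt(LEN_OFF); }
    }

    public static void main(String[] args) {
        CachedPostSend call = new CachedPostSend(0x1000L, 64, 42);
        for (int i = 0; i < 1000; i++) {
            call.execute();              // no per-call serialization
        }
        call.setLength(128).execute();   // in-place update, still no re-serialization
        System.out.println(call.serializations() + " serialization(s), "
                + call.executions() + " executions, length=" + call.length());
    }
}
```

Running `main` prints `1 serialization(s), 1001 executions, length=128`: the serialization cost is paid once no matter how many times the request is posted.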

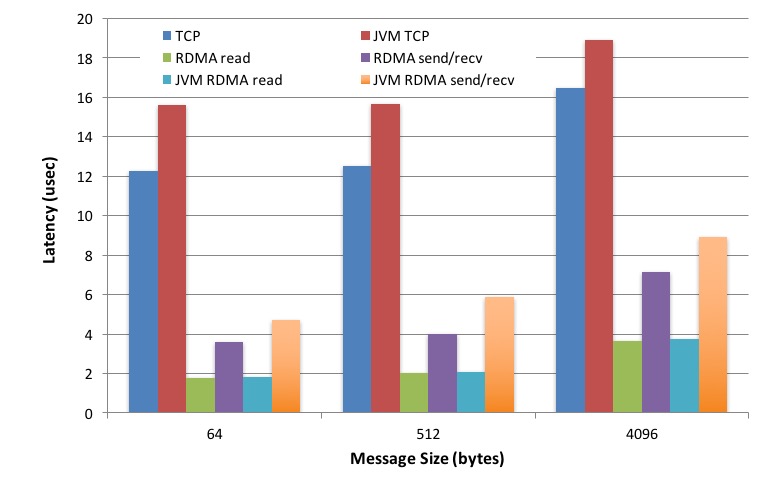

The documentation for both shows that they outperform regular Java sockets running over TCP/IP, SDP, and IPoIB.

I don't have any performance comparison between JSOR and jVerbs. I suspect jVerbs may perform better than JSOR, but with JSOR I don't have to change my existing code, because it still uses the same Java socket interface.

My question is: what performance gain might jVerbs offer relative to JSOR? Does anyone know, or have experience with both? Any comparison data would be great; I could not find any.
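A minimal sketch of the kind of ping-pong latency test that could produce such data is below. It uses only `java.net.Socket` and `ServerSocket`, so it is the sort of unmodified socket code JSOR is meant to accelerate; enabling JSOR happens outside the code through IBM's JVM configuration (not shown here, see IBM's JSOR documentation), while a jVerbs version would need its send/receive path rewritten. The message size, warm-up count, iteration count, and loopback setup are assumptions; for a meaningful JSOR measurement the client and server would need to run on two RDMA-connected hosts.

```java
import java.io.DataInputStream;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;

// Minimal ping-pong latency sketch using only standard Java sockets.
// Message size and iteration counts are arbitrary assumptions.
public class PingPong {

    /** Returns the mean round-trip time in microseconds over `iters` timed round trips. */
    static double measureRttMicros(int msgSize, int warmup, int iters) throws Exception {
        try (ServerSocket server = new ServerSocket(0, 1, InetAddress.getLoopbackAddress())) {
            // Echo server: read a full message, send it straight back.
            Thread echo = new Thread(() -> {
                try (Socket s = server.accept()) {
                    s.setTcpNoDelay(true);
                    DataInputStream in = new DataInputStream(s.getInputStream());
                    OutputStream out = s.getOutputStream();
                    byte[] buf = new byte[msgSize];
                    for (int i = 0; i < warmup + iters; i++) {
                        in.readFully(buf);
                        out.write(buf);
                        out.flush();
                    }
                } catch (IOException e) {
                    throw new RuntimeException(e);
                }
            });
            echo.start();

            try (Socket c = new Socket(InetAddress.getLoopbackAddress(), server.getLocalPort())) {
                c.setTcpNoDelay(true);
                DataInputStream in = new DataInputStream(c.getInputStream());
                OutputStream out = c.getOutputStream();
                byte[] msg = new byte[msgSize];
                byte[] reply = new byte[msgSize];
                long start = 0;
                for (int i = 0; i < warmup + iters; i++) {
                    if (i == warmup) start = System.nanoTime();  // time only post-warm-up trips
                    out.write(msg);
                    out.flush();
                    in.readFully(reply);
                }
                long elapsed = System.nanoTime() - start;
                echo.join();
                return elapsed / 1_000.0 / iters;
            }
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.printf("mean RTT: %.1f us%n", measureRttMicros(64, 1_000, 10_000));
    }
}
```

Running the same harness with and without JSOR enabled, and porting only the inner send/receive loop to jVerbs, would give a like-for-like comparison at a given message size.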