While we can install multiple processes without a supervisor, only one process can be run when docker run is issued, and when the container is stopped, only PID 1 is sent signals; the other running processes will not be stopped gracefully.

Yes, although it depends on how your main process runs (foreground or background) and how it collects (reaps) its child processes.

That is what is detailed in "Trapping signals in Docker containers":

docker stop stops a running container by sending it a SIGTERM signal, letting the main process handle it, and, after a grace period, using SIGKILL to terminate the application.

The signal sent to the container is handled by the main process that is running (PID 1).

If the application is in the foreground, meaning the application is the main process in the container (PID 1), it can handle signals directly.

But:

The process to be signaled could be running in the background, in which case you cannot send it any signals directly. One solution is then to set up a shell script as the entrypoint and orchestrate all signal processing in that script.
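The article's suggestion is a shell script; the sketch below shows the same idea in Python instead, so it is a swapped-in illustration, not the article's script. The hypothetical run_and_forward() helper runs as the entrypoint (PID 1), starts the real application as a child, relays SIGTERM and SIGINT to it, and returns the child's exit status so the entrypoint can exit with it. The file name forward.py and the command my-server in the comment are made up for the example.

```python
import signal
import subprocess
import sys


def run_and_forward(cmd):
    """Hypothetical entrypoint helper: run `cmd` as a child and relay
    SIGTERM/SIGINT to it. Returns the child's exit code (negative -N if the
    child was killed by signal N, per subprocess conventions)."""
    child = None

    def forward(signum, frame):
        # Relay the signal to the real application; a signal arriving before
        # the child exists is simply dropped in this sketch.
        if child is not None:
            child.send_signal(signum)

    # Install the handlers before starting the child to avoid a startup race.
    previous = {
        sig: signal.signal(sig, forward) for sig in (signal.SIGTERM, signal.SIGINT)
    }
    try:
        child = subprocess.Popen(cmd)
        # Since Python 3.5, wait() is retried automatically after a handler runs.
        return child.wait()
    finally:
        for sig, handler in previous.items():
            signal.signal(sig, handler)


if __name__ == "__main__" and len(sys.argv) > 1:
    # e.g. ENTRYPOINT ["python3", "forward.py", "my-server"] (hypothetical names)
    code = run_and_forward(sys.argv[1:])
    # Follow the shell convention of exiting with 128+N when killed by signal N.
    sys.exit(128 - code if code < 0 else code)
```

The wrapper itself does no cleanup: it only makes sure the signal reaches the process that knows how to shut down, then waits for it.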

The issue is further detailed in "Docker and the PID 1 zombie reaping problem":

Unix is designed in such a way that parent processes must explicitly "wait" for a child process to terminate in order to collect its exit status. Until the parent does so, using the waitpid() family of system calls, the terminated child lingers as a "zombie" process.

The action of calling waitpid() on a child process in order to eliminate its zombie is called "reaping".
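To make "zombie" and "reaping" concrete, here is a small Linux-only Python sketch; the function names are hypothetical. It forks a child that exits immediately, reads the child's state from /proc while the parent has not yet called waitpid() (the kernel reports it as Z, for zombie), and then reaps it with os.waitpid(), which returns the exit status and removes the entry.

```python
import os
import time


def proc_state(pid):
    """Return the one-letter process state from /proc/<pid>/stat (Linux only)."""
    with open(f"/proc/{pid}/stat") as f:
        # Format is "pid (comm) state ...": the state follows the last ")".
        return f.read().rsplit(")", 1)[1].split()[0]


def zombie_then_reap(timeout=5.0):
    """Fork a child that exits at once, observe it as a zombie, then reap it.

    Returns (state_before_reaping, child_exit_code).
    """
    pid = os.fork()
    if pid == 0:
        os._exit(7)  # child: terminate immediately with status 7

    # The child is gone, but until we wait for it the kernel keeps its entry
    # (pid and exit status) around: that leftover entry is the zombie.
    deadline = time.monotonic() + timeout
    state = proc_state(pid)
    while state != "Z" and time.monotonic() < deadline:
        time.sleep(0.01)
        state = proc_state(pid)

    # Reaping: collect the exit status, which lets the kernel free the entry.
    _, status = os.waitpid(pid, 0)
    return state, os.WEXITSTATUS(status)
```

A parent that never reaches the waitpid() call would leave that Z entry in the process table for as long as the parent lives.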

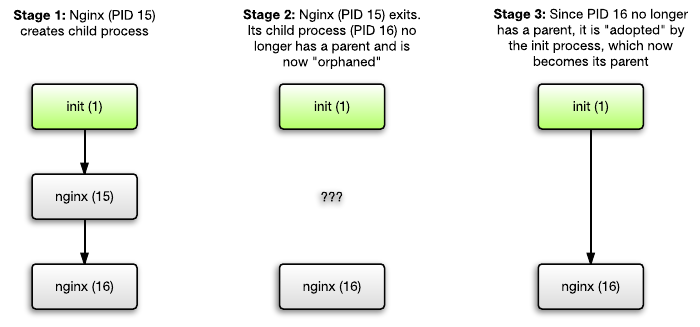

The init process (PID 1) has a special task: it "adopts" orphaned child processes, that is, processes whose parent has exited before them.

![adoption](https://static.mcmap.net/file/mcmap/ZG-AbGLDKwfiaFfnKnBoc7MpaRPQame/wp-content/uploads/2015/01/adoption.png)

The operating system expects the init process to reap adopted children too.
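The Linux-only Python sketch below shows what "reaping adopted children" involves. It is not my_init or any real init, and all names are hypothetical. Because a test process is not PID 1, it first calls prctl(PR_SET_CHILD_SUBREAPER) (available since Linux 3.4, reached here through ctypes) so that orphaned descendants are re-parented to it instead of to the real init. It then orphans a grandchild and reaps it with a non-blocking waitpid(-1, WNOHANG) loop, the kind of loop an init process would run whenever it receives SIGCHLD.

```python
import ctypes
import os
import time

PR_SET_CHILD_SUBREAPER = 36  # value from <linux/prctl.h>


def become_subreaper():
    """Ask Linux to re-parent our orphaned descendants to us, as if we were PID 1."""
    libc = ctypes.CDLL(None, use_errno=True)
    if libc.prctl(PR_SET_CHILD_SUBREAPER, 1, 0, 0, 0) != 0:
        err = ctypes.get_errno()
        raise OSError(err, os.strerror(err))


def reap_exited_children():
    """Reap every child that has already exited, without blocking.

    Returns the list of reaped pids.
    """
    reaped = []
    while True:
        try:
            pid, _status = os.waitpid(-1, os.WNOHANG)
        except ChildProcessError:
            break  # no children at all
        if pid == 0:
            break  # children remain, but none has exited yet
        reaped.append(pid)
    return reaped


def orphan_and_adopt(timeout=5.0):
    """Create an orphaned grandchild, then reap it once it is re-parented to us.

    Returns True if this process reaped the grandchild.
    """
    become_subreaper()
    r, w = os.pipe()
    child = os.fork()
    if child == 0:
        grandchild = os.fork()
        if grandchild == 0:
            time.sleep(0.2)  # outlive our parent, so we become an orphan
            os._exit(0)
        os.write(w, str(grandchild).encode())
        os._exit(0)  # the parent exits first: the grandchild is orphaned
    os.close(w)
    grandchild = int(os.read(r, 32).decode())
    os.close(r)
    os.waitpid(child, 0)  # reap our direct child

    # The grandchild was never our child, yet it is now ours to reap.
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if grandchild in reap_exited_children():
            return True
        time.sleep(0.01)
    return False
```

A main process that never runs such a loop leaves every adopted orphan as a zombie once it exits, which is exactly the situation described next.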

Problem with Docker:

We see that a lot of people run only one process in their container, and they think that when they run this single process, they're done.

But most likely, this process is not written to behave like a proper init process.

That is, instead of properly reaping adopted processes, it's probably expecting another init process to do that job, and rightly so.

Using an image like phusion/baseimage-docker helps manage one or several processes while keeping the main process init-compliant.

It uses runit instead of supervisord for multi-process management:

Runit is not there to solve the reaping problem. Rather, it's to support multiple processes. Multiple processes are encouraged for security (through process and user isolation).

Runit uses less memory than Supervisord because Runit is written in C and Supervisord in Python.

And in some use cases, process restarts in the container are preferable over whole-container restarts.
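As a toy illustration of restarting a single process inside the container, here is a hypothetical supervise() helper in Python. It is not how runit is implemented: by default runit restarts a service whenever it exits, while this sketch only restarts on a non-zero exit code and gives up after max_restarts attempts. The command name in the comment is made up.

```python
import subprocess
import sys
import time


def supervise(cmd, max_restarts=3, backoff=0.5):
    """Hypothetical mini-supervisor: rerun `cmd` each time it fails.

    Only this one process is restarted; the container and its PID 1 keep
    running. Returns the exit code of every attempt, in order.
    """
    exit_codes = []
    for attempt in range(max_restarts + 1):
        code = subprocess.call(cmd)
        exit_codes.append(code)
        if code == 0 or attempt == max_restarts:
            break  # clean exit, or restart budget used up
        time.sleep(backoff)  # avoid a tight crash loop
    return exit_codes


if __name__ == "__main__" and len(sys.argv) > 1:
    # e.g. python3 supervise.py my-worker (hypothetical command)
    print(supervise(sys.argv[1:]))
```

The benefit is the one quoted above: a crashing worker is restarted in place, without tearing down the other processes or the container itself.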

That image includes a my_init script which takes care of the "reaping" issue.

The baseimage-docker project itself states: "In baseimage-docker, we encourage running multiple processes in a single container. Not necessarily multiple services though. A logical service can consist of multiple OS processes, and we provide the facilities to easily do that."