I think there is a memory leak in the ndb library, but I cannot find where it is.

Is there a way to avoid the problem described below?

Do you have an idea for a more precise test to figure out where the problem is?

This is how I reproduced the problem:

I created a minimalist Google App Engine application with 2 files.

app.yaml:

    application: myapplicationid
    version: demo
    runtime: python27
    api_version: 1
    threadsafe: yes

    handlers:
    - url: /.*
      script: main.APP

    libraries:
    - name: webapp2
      version: latest

main.py:

    # -*- coding: utf-8 -*-
    """Memory leak demo."""
    from google.appengine.ext import ndb
    import webapp2


    class DummyModel(ndb.Model):

        content = ndb.TextProperty()


    class CreatePage(webapp2.RequestHandler):

        def get(self):
            value = str(102**100000)
            entities = (DummyModel(content=value) for _ in xrange(100))
            ndb.put_multi(entities)


    class MainPage(webapp2.RequestHandler):

        def get(self):
            """Use of `query().iter()` was suggested here:
                https://code.google.com/p/googleappengine/issues/detail?id=9610
            Same result can be reproduced without decorator and a "classic"
                `query().fetch()`.
            """
            for _ in range(10):
                for entity in DummyModel.query().iter():
                    pass  # Do whatever you want
            self.response.headers['Content-Type'] = 'text/plain'
            self.response.write('Hello, World!')


    APP = webapp2.WSGIApplication([
        ('/', MainPage),
        ('/create', CreatePage),
    ])

I uploaded the application, called /create once.

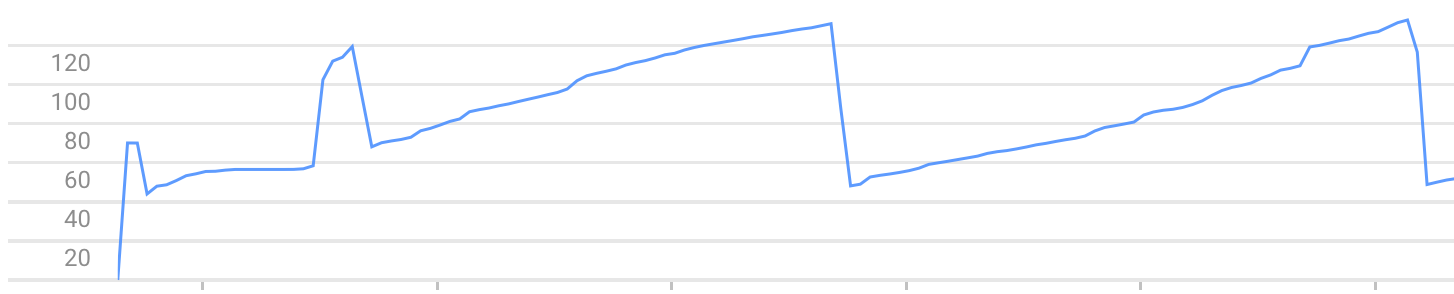

After that, each call to / increases the memory used by the instance. Until it stops due to the error Exceeded soft private memory limit of 128 MB with 143 MB after servicing 5 requests total.

Exemple of memory usage graph (you can see the memory growth and crashes) :

Note: The problem can be reproduced with another framework than webapp2, like web.py

`ndb.put_multi` tries to insert 100 entities in a single transaction. That is probably what causes that much memory to be allocated. The soft private memory limit is probably exceeded because your transactions are still running when your next request comes in, adding to the memory load. This should not occur if you wait a while between the calls (that is, wait until the transaction is done). Also, App Engine should start an additional instance if response times increase drastically. – Sheilasheilah

MainPage, not CreatePage. – Ezmeralda