If you really only need to generate combinations (where the order of elements does not matter), you may use combinadics, as implemented e.g. here by James McCaffrey.

Contrast this with k-permutations, where the order of elements does matter.

In the former case, (1,2,3), (1,3,2), (2,1,3), (2,3,1), (3,1,2) and (3,2,1) are considered the same; in the latter, they are considered distinct, even though they contain the same elements.
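Counting both makes the difference concrete: each set of k distinct elements can be arranged in k! orders, so there are k! times as many k-permutations of n elements as there are combinations:

$$
P(n,k) = \frac{n!}{(n-k)!}, \qquad C(n,k) = \binom{n}{k} = \frac{n!}{k!\,(n-k)!} = \frac{P(n,k)}{k!}
$$

For the example above, n = k = 3 gives P(3,3) = 6 orderings but only C(3,3) = 1 combination.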

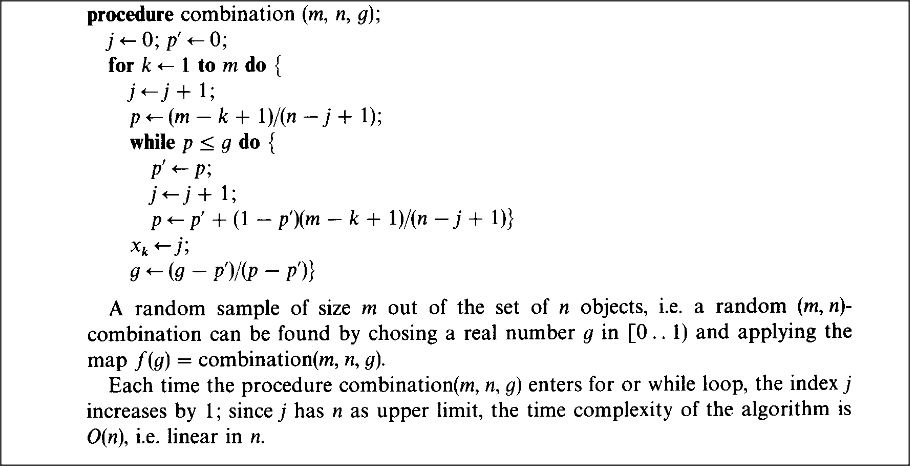

If you need combinations, you may really only need to generate one random number (albeit a potentially large one), which can be used directly to find the m-th combination.

Since this random number represents the index of a particular combination, it follows that it should lie between 0 (inclusive) and C(n,k) (exclusive), i.e. in the range [0, C(n,k) - 1].
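As a rough sketch of how such an index maps to a combination, the following C# class unranks an index m in [0, C(n,k)) into a k-subset of {0, ..., n-1} using the combinatorial number system: m is written greedily as C(c_k, k) + ... + C(c_1, 1) with n > c_k > ... > c_1 >= 0. The names `Combinadic`, `Binomial` and `Unrank` are hypothetical, this is not McCaffrey's code, and it assumes `System.Numerics.BigInteger` because C(n,k) quickly outgrows `long`.

```csharp
using System;
using System.Numerics;

static class Combinadic
{
    // Binomial coefficient C(n, k); returns 0 when k < 0 or k > n.
    public static BigInteger Binomial(int n, int k)
    {
        if (k < 0 || k > n) return BigInteger.Zero;
        k = Math.Min(k, n - k);
        BigInteger result = BigInteger.One;
        for (int i = 1; i <= k; i++)
        {
            // result == C(n - k + i - 1, i - 1) here, so the division is exact.
            result = result * (n - k + i) / i;
        }
        return result;
    }

    // Maps an index m in [0, C(n, k)) to a distinct k-subset of {0, ..., n-1},
    // returned in decreasing order.
    public static int[] Unrank(BigInteger m, int n, int k)
    {
        if (k < 0 || k > n) throw new ArgumentOutOfRangeException(nameof(k));
        if (m < 0 || m >= Binomial(n, k)) throw new ArgumentOutOfRangeException(nameof(m));

        int[] result = new int[k];
        int c = n; // every remaining element must be smaller than c
        for (int i = k; i >= 1; i--)
        {
            // Largest c' < c with C(c', i) <= m; it exists because C(i - 1, i) == 0.
            do { c--; } while (Binomial(c, i) > m);
            result[k - i] = c;
            m -= Binomial(c, i);
        }
        return result;
    }
}
```

For n = 5 and k = 3, index 0 maps to {2, 1, 0} and index 9 to {4, 3, 2}; every index in between yields a different subset.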

Calculating combinadics might take some time as well.

It might just not be worth the trouble; besides, Jerry's and Federico's answers are certainly simpler than implementing combinadics.

However, if you really only need a combination and you are bothered about generating exactly the number of random bits that are needed and no more... ;-)
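A minimal sketch of drawing such a large index, assuming rejection sampling is acceptable: request only as many bits as C(n,k) - 1 needs and retry when the value falls outside the range. Each attempt succeeds with probability above 1/2. The `RandomIndex.Below` helper is hypothetical, and since `System.Random.NextBytes` hands out whole bytes, "exact" here means exact up to byte granularity; `System.Random` is also not a cryptographic source.

```csharp
using System;
using System.Numerics;

static class RandomIndex
{
    // Returns a uniformly distributed BigInteger in [0, bound), drawing only
    // as many random bytes as (bound - 1) needs and rejecting out-of-range values.
    public static BigInteger Below(BigInteger bound, Random rng)
    {
        if (bound <= 0) throw new ArgumentOutOfRangeException(nameof(bound));
        BigInteger max = bound - 1;
        if (max.IsZero) return BigInteger.Zero;

        // Number of bits needed to represent max.
        int bits = 0;
        for (BigInteger t = max; t > 0; t >>= 1) bits++;

        int byteCount = (bits + 7) / 8;
        int excess = byteCount * 8 - bits;             // surplus high bits in the top byte
        byte[] randomBytes = new byte[byteCount];
        byte[] buffer = new byte[byteCount + 1];       // last byte stays 0, keeping the value non-negative

        while (true)
        {
            rng.NextBytes(randomBytes);
            Array.Copy(randomBytes, buffer, byteCount);
            buffer[byteCount - 1] &= (byte)(0xFF >> excess);
            BigInteger candidate = new BigInteger(buffer); // little-endian two's complement
            if (candidate <= max) return candidate;
        }
    }
}
```

Because the masked candidate is uniform over [0, 2^bits) and max >= 2^(bits-1), fewer than two attempts are needed on average.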

While it is not clear whether you want combinations or k-permutations, here is C# code for the latter (yes, we could generate only the complement if x > y/2, but then we would be left with a combination that must still be shuffled to get a real k-permutation):

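```csharp
using System;
using System.Collections.Generic;
using System.Linq;

static class TakeHelper
{
    // Yields `count` distinct elements of `source` in random order
    // (a partial Fisher-Yates shuffle over a copy of the input).
    public static IEnumerable<T> TakeRandom<T>(
        this IEnumerable<T> source, Random rng, int count)
    {
        T[] items = source.ToArray();
        count = count < items.Length ? count : items.Length;

        for (int i = items.Length - 1; count-- > 0; i--)
        {
            // Pick a random element from the not-yet-chosen prefix items[0..i].
            int p = rng.Next(i + 1);
            yield return items[p];
            // Move the last unchosen element into the freed slot.
            items[p] = items[i];
        }
    }
}

class Program
{
    static void Main(string[] args)
    {
        Random rnd = new Random(Environment.TickCount);
        int[] numbers = new int[] { 1, 2, 3, 4, 5, 6, 7 };

        foreach (int number in numbers.TakeRandom(rnd, 3))
        {
            Console.WriteLine(number);
        }
    }
}
```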
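To illustrate the trade-off in the parenthetical remark above, here is a rough sketch of the complement approach (hypothetical names `ComplementHelper` and `TakeRandomViaComplement`, not part of the original answer). It uses the same partial shuffle to pick the n - count positions to drop, but the survivors come out in their original order, so they still need a full shuffle.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

static class ComplementHelper
{
    // Hypothetical variant: choose which elements to drop instead of which to keep.
    public static List<T> TakeRandomViaComplement<T>(IList<T> items, Random rng, int count)
    {
        int n = items.Count;
        count = Math.Min(Math.Max(count, 0), n);
        int drop = n - count;

        // Partial Fisher-Yates over indices picks `drop` distinct positions.
        int[] idx = Enumerable.Range(0, n).ToArray();
        var dropped = new HashSet<int>();
        for (int i = n - 1; i >= n - drop; i--)
        {
            int p = rng.Next(i + 1);
            dropped.Add(idx[p]);
            idx[p] = idx[i];
        }

        // The survivors form a combination, still in their original order.
        var kept = new List<T>(count);
        for (int j = 0; j < n; j++)
            if (!dropped.Contains(j)) kept.Add(items[j]);

        // The extra step: shuffle the combination into a k-permutation.
        for (int i = kept.Count - 1; i > 0; i--)
        {
            int p = rng.Next(i + 1);
            (kept[i], kept[p]) = (kept[p], kept[i]);
        }
        return kept;
    }
}
```

With count > n/2 the dropping phase needs fewer than count draws, but the final shuffle brings the total to n - 1 draws, versus count draws for `TakeRandom`.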

Here is another, more elaborate implementation that generates k-permutations, which I had lying around. I believe it is, in a way, an improvement over existing algorithms if you only need to iterate over the results. While it also needs to generate x random numbers, it uses only O(min(y/2, x)) memory in the process:

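```csharp
using System;
using System.Collections.Generic;

static class UniqueRandomHelper
{
    /// <summary>
    /// Generates unique random numbers.
    /// </summary>
    /// <remarks>
    /// Worst case memory usage is O(min((emax-imin)/2, num)).
    /// </remarks>
    /// <param name="random">Random source</param>
    /// <param name="imin">Inclusive lower bound</param>
    /// <param name="emax">Exclusive upper bound</param>
    /// <param name="num">Number of integers to generate; must not exceed emax - imin</param>
    /// <returns>Sequence of unique random numbers</returns>
    public static IEnumerable<int> UniqueRandoms(
        Random random, int imin, int emax, int num)
    {
        // Without this check, Random.Next(emax, emax) would return emax,
        // which lies outside the requested range.
        if (num < 0 || num > (long)emax - imin)
            throw new ArgumentOutOfRangeException(nameof(num));

        int dictsize = num;
        long half = (emax - (long)imin + 1) / 2;
        if (half < dictsize)
            dictsize = (int)half;

        // Sparse view of the shuffled array: trans[j] holds the value at
        // position j only where it differs from j itself.
        Dictionary<int, int> trans = new Dictionary<int, int>(dictsize);

        for (int i = 0; i < num; i++)
        {
            int current = imin + i;
            int r = random.Next(current, emax);

            // right = value currently at position r
            int right;
            if (!trans.TryGetValue(r, out right))
            {
                right = r;
            }

            // left = value currently at position current; that position is
            // final after this step, so its entry can be dropped.
            int left;
            if (trans.TryGetValue(current, out left))
            {
                trans.Remove(current);
            }
            else
            {
                left = current;
            }

            // Swap: position r now holds the value that was at current.
            if (r > current)
            {
                trans[r] = left;
            }

            yield return right;
        }
    }
}
```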

The general idea is to do a partial Fisher-Yates shuffle and memorize the transpositions in a dictionary, so that only positions whose value differs from the identity permutation are stored.
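For comparison, here is a sketch of the same partial Fisher-Yates shuffle over an explicit array (the names `DenseShuffle` and `UniqueRandomsDense` are hypothetical). It makes the same `random.Next(current, emax)` call per step, so for the same seed it should yield the same sequence as `UniqueRandoms`, but it stores all emax - imin values, which is exactly what the dictionary avoids.

```csharp
using System;
using System.Collections.Generic;

static class DenseShuffle
{
    // Partial Fisher-Yates shuffle over an explicit array holding [imin, emax).
    // Uses O(emax - imin) memory, unlike the dictionary-based version.
    public static IEnumerable<int> UniqueRandomsDense(Random random, int imin, int emax, int num)
    {
        if (num < 0 || num > (long)emax - imin)
            throw new ArgumentOutOfRangeException(nameof(num));

        int[] a = new int[emax - imin];
        for (int j = 0; j < a.Length; j++) a[j] = imin + j;

        for (int i = 0; i < num; i++)
        {
            int current = imin + i;
            int r = random.Next(current, emax);
            int right = a[r - imin];
            // Position r receives the value at position current; position
            // current is final and is never read again.
            a[r - imin] = a[current - imin];
            yield return right;
        }
    }
}
```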

It has not been published anywhere, nor has it received any peer review whatsoever. I believe it is more of a curiosity than something of practical value. Nonetheless, I am very open to criticism and would like to know if you find anything wrong with it, so please consider leaving a comment before downvoting.