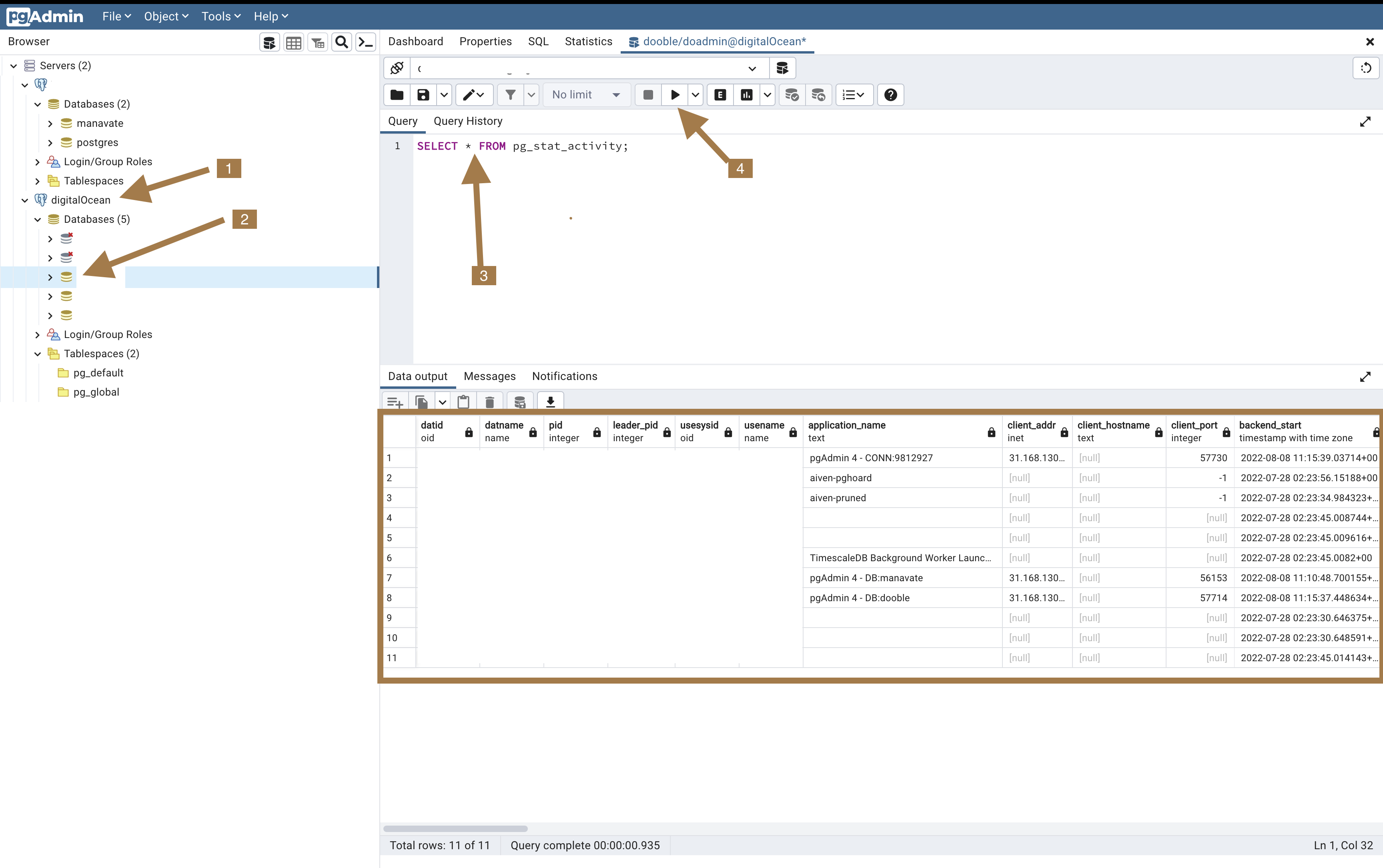

I am using PostgreSQL in my Node.js backend service. All of a sudden, when I start the service, I get the error below:

connection error error: sorry, too many clients already.

PostgreSQL connection config:

const pg = require("pg");

const client = new pg.Client({
  host: "txslmxxxda6z",
  user: "mom",
  password: "mom",
  database: "mom", // pg reads `database`; a `db` key is ignored
  port: 5025
});

Because of this error I cannot query the database at all, and I have not been able to fix it. Can you please suggest a solution?

max_connections. – Monition
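
To follow up on the `max_connections` hint: the error means the server has already handed out every connection slot it allows. A minimal sketch of one possible approach is below, assuming the `pg` package is installed. It replaces per-request `pg.Client` instances with a single shared `pg.Pool` capped well under the server limit, and adds a small helper to compare the limit with current usage. The cap of 5, the timeout values, and the names `poolConfig`, `createPool`, and `connectionUsage` are illustrative assumptions, not values taken from the question.

```javascript
// Connection details copied from the question; pool sizes are assumed values.
const poolConfig = {
  host: "txslmxxxda6z",
  user: "mom",
  password: "mom",
  database: "mom",
  port: 5025,
  max: 5,                        // assumed cap, keep it well below max_connections
  idleTimeoutMillis: 10000,      // close clients that sit idle for 10 s
  connectionTimeoutMillis: 5000, // fail fast instead of waiting forever for a slot
};

// Create one pool per process and reuse it everywhere.
function createPool() {
  // Required here so `pg` is loaded only when a real pool is created.
  const { Pool } = require("pg");
  return new Pool(poolConfig);
}

// Report the server limit and the number of sessions currently listed.
// Works with any object exposing an async query(sql) method, such as a pg Pool.
async function connectionUsage(pool) {
  const limit = await pool.query("SHOW max_connections");
  const used = await pool.query(
    "SELECT count(*)::int AS used FROM pg_stat_activity"
  );
  return {
    max: Number(limit.rows[0].max_connections),
    used: used.rows[0].used, // approximate: may include background processes
  };
}
```

Call `createPool()` once at startup, run queries through `pool.query(...)` so clients are returned to the pool automatically, and call `pool.end()` on shutdown. If `used` is already close to `max` before the service starts, something else is holding the connections (for example other services or leftover processes), and the limit itself would have to be raised in the server configuration or those sessions closed.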