I run Airflow in a managed Cloud Composer environment (version 1.9.0), which runs on a Kubernetes 1.10.9-gke.5 cluster.

All my DAGs run daily at 3:00 AM or 4:00 AM. But sometimes in the morning, I find that a few tasks failed during the night for no apparent reason.

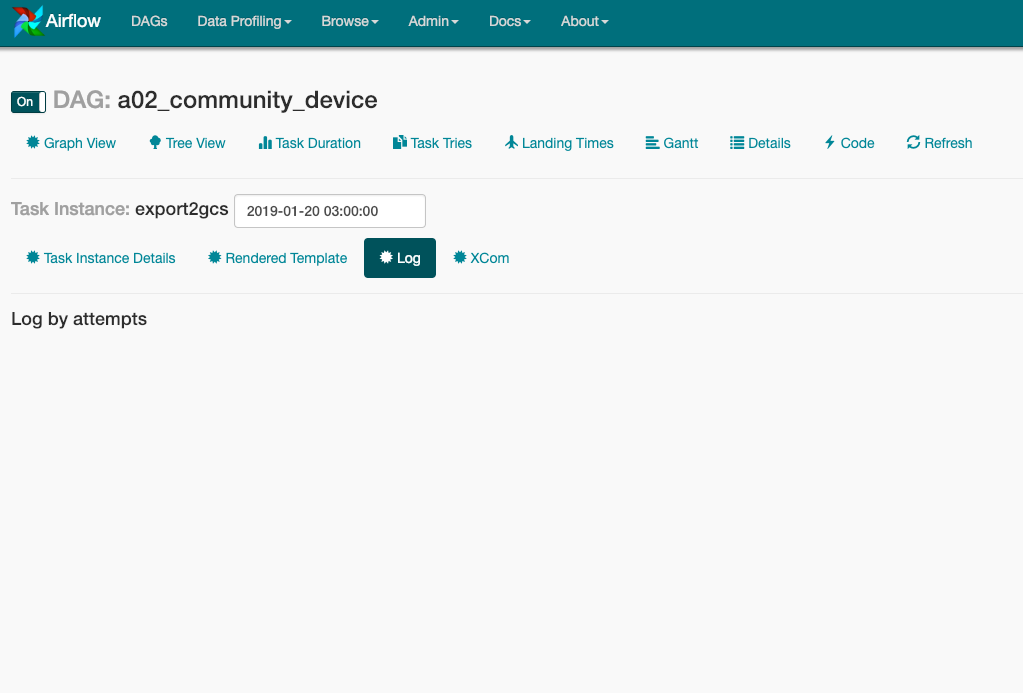

When I check the log in the UI, it is empty, and there is no log in the log folder of the GCS bucket either.

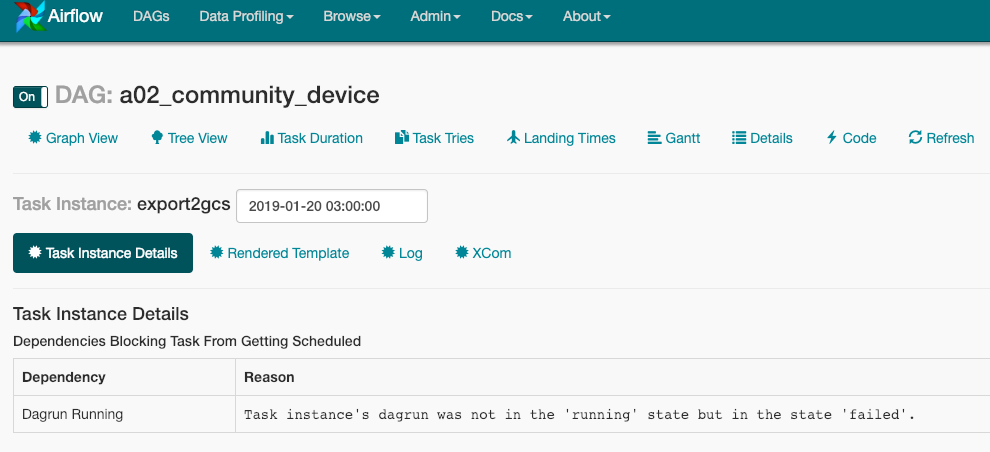

In the task instance details, it reads "Dependencies Blocking Task From Getting Scheduled", but the only dependency listed is the DAG run itself.

Although the DAG is configured with 5 retries and an email notification, it does not look as if any retry took place, and I haven't received an email about the failure.
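
For context, the retry and email settings are passed through `default_args`, roughly as in the sketch below. Only the 5 retries and the failure email reflect the actual setup; the owner, address, start date, retry delay, and DAG name are placeholders rather than the real values.

```python
from datetime import datetime, timedelta

# Illustrative default_args: only `retries` and `email_on_failure`
# mirror the real DAG; everything else is a placeholder.
default_args = {
    "owner": "airflow",                   # placeholder
    "start_date": datetime(2018, 1, 1),   # placeholder
    "email": ["alerts@example.com"],      # placeholder address
    "email_on_failure": True,             # expect an email when a task fails
    "retries": 5,                         # expect up to 5 retries per task
    "retry_delay": timedelta(minutes=5),  # assumed delay between retries
}

# These would be passed to the DAG along the lines of:
#   DAG("my_dag", default_args=default_args, schedule_interval="0 3 * * *")
# where "my_dag" is a hypothetical name.
```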

I usually just clear the task instance, and it runs successfully on the first try.

Has anyone encountered a similar problem?