I am struggling to find a way to use S3DistCp in my AWS EMR Cluster.

Some old examples that show how to add S3DistCp as an EMR step use the elastic-mapreduce command, which is no longer used.

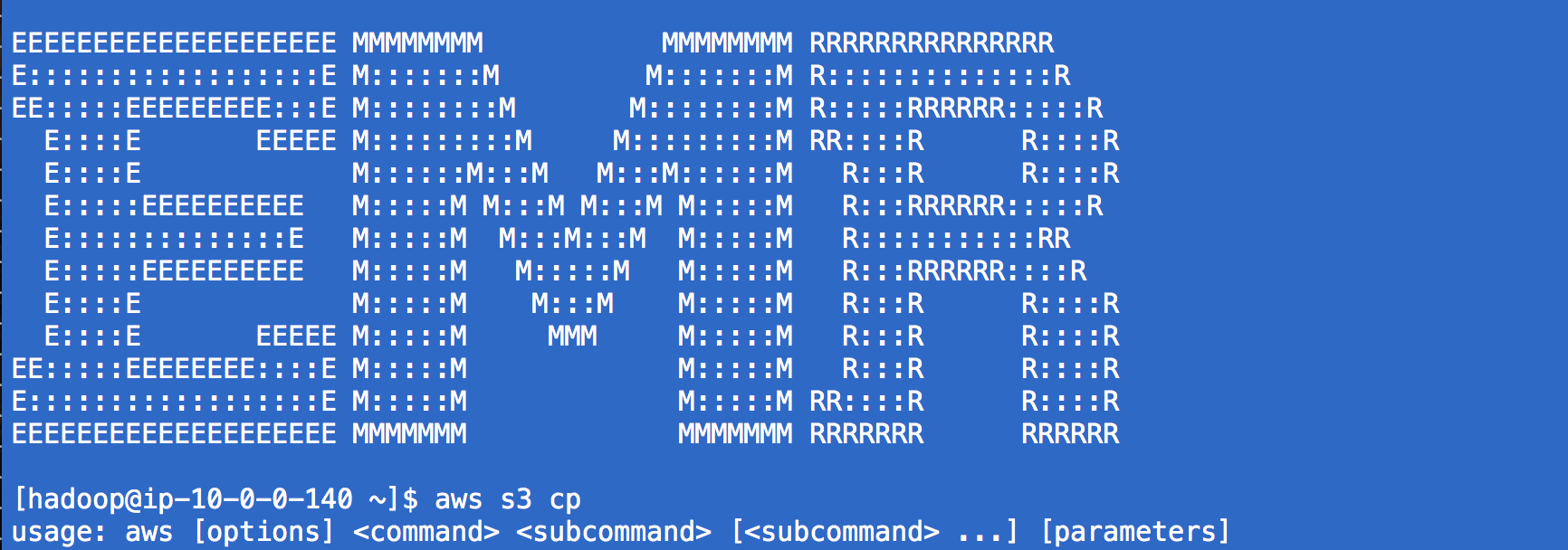

Other sources suggest using the s3-dist-cp command, which is not found on current EMR clusters. Even the official documentation (online and the 2016 EMR Developer Guide PDF) presents an example like this:

aws emr add-steps --cluster-id j-3GYXXXXXX9IOK --steps Type=CUSTOM_JAR,Name="S3DistCp step",Jar=/home/hadoop/lib/emr-s3distcp-1.0.jar,Args=["--s3Endpoint,s3-eu-west-1.amazonaws.com","--src,s3://mybucket/logs/j-3GYXXXXXX9IOJ/node/","--dest,hdfs:///output","--srcPattern,.*[azA-Z,]+"]

But there is no lib folder under /home/hadoop. I found some Hadoop libraries in /usr/lib/hadoop/lib, but I cannot find s3distcp anywhere.

Then I found that some libraries are available in S3 buckets. For example, from this question, I found this path: s3://us-east-1.elasticmapreduce/libs/s3distcp/1.latest/s3distcp.jar. This seemed to be a step in the right direction: when I added a new step to a running EMR cluster from the AWS interface with the parameters below, the step actually started (which it did not in previous attempts), but it failed after ~15 seconds:

JAR location: s3://us-east-1.elasticmapreduce/libs/s3distcp/1.latest/s3distcp.jar

Main class: None

Arguments: --s3Endpoint s3-eu-west-1.amazonaws.com --src s3://source-bucket/scripts/ --dest hdfs:///output

Action on failure: Continue

This resulted in the following error:

    Exception in thread "main" java.lang.RuntimeException: Unable to retrieve Hadoop configuration for key fs.s3n.awsAccessKeyId
        at com.amazon.external.elasticmapreduce.s3distcp.ConfigurationCredentials.getConfigOrThrow(ConfigurationCredentials.java:29)
        at com.amazon.external.elasticmapreduce.s3distcp.ConfigurationCredentials.<init>(ConfigurationCredentials.java:35)
        at com.amazon.external.elasticmapreduce.s3distcp.S3DistCp.createInputFileListS3(S3DistCp.java:85)
        at com.amazon.external.elasticmapreduce.s3distcp.S3DistCp.createInputFileList(S3DistCp.java:60)
        at com.amazon.external.elasticmapreduce.s3distcp.S3DistCp.run(S3DistCp.java:529)
        at com.amazon.external.elasticmapreduce.s3distcp.S3DistCp.run(S3DistCp.java:216)
        at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)
        at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:84)
        at com.amazon.external.elasticmapreduce.s3distcp.Main.main(Main.java:12)
        at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
        at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
        at java.lang.reflect.Method.invoke(Method.java:606)
        at org.apache.hadoop.util.RunJar.run(RunJar.java:221)
        at org.apache.hadoop.util.RunJar.main(RunJar.java:136)

I thought this might be caused by a mismatch between my S3 location (the same region as the endpoint) and the location of the s3distcp JAR, which came from us-east-1. I replaced it with the eu-west-1 one and still got the same authentication error. I have used a similar setup to run my Scala scripts (a Custom JAR step with the "command-runner.jar" script and "spark-submit" as the first argument, to run a Spark job), and that works; I have not had this authentication problem before.

What is the simplest way to copy a file from S3 to an EMR cluster? Either by adding an EMR step with the AWS SDK (for Go), or somehow from inside the Scala Spark script? Doing it from the AWS EMR interface would also be fine, but not from the CLI, as I need it to be automated.
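For reference, here is a minimal sketch of the kind of step I have in mind. It assumes a release where the s3-dist-cp command is installed on the cluster and can be launched through command-runner.jar, the same way spark-submit is. The helper name `s3DistCpArgs` is hypothetical, and the bucket and endpoint are the ones from my failed attempt above. It only builds the argument list and uses nothing outside the standard library.

```go
package main

import (
	"fmt"
	"strings"
)

// s3DistCpArgs (hypothetical helper) builds the argument list for a
// command-runner.jar step that invokes s3-dist-cp.
// endpoint is optional; pass "" to leave --s3Endpoint out.
func s3DistCpArgs(src, dest, endpoint string) []string {
	args := []string{"s3-dist-cp"}
	if endpoint != "" {
		args = append(args, "--s3Endpoint="+endpoint)
	}
	return append(args, "--src="+src, "--dest="+dest)
}

func main() {
	// Assumed values, taken from the step attempted above.
	args := s3DistCpArgs(
		"s3://source-bucket/scripts/",
		"hdfs:///output",
		"s3-eu-west-1.amazonaws.com",
	)
	// The JAR for the step would be command-runner.jar; these are its arguments.
	fmt.Println("Jar:  command-runner.jar")
	fmt.Println("Args:", strings.Join(args, " "))
}
```

With the AWS SDK for Go, I would expect these values to go into the `Jar` and `Args` fields of a `HadoopJarStepConfig` inside a `StepConfig` passed to `AddJobFlowSteps`, but I have not verified that this avoids the credentials error.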

The s3-dist-cp command was already available on my 6.11 cluster with Hadoop (and Spark) installed, so maybe they brought it back, or maybe you need to select Hadoop as an application to include on the cluster; I don't know. FWIW, my issue was forgetting to put /usr/hadoop (I forget the exact spelling now) in my HDFS file path. – Towandatoward