First of all, my solution is based on @DrHouseofSQL and @Bhouse answers, so you have to read @DrHouseofSQL answer first then @BHouse answer then continue with this answer

Problem

Note : Page name will be dynamic and Column position may change (eg: Column "ABC may exist in first row or second row or ...

This situation is a little complex and can be solved using the following workaround:

Solution Overview

- Add a script task before the data flow task that import the data

- You have to use the script task to open the excel file and get the Worksheet name and the header row

- Build the Query and store it in a variable

- in the second Data Flow task you have to use the query stored above as source (Note that you have to set

Delay Validation property to true)

Solution Details

- First create an SSIS variable of type string (i.e. @[User::strQuery])

- Add another variable that contains the Excel File Path (i.e. @[User::ExcelFilePath])

- Add A Script Task, and select

@[User::strQuery] as ReadWrite Variable, and @[User::ExcelFilePath] as ReadOnly Variable (in the script task window)

- Set the Script Language to VB.Net and in the script editor window write the following script:

Note: you have to imports System.Data.OleDb

In the code below, we search the excel first 15 rows to find the header, you can increase the number if the header can be found after the 15 rows. Also i assumed that the columns range is from A to I

m_strExcelPath = Dts.Variables.Item("ExcelFilePath").Value.ToString

Dim strSheetname As String = String.Empty

Dim intFirstRow As Integer = 0

m_strExcelConnectionString = Me.BuildConnectionString()

Try

Using OleDBCon As New OleDbConnection(m_strExcelConnectionString)

If OleDBCon.State <> ConnectionState.Open Then

OleDBCon.Open()

End If

'Get all WorkSheets

m_dtschemaTable = OleDBCon.GetOleDbSchemaTable(OleDbSchemaGuid.Tables,

New Object() {Nothing, Nothing, Nothing, "TABLE"})

'Loop over work sheet to get the first one (the excel may contains temporary sheets or deleted ones

For Each schRow As DataRow In m_dtschemaTable.Rows

strSheetname = schRow("TABLE_NAME").ToString

If Not strSheetname.EndsWith("_") AndAlso strSheetname.EndsWith("$") Then

Using cmd As New OleDbCommand("SELECT * FROM [" & strSheetname & "A1:I15]", OleDBCon)

Dim dtTable As New DataTable("Table1")

cmd.CommandType = CommandType.Text

Using daGetDataFromSheet As New OleDbDataAdapter(cmd)

daGetDataFromSheet.Fill(dtTable)

For intCount As Integer = 0 To 15

If Not String.IsNullOrEmpty(dtTable.Rows(intCount)(0).ToString) Then

'+1 because datatable is zero based indexed, +1 because we want to start from the second row

intFirstRow = intCount + 2

End If

Next

End Using

If intFirstRow = 0 Then Throw New Exception("header not found")

End Using

'when the first correct sheet is found there is no need to check others

Exit For

End If

Next

OleDBCon.Close()

End Using

Catch ex As Exception

Throw New Exception(ex.Message, ex)

End Try

Dts.Variables.Item("strQuery").Value = "SELECT * FROM [" & strSheetname & "A" & intFirstRow.ToString & ":I]"

Dts.TaskResult = ScriptResults.Success

End Sub

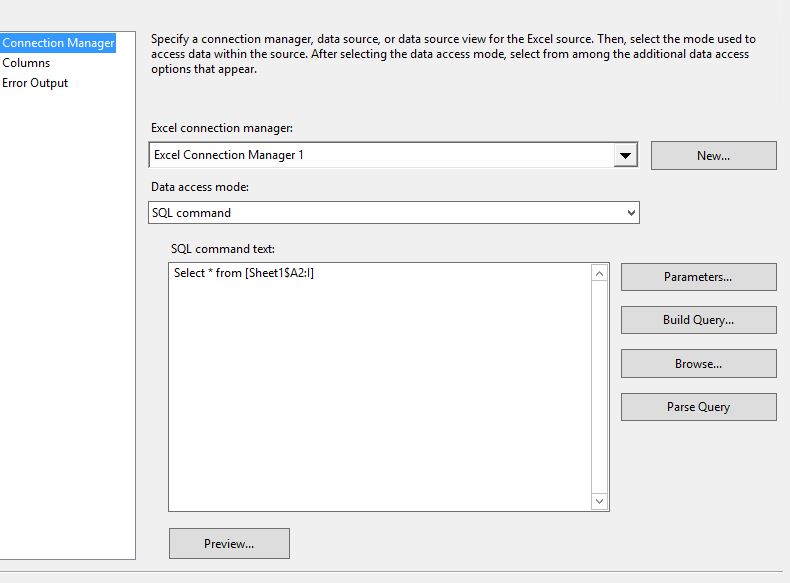

- Then you have to add an Excel connection manager, and choose the excel file that you want to import (just select a sample to define the metadata for the first time only)

- Assign a default value of

Select * from [Sheet1$A2:I] to the variable @[User::strQuery]

- In the Data Flow Task add an Excel Source, choose SQL Command from variable, and select

@[User::strQuery]

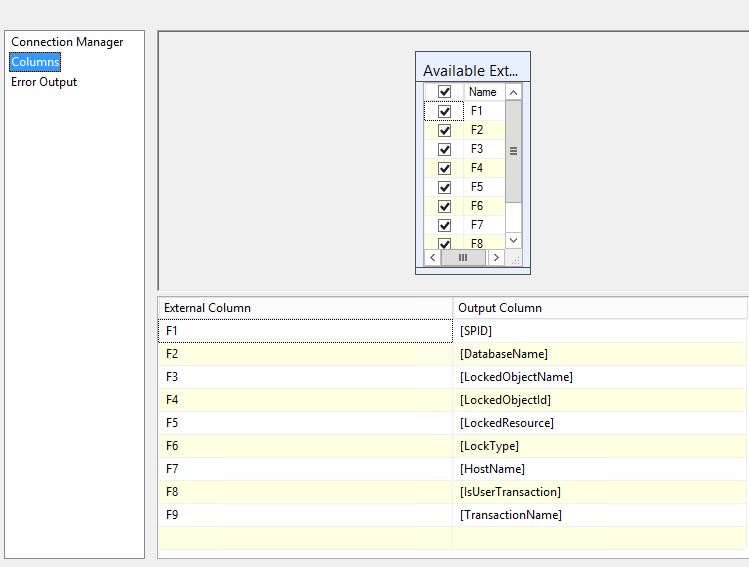

- Go to the columns tab and name the columns in the same way that @BHouse suggested

![Image]() Image taken from @BHouse answer

Image taken from @BHouse answer

- Set the DataFlow Task

Delay Validation property to True

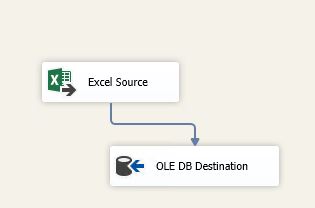

- Add other components to DataFlow Task

UPDATE 1:

From the OP comments: sometimes excel with empty data will come.(i.e) we have only header row not not data... in that case it fails entire task

Solution:

If your excel file contains no data (only header) you have to do these steps:

- Add an SSIS variable of type boolean *(i.e.

@[User::ImportFile])

- Add

@[User::ImportFile] to the script task ReadWrite variables

- In the Script Task check if the file contains rows

- If yes Set

@[User::ImportFile] = True, else @[User::ImportFile] = False

- Double Click on the arrow (precedence constraint) that connect the script task to the DataFlow

- Set its type to Constraint and Expression

Write the following expression

@[User::ImportFile] == True

Note: The new Script Task code is:

m_strExcelPath = Dts.Variables.Item("ExcelFilePath").Value.ToString

Dim strSheetname As String = String.Empty

Dim intFirstRow As Integer = 0

m_strExcelConnectionString = Me.BuildConnectionString()

Try

Using OleDBCon As New OleDbConnection(m_strExcelConnectionString)

If OleDBCon.State <> ConnectionState.Open Then

OleDBCon.Open()

End If

'Get all WorkSheets

m_dtschemaTable = OleDBCon.GetOleDbSchemaTable(OleDbSchemaGuid.Tables,

New Object() {Nothing, Nothing, Nothing, "TABLE"})

'Loop over work sheet to get the first one (the excel may contains temporary sheets or deleted ones

For Each schRow As DataRow In m_dtschemaTable.Rows

strSheetname = schRow("TABLE_NAME").ToString

If Not strSheetname.EndsWith("_") AndAlso strSheetname.EndsWith("$") Then

Using cmd As New OleDbCommand("SELECT * FROM [" & strSheetname & "A1:I15]", OleDBCon)

Dim dtTable As New DataTable("Table1")

cmd.CommandType = CommandType.Text

Using daGetDataFromSheet As New OleDbDataAdapter(cmd)

daGetDataFromSheet.Fill(dtTable)

For intCount As Integer = 0 To 15

If Not String.IsNullOrEmpty(dtTable.Rows(intCount)(0).ToString) Then

'+1 because datatable is zero based indexed, +1 because we want to start from the second row

intFirstRow = intCount + 2

End If

Next

End Using

End Using

'when the first correct sheet is found there is no need to check others

Exit For

End If

Next

OleDBCon.Close()

End Using

Catch ex As Exception

Throw New Exception(ex.Message, ex)

End Try

If intFirstRow = 0 OrElse _

intFirstRow > dtTable.Rows.Count Then

Dts.Variables.Item("ImportFile").Value = False

Else

Dts.Variables.Item("ImportFile").Value = True

End If

Dts.Variables.Item("strQuery").Value = "SELECT * FROM [" & strSheetname & "A" & intFirstRow.ToString & ":I]"

Dts.TaskResult = ScriptResults.Success

End Sub

UPDATE 2:

From the OP comments: is there any other work around available to process the data flow task without skipping all data flow task,Actually one of the task will log the filename and data count and all, which are missing here

Solution:

- Just add another DATA FLOW task

- Connect this dataflow with the script task using another connector and with the expression

@[User::ImportFile] == False (same steps of the first connector)

- In the DataFlow Task add a SCript Component as a Source

- Create the Output columns you want to import to Logs

- Create a Row that contains the information you need to import

- Add the Log Destination

Or Instead of adding another Data Flow Task, you can add an Execute SQL Task to insert a row in the Log Table