A bit late, but I'd like to contribute another approach to this problem. It is based on image indexing (boolean masks), which is faster than looping through the image pixel by pixel as the accepted answer does.

I timed both versions to illustrate this. Take a look at the code below:

    import cv2
    from matplotlib import pyplot as plt

    # Reading a copy of the image for the montage (not part of the timed work)
    original = cv2.imread('imgs/opencv.png')

    # Starting time measurement
    e1 = cv2.getTickCount()

    # Reading the image
    img = cv2.imread('imgs/opencv.png')

    # Converting the image to grayscale
    imgGray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

    # Binary image marking the bright pixels (gray value above 175 becomes 255)
    ret, imgBinAll = cv2.threshold(imgGray, 175, 255, cv2.THRESH_BINARY)

    # Binary image in which only the near-black pixels (the text) stay at 0
    ret, imgBinText = cv2.threshold(imgGray, 5, 255, cv2.THRESH_BINARY)

    # Changing white pixels from the original image to black
    img[imgBinAll == 255] = [0, 0, 0]

    # Changing black pixels from the original image to white
    img[imgBinText == 0] = [255, 255, 255]

    # Finishing time measurement
    e2 = cv2.getTickCount()
    t = (e2 - e1) / cv2.getTickFrequency()
    print(f'Time spent in seconds: {t}')

At this point I stopped timing, because the next step only plots the montage. The code follows:

    # Plotting the montage. OpenCV loads images as BGR while matplotlib
    # expects RGB, so the color images are converted before being shown.
    plt.subplot(1, 5, 1)
    plt.imshow(cv2.cvtColor(original, cv2.COLOR_BGR2RGB))
    plt.title('original')
    plt.xticks([])
    plt.yticks([])

    plt.subplot(1, 5, 2)
    plt.imshow(imgGray, 'gray')
    plt.title('grayscale')
    plt.xticks([])
    plt.yticks([])

    plt.subplot(1, 5, 3)
    plt.imshow(imgBinAll, 'gray')
    plt.title('binary - all')
    plt.xticks([])
    plt.yticks([])

    plt.subplot(1, 5, 4)
    plt.imshow(imgBinText, 'gray')
    plt.title('binary - text')
    plt.xticks([])
    plt.yticks([])

    plt.subplot(1, 5, 5)
    plt.imshow(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))
    plt.title('final result')
    plt.xticks([])
    plt.yticks([])

    plt.show()

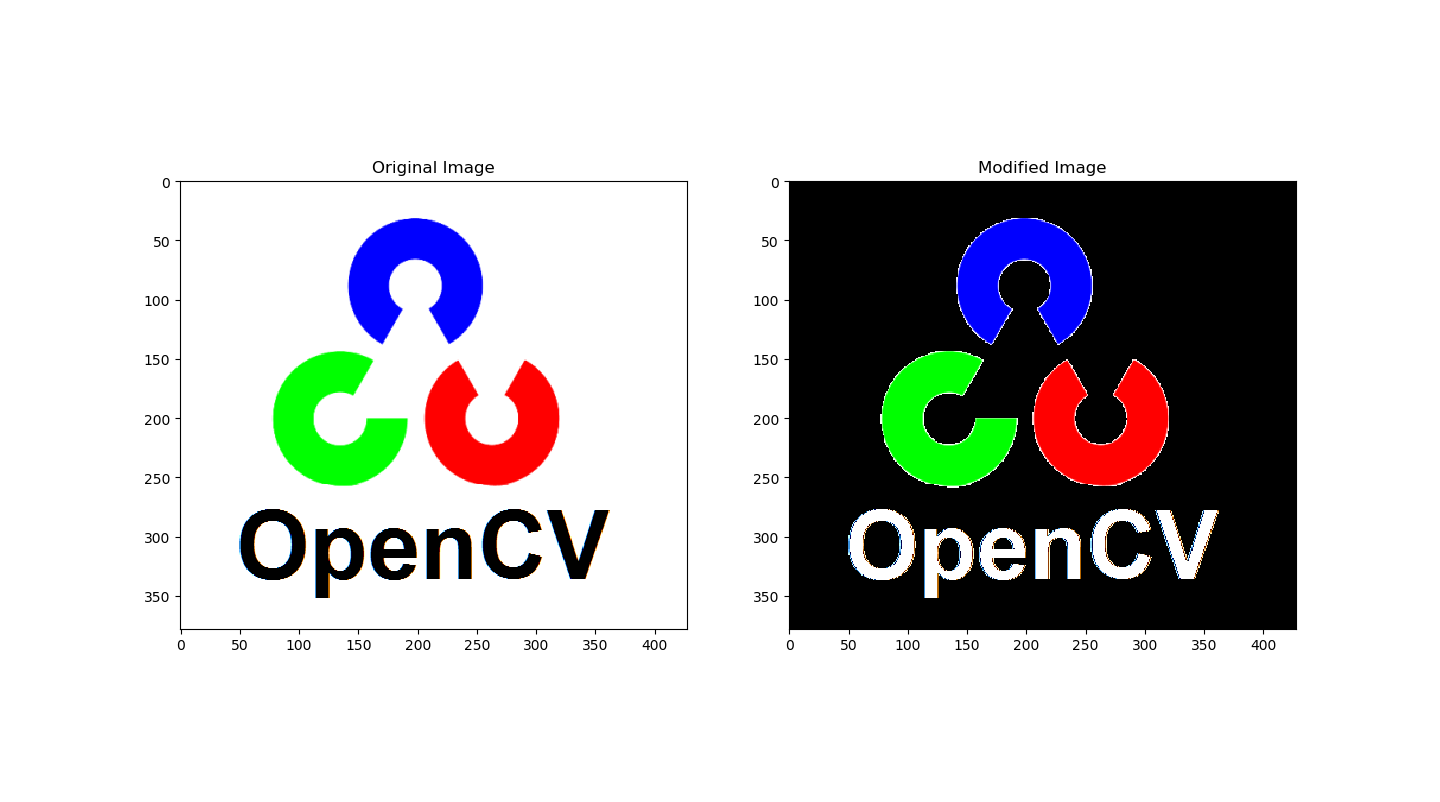

That is the final result:

Montage showing all steps of the proposed approach

And this is the time consumed (printed in the console):

Time spent in seconds: 0.008526025

To compare both approaches fairly, I commented out the line in the accepted answer's code where the image is resized, and I also stopped timing before the imshow call. These were the results:

Time spent in seconds: 1.837972522

Final result of the looping approach
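To make the comparison easier to reproduce without the image file, here is a minimal sketch that uses NumPy only. The helper names `recolor_loop` and `recolor_mask` and the random test image are my own assumptions for illustration; they are not taken from the accepted answer. It applies the same rule both ways and checks that the results match. The timings it prints will vary by machine.

```python
import time
import numpy as np

def recolor_loop(img, gray, thresh, color):
    """Per-pixel Python loop: recolor pixels whose gray value exceeds thresh."""
    out = img.copy()
    rows, cols = gray.shape
    for y in range(rows):
        for x in range(cols):
            if gray[y, x] > thresh:
                out[y, x] = color
    return out

def recolor_mask(img, gray, thresh, color):
    """Boolean-mask indexing: the same rule applied to all pixels at once."""
    out = img.copy()
    out[gray > thresh] = color
    return out

# Synthetic stand-in for a real image (hypothetical data)
rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(200, 300, 3), dtype=np.uint8)
gray = img.mean(axis=2).astype(np.uint8)

t0 = time.perf_counter()
looped = recolor_loop(img, gray, 175, (0, 0, 0))
t1 = time.perf_counter()
masked = recolor_mask(img, gray, 175, (0, 0, 0))
t2 = time.perf_counter()

print("same result:", np.array_equal(looped, masked))
print(f"loop: {t1 - t0:.4f} s, mask: {t2 - t1:.4f} s")
```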

If you examine both images you'll see some contour differences. In image processing, efficiency is often key, so it is a good idea to save time wherever possible. This approach can be adapted to different situations; take a look at the threshold documentation.