Dlib C++ can detect landmarks and estimate the face pose very well. However, how can I get the directions of the 3D coordinate axes (x, y, z) of the head pose?

How to get the 3D coordinate axes of head pose estimation in Dlib C++


This question already has an accepted answer. Nevertheless, just for future reference, there is also this great blog post on the subject: learnopencv.com/head-pose-estimation-using-opencv-and-dlib –

Handel

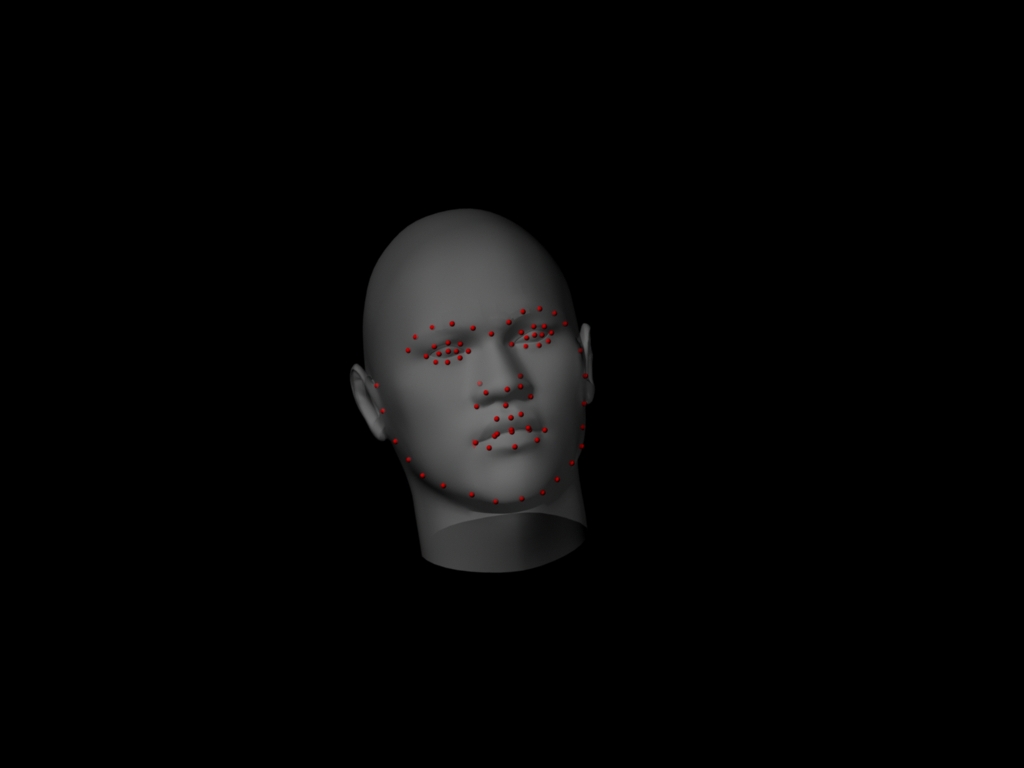

I was facing the same issue a while back. I searched and found one or two useful blog posts; this link will give you an overview of the techniques involved. If you only need to calculate the 3D pose as numeric values, you can skip the OpenGL rendering part. If you want visual feedback, you can try OpenGL as well, but as a beginner I would suggest ignoring the OpenGL part for now. The smallest working code snippet, extracted from the GitHub page, looks something like this:

```cpp
#include <algorithm>
#include <cstdio>
#include <string>
#include <vector>
#include <opencv2/opencv.hpp>

int main(int argc, char** argv)
{
    if (argc < 2) { std::printf("usage: %s <image>\n", argv[0]); return 1; }

    // Reading image using OpenCV, you may use dlib as well.
    const std::string imagePath = argv[1];
    cv::Mat img = cv::imread(imagePath);
    if (img.empty()) { std::printf("could not read %s\n", imagePath.c_str()); return 1; }

    // Labelling the 3D points derived from a 3D model of a human face.
    // You may replace these points with those of your own 3D head model, if any.
    const std::vector<cv::Point3f> modelPoints = {
        cv::Point3f(2.37427f, 110.322f, 21.7776f),   // l eye   (v 314)
        cv::Point3f(70.0602f, 109.898f, 20.8234f),   // r eye   (v 0)
        cv::Point3f(36.8301f, 78.3185f, 52.0345f),   // nose    (v 1879)
        cv::Point3f(14.8498f, 51.0115f, 30.2378f),   // l mouth (v 1502)
        cv::Point3f(58.1825f, 51.0115f, 29.6224f),   // r mouth (v 695)
        cv::Point3f(-61.8886f, 127.797f, -89.4523f), // l ear   (v 2011)
        cv::Point3f(127.603f, 126.9f, -83.9129f)     // r ear   (v 1138)
    };

    // Labelling the positions of the corresponding feature points on the input
    // image, in the same order as modelPoints.
    const std::vector<cv::Point2f> srcImagePoints = {
        cv::Point2f(442, 442), // left eye
        cv::Point2f(529, 426), // right eye
        cv::Point2f(501, 479), // nose
        cv::Point2f(469, 534), // left lip corner
        cv::Point2f(538, 521), // right lip corner
        cv::Point2f(349, 457), // left ear
        cv::Point2f(578, 415)  // right ear
    };

    // Approximate intrinsics: focal length = larger image side, principal point
    // at the image centre, no lens distortion.
    const double max_d = std::max(img.rows, img.cols);
    const cv::Mat camMatrix = (cv::Mat_<double>(3, 3) << max_d, 0, img.cols / 2.0,
                                                         0, max_d, img.rows / 2.0,
                                                         0, 0, 1.0);
    const cv::Mat distCoeffs = cv::Mat::zeros(4, 1, CV_64F);

    // EPnP with useExtrinsicGuess = false ignores any initial rvec/tvec,
    // so they can start empty.
    cv::Mat rvec, tvec;
    cv::solvePnP(modelPoints, srcImagePoints, camMatrix, distCoeffs,
                 rvec, tvec, false, cv::SOLVEPNP_EPNP);

    // Rotation vector -> 3x3 rotation matrix.
    cv::Mat rotM;
    cv::Rodrigues(rvec, rotM);
    const double* r = rotM.ptr<double>();
    const double* t = tvec.ptr<double>();

    std::printf("rotation mat:\n%.3f %.3f %.3f\n%.3f %.3f %.3f\n%.3f %.3f %.3f\n",
                r[0], r[1], r[2], r[3], r[4], r[5], r[6], r[7], r[8]);
    std::printf("trans vec:\n%.3f %.3f %.3f\n", t[0], t[1], t[2]);

    // Decompose the 3x4 matrix [R | t]; eav receives the Euler angles in degrees.
    cv::Mat projMat;
    cv::hconcat(rotM, tvec, projMat);
    cv::Mat K, R, T, Rx, Ry, Rz;
    cv::Vec3d eav;
    cv::decomposeProjectionMatrix(projMat, K, R, T, Rx, Ry, Rz, eav);

    std::printf("Face Rotation Angle: %.5f %.5f %.5f\n", eav[0], eav[1], eav[2]);
    return 0;
}
```

Output (Euler angles about the **X**, **Y** and **Z** axes, in degrees):

Face Rotation Angle: 171.44027 -8.72583 -9.90596
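Since the question asks for the directions of the head's x, y, z axes rather than Euler angles: the columns of the rotation matrix rotM obtained from cv::Rodrigues(rvec, rotM) are the 3D model's x, y and z axes expressed in camera coordinates. To draw them, project the model origin (tvec) and the points tvec + L * column with the pinhole camera; in OpenCV, cv::projectPoints on (0,0,0), (L,0,0), (0,L,0), (0,0,L) with rvec, tvec, camMatrix and the distortion coefficients does the same, and cv::line draws the result. The sketch below spells out that math in plain C++ so it is visible; Vec3, Mat3 and the function names are hypothetical helpers, not dlib or OpenCV API.

```cpp
#include <array>
#include <cmath>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>; // row-major: R[row][col]

// Rotation vector (unit axis * angle in radians, as returned by solvePnP) to a
// rotation matrix via Rodrigues' formula: R = cos(th) I + (1 - cos(th)) k k^T + sin(th) [k]x.
Mat3 rodrigues(const Vec3& rv)
{
    const double th = std::sqrt(rv[0] * rv[0] + rv[1] * rv[1] + rv[2] * rv[2]);
    if (th < 1e-12) return Mat3{{{1, 0, 0}, {0, 1, 0}, {0, 0, 1}}}; // no rotation
    const double kx = rv[0] / th, ky = rv[1] / th, kz = rv[2] / th;
    const double c = std::cos(th), s = std::sin(th), v = 1.0 - c;
    return Mat3{{{c + kx * kx * v,      kx * ky * v - kz * s, kx * kz * v + ky * s},
                 {ky * kx * v + kz * s, c + ky * ky * v,      ky * kz * v - kx * s},
                 {kz * kx * v - ky * s, kz * ky * v + kx * s, c + kz * kz * v}}};
}

// Column j of R is the head model's j-th axis (0 = x, 1 = y, 2 = z) as a unit
// direction vector in camera coordinates.
Vec3 headAxis(const Mat3& R, int j) { return {R[0][j], R[1][j], R[2][j]}; }

// Pinhole projection with focal length f and principal point (cx, cy), no
// distortion (the same model as camMatrix in the answer). Assumes p[2] > 0.
std::array<double, 2> project(const Vec3& p, double f, double cx, double cy)
{
    return {f * p[0] / p[2] + cx, f * p[1] / p[2] + cy};
}

// 2D endpoint of the j-th axis of length `length` (model units), starting at
// the model origin, whose camera-space position is tvec.
std::array<double, 2> axisEndpoint(const Mat3& R, const Vec3& tvec, int j,
                                   double length, double f, double cx, double cy)
{
    const Vec3 a = headAxis(R, j);
    const Vec3 p = {tvec[0] + length * a[0], tvec[1] + length * a[1],
                    tvec[2] + length * a[2]};
    return project(p, f, cx, cy);
}
```

With the answer's variables, f would be max_d, (cx, cy) the image centre, and each axis a line from project(tvec, ...) to axisEndpoint(R, tvec, j, ...) for j = 0, 1, 2; the axis length is arbitrary. Because the model's y axis points up the face (the eyes have larger y than the mouth) while the camera's y axis points down the image, the projected y axis points upward on an upright face, which is likely also why the X angle above is close to 180 degrees.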

I appreciate your help; I will try this code tonight :D –

Senega

I'm trying your solution, but I'm stuck at the step of getting the positions of the eyes, nose, ... from the 3D model provided by dlib at this link: sourceforge.net/projects/dclib/files/dlib/v18.10/…. The .dat file is really generic. I tried changing the file extension so I could open it in some 3D software, but it didn't work. Do you have any suggestions? –

Senega

You don't have to rewrite those 3D points; you just need to update srcImagePoints accordingly. –

Heraclitus

Hi, I'm trying your solution. As you say, I should put in the corresponding points so that they get mapped to the 3D model. But HOW does the given shape_predictor_68_face_landmarks.dat file label the points? There are 68 of them, but of course we need to pick the correct ones to put into srcImagePoints. I've looked for some sort of documentation but didn't find anything useful. Any suggestions? –

Adventist
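The .dat file is a trained 2D landmark predictor rather than a 3D mesh, so the 3D modelPoints can stay as they are and only srcImagePoints needs to be filled from the detector output. Below is a minimal sketch of that selection, assuming the standard iBUG 300-W 68-point layout that shape_predictor_68_face_landmarks.dat follows (0-based: 0-16 jaw line, 30 nose tip, 36-41 and 42-47 the eyes on the image's left and right, 48 and 54 the outer mouth corners). Two further assumptions: the model's eye vertices are treated as eye centres (the mean of the six eye points), and because the 68-point scheme has no ear landmarks, jaw points 0 and 16 stand in for the ears; if that hurts accuracy, drop the ear points from both modelPoints and srcImagePoints. The Pt struct and the function names are hypothetical; with dlib you would fill the input vector from shape.part(i).x() and shape.part(i).y() of the dlib::full_object_detection returned by the shape predictor.

```cpp
#include <stdexcept>
#include <vector>

// Hypothetical 2D point type; with dlib, fill it from shape.part(i).x() / .y().
struct Pt { double x, y; };

// Mean of landmarks first..last (inclusive, 0-based indices).
Pt centroid(const std::vector<Pt>& lm, int first, int last)
{
    Pt c{0, 0};
    for (int i = first; i <= last; ++i) { c.x += lm[i].x; c.y += lm[i].y; }
    const double n = last - first + 1;
    return {c.x / n, c.y / n};
}

// Picks the 7 image points in the same order as modelPoints, assuming the
// iBUG 300-W 68-point layout used by shape_predictor_68_face_landmarks.dat.
std::vector<Pt> imagePointsFrom68(const std::vector<Pt>& lm)
{
    if (lm.size() != 68) throw std::invalid_argument("expected 68 landmarks");
    return {
        centroid(lm, 36, 41), // left eye (image left): mean of points 36-41
        centroid(lm, 42, 47), // right eye (image right): mean of points 42-47
        lm[30],               // nose tip
        lm[48],               // left lip corner
        lm[54],               // right lip corner
        lm[0],                // "left ear": approximated by jaw point 0 (assumption)
        lm[16]                // "right ear": approximated by jaw point 16 (assumption)
    };
}
```

Each returned point can then be converted with cv::Point2f(float(p.x), float(p.y)) and pushed into srcImagePoints in the same order.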

@ZdaR: I'm trying this solution and it seems to work. I want to change the usage a little by having an "origin pose" against which all other poses are compared (I want to know whether the object has moved from its original position and, if so, compute this change). Is it OK to just subtract the origin rotation angle from the current rotation angle? (Same with translations?) Thanks for your help. –

Skywriting

@Skywriting For the translation part it would work fine, but I am not sure about the rotation part; however, you can give it a try and see if it works. –

Heraclitus
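On the rotation part: subtracting Euler angles is only exact in special cases (for example, when all poses differ by a rotation about a single axis), because 3D rotations do not combine by adding angles. A more robust sketch, assuming you keep the rotation matrix R0 of the origin pose (from cv::Rodrigues on its rvec), is to form the relative rotation R_rel = R_cur * R0^T and read off its total angle as acos((trace(R_rel) - 1) / 2). The translation difference tvec_cur - tvec0 is the displacement of the model origin in camera coordinates. Mat3 and the function names are hypothetical helpers, not OpenCV API.

```cpp
#include <algorithm>
#include <array>
#include <cmath>

using Mat3 = std::array<std::array<double, 3>, 3>; // row-major: R[row][col]

// Relative rotation R_rel = R_cur * R0^T: the rotation (in camera coordinates)
// that takes the origin pose R0 to the current pose R_cur.
Mat3 relativeRotation(const Mat3& Rcur, const Mat3& R0)
{
    Mat3 out{}; // zero-initialised
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            for (int k = 0; k < 3; ++k)
                out[i][j] += Rcur[i][k] * R0[j][k]; // R0^T[k][j] == R0[j][k]
    return out;
}

// Total rotation angle of R in degrees: acos((trace(R) - 1) / 2).
double rotationAngleDeg(const Mat3& R)
{
    const double pi = std::acos(-1.0);
    double c = (R[0][0] + R[1][1] + R[2][2] - 1.0) / 2.0;
    c = std::max(-1.0, std::min(1.0, c)); // guard against rounding outside [-1, 1]
    return std::acos(c) * 180.0 / pi;
}
```

A small threshold on rotationAngleDeg(relativeRotation(Rcur, R0)) then tells you whether the head has rotated away from the origin pose, independently of which Euler convention is used for display.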
