How can I establish a connection between an EMR master cluster (created by Terraform) and Airflow? I have Airflow set up on an AWS EC2 server in the same security group (SG), VPC, and subnet.

I need a way for Airflow to talk to EMR and run `spark-submit`.

The blogs I found only explain how to run jobs once the connection has been established, so they didn't help much.

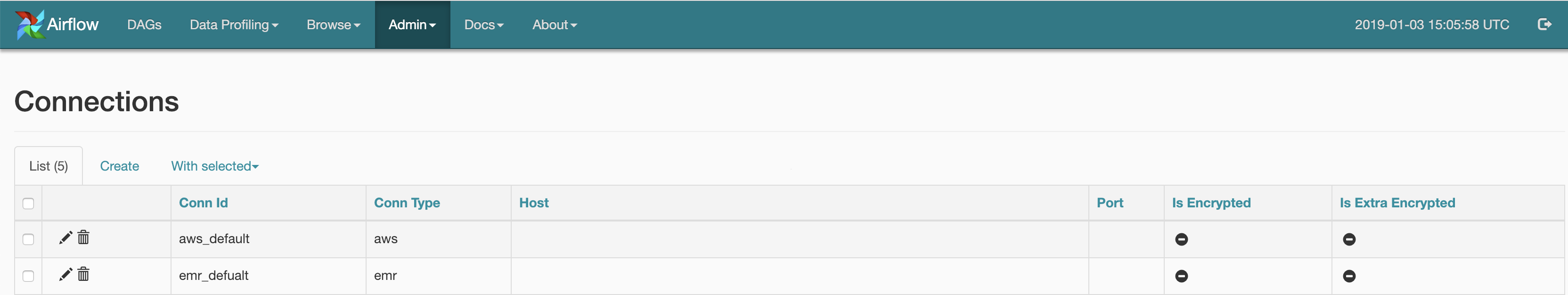

In Airflow, I have created connections for AWS and EMR through the UI:

Below is code that lists the EMR clusters that are active and terminated; I can also fine-tune it to return only active clusters:

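```python
from airflow.contrib.hooks.aws_hook import AwsHook

# Reuse the credentials stored in the Airflow connection "aws_default"
hook = AwsHook(aws_conn_id='aws_default')
client = hook.get_client_type('emr', 'eu-central-1')

# List clusters in all states (active and terminated)
clusters = client.list_clusters()['Clusters']

for x in clusters:
    print(x['Status']['State'], x['Name'])
```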
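To narrow the listing to active clusters, `list_clusters` accepts a `ClusterStates` filter and returns a `Marker` when there are more pages. The sketch below assumes that "active" means the `STARTING`, `BOOTSTRAPPING`, `RUNNING`, and `WAITING` states and that `client` is the EMR client returned by `hook.get_client_type`. The helper name `active_cluster_ids` is hypothetical.

```python
# Assumption: these states count as "active" for this use case
ACTIVE_STATES = ["STARTING", "BOOTSTRAPPING", "RUNNING", "WAITING"]


def active_cluster_ids(client, name=None):
    """Return IDs of active EMR clusters, optionally only those with a given name.

    `client` is assumed to be a boto3 EMR client (e.g. from AwsHook.get_client_type).
    """
    ids = []
    kwargs = {"ClusterStates": ACTIVE_STATES}
    while True:
        page = client.list_clusters(**kwargs)
        for cluster in page["Clusters"]:
            if name is None or cluster["Name"] == name:
                ids.append(cluster["Id"])
        # list_clusters is paginated: follow Marker until it is absent
        marker = page.get("Marker")
        if not marker:
            return ids
        kwargs["Marker"] = marker
```

Filtering by name is one way to find the cluster Terraform created, assuming its name is known and unique among active clusters.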

My question is: how can I update the code above so that it can perform `spark-submit` actions?
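One common approach, instead of running `spark-submit` on the master node over SSH, is to submit it as an EMR step. The step runs `command-runner.jar` with `spark-submit` and its arguments, and boto3's `add_job_flow_steps` queues it on the cluster. This is a minimal sketch that assumes the same `client` as the code above. The helper names, the S3 script path, and the default `--deploy-mode cluster` are illustrative assumptions.

```python
def build_spark_step(name, script_path, script_args=(), spark_args=("--deploy-mode", "cluster")):
    """Build an EMR step definition that runs spark-submit via command-runner.jar."""
    return {
        "Name": name,
        # CONTINUE keeps the cluster running if this step fails
        "ActionOnFailure": "CONTINUE",
        "HadoopJarStep": {
            "Jar": "command-runner.jar",
            "Args": ["spark-submit", *spark_args, script_path, *script_args],
        },
    }


def submit_spark_step(client, cluster_id, step, wait=False):
    """Queue the step on the cluster and return its step ID."""
    response = client.add_job_flow_steps(JobFlowId=cluster_id, Steps=[step])
    step_id = response["StepIds"][0]
    if wait:
        # Blocks until the step finishes; raises a WaiterError if the step fails
        client.get_waiter("step_complete").wait(ClusterId=cluster_id, StepId=step_id)
    return step_id
```

For example, `submit_spark_step(client, cluster_id, build_spark_step("my-job", "s3://my-bucket/jobs/job.py"), wait=True)`, where the bucket and script are placeholders. Inside a DAG these calls could run from a `PythonOperator`. Airflow 1.10 also ships `EmrAddStepsOperator` and `EmrStepSensor` under `airflow.contrib`, which take step definitions in this same format.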