Nikie's answer solved my problem, but his answer was in Mathematica. So I thought I should give its OpenCV adaptation here. But after implementing I could see that OpenCV code is much bigger than nikie's mathematica code. And also, I couldn't find interpolation method done by nikie in OpenCV ( although it can be done using scipy, i will tell it when time comes.)

1. Image PreProcessing ( closing operation )

import cv2

import numpy as np

img = cv2.imread('dave.jpg')

img = cv2.GaussianBlur(img,(5,5),0)

gray = cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

mask = np.zeros((gray.shape),np.uint8)

kernel1 = cv2.getStructuringElement(cv2.MORPH_ELLIPSE,(11,11))

close = cv2.morphologyEx(gray,cv2.MORPH_CLOSE,kernel1)

div = np.float32(gray)/(close)

res = np.uint8(cv2.normalize(div,div,0,255,cv2.NORM_MINMAX))

res2 = cv2.cvtColor(res,cv2.COLOR_GRAY2BGR)

Result :

![Result of closing]()

2. Finding Sudoku Square and Creating Mask Image

thresh = cv2.adaptiveThreshold(res,255,0,1,19,2)

contour,hier = cv2.findContours(thresh,cv2.RETR_TREE,cv2.CHAIN_APPROX_SIMPLE)

max_area = 0

best_cnt = None

for cnt in contour:

area = cv2.contourArea(cnt)

if area > 1000:

if area > max_area:

max_area = area

best_cnt = cnt

cv2.drawContours(mask,[best_cnt],0,255,-1)

cv2.drawContours(mask,[best_cnt],0,0,2)

res = cv2.bitwise_and(res,mask)

Result :

![enter image description here]()

3. Finding Vertical lines

kernelx = cv2.getStructuringElement(cv2.MORPH_RECT,(2,10))

dx = cv2.Sobel(res,cv2.CV_16S,1,0)

dx = cv2.convertScaleAbs(dx)

cv2.normalize(dx,dx,0,255,cv2.NORM_MINMAX)

ret,close = cv2.threshold(dx,0,255,cv2.THRESH_BINARY+cv2.THRESH_OTSU)

close = cv2.morphologyEx(close,cv2.MORPH_DILATE,kernelx,iterations = 1)

contour, hier = cv2.findContours(close,cv2.RETR_EXTERNAL,cv2.CHAIN_APPROX_SIMPLE)

for cnt in contour:

x,y,w,h = cv2.boundingRect(cnt)

if h/w > 5:

cv2.drawContours(close,[cnt],0,255,-1)

else:

cv2.drawContours(close,[cnt],0,0,-1)

close = cv2.morphologyEx(close,cv2.MORPH_CLOSE,None,iterations = 2)

closex = close.copy()

Result :

![enter image description here]()

4. Finding Horizontal Lines

kernely = cv2.getStructuringElement(cv2.MORPH_RECT,(10,2))

dy = cv2.Sobel(res,cv2.CV_16S,0,2)

dy = cv2.convertScaleAbs(dy)

cv2.normalize(dy,dy,0,255,cv2.NORM_MINMAX)

ret,close = cv2.threshold(dy,0,255,cv2.THRESH_BINARY+cv2.THRESH_OTSU)

close = cv2.morphologyEx(close,cv2.MORPH_DILATE,kernely)

contour, hier = cv2.findContours(close,cv2.RETR_EXTERNAL,cv2.CHAIN_APPROX_SIMPLE)

for cnt in contour:

x,y,w,h = cv2.boundingRect(cnt)

if w/h > 5:

cv2.drawContours(close,[cnt],0,255,-1)

else:

cv2.drawContours(close,[cnt],0,0,-1)

close = cv2.morphologyEx(close,cv2.MORPH_DILATE,None,iterations = 2)

closey = close.copy()

Result :

![enter image description here]()

Of course, this one is not so good.

5. Finding Grid Points

res = cv2.bitwise_and(closex,closey)

Result :

![enter image description here]()

6. Correcting the defects

Here, nikie does some kind of interpolation, about which I don't have much knowledge. And i couldn't find any corresponding function for this OpenCV. (may be it is there, i don't know).

Check out this SOF which explains how to do this using SciPy, which I don't want to use : Image transformation in OpenCV

So, here I took 4 corners of each sub-square and applied warp Perspective to each.

For that, first we find the centroids.

contour, hier = cv2.findContours(res,cv2.RETR_LIST,cv2.CHAIN_APPROX_SIMPLE)

centroids = []

for cnt in contour:

mom = cv2.moments(cnt)

(x,y) = int(mom['m10']/mom['m00']), int(mom['m01']/mom['m00'])

cv2.circle(img,(x,y),4,(0,255,0),-1)

centroids.append((x,y))

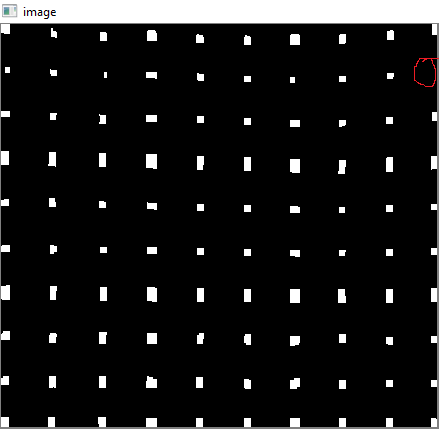

But resulting centroids won't be sorted. Check out below image to see their order:

![enter image description here]()

So we sort them from left to right, top to bottom.

centroids = np.array(centroids,dtype = np.float32)

c = centroids.reshape((100,2))

c2 = c[np.argsort(c[:,1])]

b = np.vstack([c2[i*10:(i+1)*10][np.argsort(c2[i*10:(i+1)*10,0])] for i in xrange(10)])

bm = b.reshape((10,10,2))

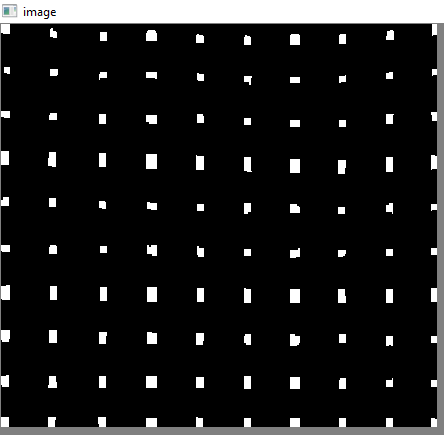

Now see below their order :

![enter image description here]()

Finally we apply the transformation and create a new image of size 450x450.

output = np.zeros((450,450,3),np.uint8)

for i,j in enumerate(b):

ri = i/10

ci = i%10

if ci != 9 and ri!=9:

src = bm[ri:ri+2, ci:ci+2 , :].reshape((4,2))

dst = np.array( [ [ci*50,ri*50],[(ci+1)*50-1,ri*50],[ci*50,(ri+1)*50-1],[(ci+1)*50-1,(ri+1)*50-1] ], np.float32)

retval = cv2.getPerspectiveTransform(src,dst)

warp = cv2.warpPerspective(res2,retval,(450,450))

output[ri*50:(ri+1)*50-1 , ci*50:(ci+1)*50-1] = warp[ri*50:(ri+1)*50-1 , ci*50:(ci+1)*50-1].copy()

Result :

![enter image description here]()

The result is almost same as nikie's, but code length is large. May be, better methods are available out there, but until then, this works OK.

Regards

ARK.

![enter image description here]()

![enter image description here]()