You almost got it, but what I had in mind (from the comments) was more like this:

prepare image

switch to gray-scale, remove noise (by some blurring), enhance dynamic range, etc.

derive the image along both the x and y axes and create a 2D gradient field

So recolor the image and create a 2D vector field. Each pixel has RGB, so use R for one axis and B for the other. I do it like this:

    Blue(x,y)=abs(Intensity(x,y)-Intensity(x-1,y))
    Red (x,y)=abs(Intensity(x,y)-Intensity(x,y-1))

The sub result looks like this: ![edges]()
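To make the two formulas above concrete, here is a minimal stand-alone sketch. It assumes a row-major grayscale buffer of `int` intensities; `Gradient2D` and `derive_xy` are hypothetical names for illustration only, not members of my picture class.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <vector>

// Hypothetical helper (not part of the picture class used in this answer):
// absolute intensity differences along x and y for a row-major image.
struct Gradient2D {
    std::vector<int> dx; // |I(x,y) - I(x-1,y)|  (the "Blue" channel above)
    std::vector<int> dy; // |I(x,y) - I(x,y-1)|  (the "Red" channel above)
};

Gradient2D derive_xy(const std::vector<int>& intensity, int xs, int ys)
{
    assert(xs > 0 && ys > 0 &&
           intensity.size() == static_cast<std::size_t>(xs) * ys);
    Gradient2D g;
    g.dx.assign(intensity.size(), 0);
    g.dy.assign(intensity.size(), 0);
    for (int y = 0; y < ys; y++)
        for (int x = 0; x < xs; x++) {
            const std::size_t i = static_cast<std::size_t>(y) * xs + x;
            // the first column/row has no left/upper neighbour, so it stays zero
            if (x > 0) g.dx[i] = std::abs(intensity[i] - intensity[i - 1]);
            if (y > 0) g.dy[i] = std::abs(intensity[i] - intensity[i - xs]);
        }
    return g;
}
```

Leaving the first row and column at zero is just one possible border policy; clamping or mirroring the border would work too.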

Threshold image to emphasize edges

So pick each pixel and test `Blue(x,y)+Red(x,y)<threshold`; if true, recolor it to the unknown color, else recolor it to the edge color. For your sample image I used threshold 24. After that, smooth the result to fill the small gaps with blurred color. The sub result looks like this: ![enter image description here]()

The greenish stuff is my unknown color and white are the edges. As you can see I blurred quite a lot (too lazy to implement connected components).

detect background

So now, to distinguish the background from the bones inside, I use a special filling method (a simple flood fill will do as well) that I developed for DIP stuff and that has turned out to be useful far more often than I originally expected.

    void growfill(DWORD c0,DWORD c1,DWORD c2); // grow/flood fill c0 neighbouring c1 with c2

It simply checks all pixels in the image, and if it finds color c0 next to c1 it recolors it to c2, looping until no recolor occurs. For bigger resolutions it is usually much faster than flood fill, as there is no need for recursion or stack/heap/lists. It can also be used for many cool effects like thinning/thickening with just a few simple calls.
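The real `growfill` lives inside my picture class, so here is only a rough stand-alone sketch of the idea as described above. It assumes a row-major 32-bit pixel buffer and 4-neighbourhood; the free function `grow_fill` and its extra `pix`, `xs`, `ys` parameters are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-alone grow fill: every pixel of colour c0 that has a
// 4-neighbour of colour c1 is recoloured to c2; full passes over the image
// repeat until one pass changes nothing.
void grow_fill(std::vector<std::uint32_t>& pix, int xs, int ys,
               std::uint32_t c0, std::uint32_t c1, std::uint32_t c2)
{
    assert(xs > 0 && ys > 0 &&
           pix.size() == static_cast<std::size_t>(xs) * ys);
    if (c2 == c0) return; // nothing would change, and the loop would never end

    bool changed = true;
    while (changed) {
        changed = false;
        for (int y = 0; y < ys; y++)
            for (int x = 0; x < xs; x++) {
                const std::size_t i = static_cast<std::size_t>(y) * xs + x;
                if (pix[i] != c0) continue;
                const bool near_c1 =
                    (x > 0      && pix[i - 1]  == c1) ||
                    (x + 1 < xs && pix[i + 1]  == c1) ||
                    (y > 0      && pix[i - xs] == c1) ||
                    (y + 1 < ys && pix[i + xs] == c1);
                if (near_c1) { pix[i] = c2; changed = true; }
            }
    }
}
```

With `c2 == c1` the fill keeps spreading until the whole connected `c0` area is consumed (flood fill behaviour). With `c2 != c1` only the single layer of `c0` pixels touching `c1` is recoloured, which is the basis for the thickening/thinning effects.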

OK, back to the topic. I chose 3 base colors:

    //RRGGBB
    const DWORD col_unknown   =0x00408020; // yet undetermined pixels
    const DWORD col_background=0x00000000;
    const DWORD col_edge      =0x00FFFFFF;

Now the background is for sure along the image borders, so I draw a rectangle with col_background around the image and grow fill all col_unknown pixels near col_background with col_background, which basically flood fills the image from the outside to the inside.

After this I recolor all pixels that are not any of the 3 defined colors to their closest match. This will remove the blur as it is not desirable anymore. The sub result looks like this: ![background]()

segmentation/labeling

Now just scan the whole image, and whenever a col_unknown pixel is found, grow fill it with a distinct object color/index. Then change the current object color/index (increment it) and continue until the end of the image. Be careful with the colors: you have to avoid the 3 predefined colors, otherwise you will merge areas that you do not want merged.

The final result looks like this: ![bones]()

now you can apply any form of analysis/comparison

You now have a pixel mask of each object region, so you can count its pixels (area) and ignore areas that are too small. Compute the average pixel position (center) of each object and use that to detect which bone it actually is. Compute the homogeneity of the area ... rescale to template bones ... etc ...
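The area and center computation could look roughly like the following sketch. It assumes the labeled image is available as a row-major 32-bit buffer; `RegionStats`, `region_stats` and the `min_area` parameter are hypothetical names introduced only for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <vector>

struct RegionStats {
    long   area = 0;  // number of pixels carrying this label
    double cx   = 0;  // average x position (center)
    double cy   = 0;  // average y position (center)
};

// Hypothetical helper: collect area and center per label colour, skipping
// background and edge pixels and dropping regions smaller than min_area.
std::map<std::uint32_t, RegionStats>
region_stats(const std::vector<std::uint32_t>& labels, int xs, int ys,
             std::uint32_t col_background, std::uint32_t col_edge,
             long min_area)
{
    assert(xs > 0 && ys > 0 &&
           labels.size() == static_cast<std::size_t>(xs) * ys);
    std::map<std::uint32_t, RegionStats> stats;
    for (int y = 0; y < ys; y++)
        for (int x = 0; x < xs; x++) {
            const std::uint32_t c = labels[static_cast<std::size_t>(y) * xs + x];
            if (c == col_background || c == col_edge) continue;
            RegionStats& s = stats[c];
            s.area++;      // accumulate pixel count
            s.cx += x;     // accumulate coordinate sums, divided below
            s.cy += y;
        }
    for (auto it = stats.begin(); it != stats.end(); ) {
        if (it->second.area < min_area) { it = stats.erase(it); continue; }
        it->second.cx /= it->second.area;
        it->second.cy /= it->second.area;
        ++it;
    }
    return stats;
}
```

The centers can then be compared against expected bone positions, and the surviving labels passed on to whatever shape analysis you choose.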

Here is some C++ code I did this with:

    color c,d;
    int x,y,i,i0,i1;
    int tr0=Form1->sb_treshold0->Position;  // =24 threshold from scrollbar
    //RRGGBB
    const DWORD col_unknown   =0x00408020;  // yet undetermined pixels
    const DWORD col_background=0x00000000;
    const DWORD col_edge      =0x00FFFFFF;
    // [prepare image]
    pic1=pic0;                  // copy input image pic0 to output pic1
    pic1.pixel_format(_pf_u);   // convert to grayscale intensity <0,765>
    pic1.enhance_range();       // recompute colors so they cover full dynamic range
    pic1.smooth(1);             // blur a bit to remove noise
    // extract edges
    pic1.deriveaxy();           // compute derivatives (change in intensity in x and y axis as 2D gradient vector)
    pic1.save("out0.png");
    pic1.pf=_pf_rgba;           // from now on the recolored image will be RGBA (no need for conversion)
    for (y=0;y<pic1.ys;y++)     // threshold recolor
     for (x=0;x<pic1.xs;x++)
        {
        c=pic1.p[y][x];
        i=c.dw[picture::_x]+c.dw[picture::_y];              // i=|dcolor/dx| + |dcolor/dy|
        if (i<tr0) c.dd=col_unknown; else c.dd=col_edge;    // threshold test&recolor
        pic1.p[y][x]=c;
        }
    pic1.smooth(5);             // blur a bit to fill the small gaps
    pic1.save("out1.png");
    // [background]
    // render background color rectangle around image
    pic1.bmp->Canvas->Pen->Color=rgb2bgr(col_background);
    pic1.bmp->Canvas->Brush->Style=bsClear;
    pic1.bmp->Canvas->Rectangle(0,0,pic1.xs,pic1.ys);
    pic1.bmp->Canvas->Brush->Style=bsSolid;
    // grow fill all col_unknown pixels near col_background pixels with col_background
    // (similar to floodfill but without recursion and more usable)
    pic1.growfill(col_unknown,col_background,col_background);
    // recolor blurred colors back to their closest match
    for (y=0;y<pic1.ys;y++)
     for (x=0;x<pic1.xs;x++)
        {
        c=pic1.p[y][x];
        d.dd=col_edge      ; i=abs(c.db[0]-d.db[0])+abs(c.db[1]-d.db[1])+abs(c.db[2]-d.db[2]);              i0=i; i1=col_edge;
        d.dd=col_unknown   ; i=abs(c.db[0]-d.db[0])+abs(c.db[1]-d.db[1])+abs(c.db[2]-d.db[2]); if (i0>i) { i0=i; i1=d.dd; }
        d.dd=col_background; i=abs(c.db[0]-d.db[0])+abs(c.db[1]-d.db[1])+abs(c.db[2]-d.db[2]); if (i0>i) { i0=i; i1=d.dd; }
        pic1.p[y][x].dd=i1;
        }
    pic1.save("out2.png");
    // [segmentation/labeling]
    i=0x00202020;               // labeling color/idx
    for (y=0;y<pic1.ys;y++)
     for (x=0;x<pic1.xs;x++)
      if (pic1.p[y][x].dd==col_unknown)
        {
        pic1.p[y][x].dd=i;      // seed the new region with its label color
        pic1.growfill(col_unknown,i,i);
        i+=0x00050340;          // next label color
        }
    pic1.save("out3.png");

I use my own picture class for images so some members are:

- `xs,ys` size of image in pixels
- `p[y][x].dd` is pixel at (x,y) position as 32 bit integer type
- `p[y][x].dw[2]` is pixel at (x,y) position as 2x16 bit integer type for 2D fields
- `p[y][x].db[4]` is pixel at (x,y) position as 4x8 bit integer type for easy channel access
- `clear(color)` - clears entire image
- `resize(xs,ys)` - resizes image to new resolution
- `bmp` - VCL encapsulated GDI Bitmap with Canvas access
- `smooth(n)` - fast blur the image n times
- `growfill(DWORD c0,DWORD c1,DWORD c2)` - grow/flood fill c0 neighbouring c1 with c2

[Edit1] scan line based bone detection

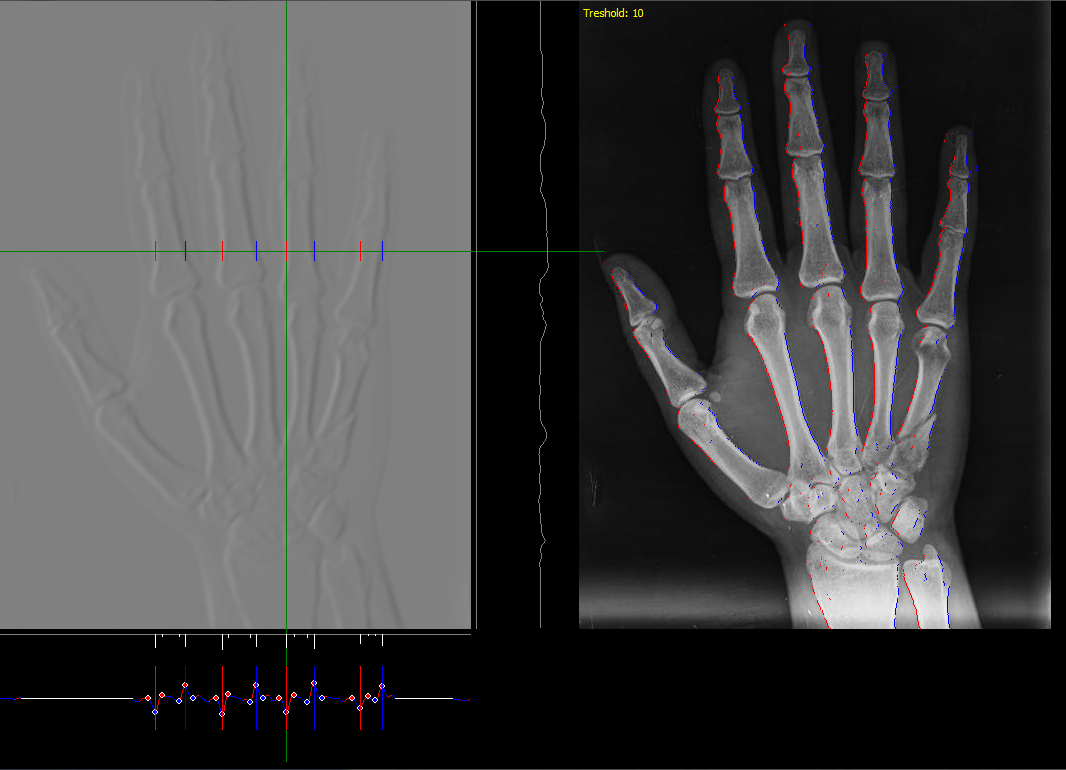

As in the linked find horizon QA, you have to cast scan lines and search for a distinct feature that identifies a bone. I would start with the partial derivative of the image (along the x axis), like this one:

![scan line]()

On the left is the color intensity derivative by x (gray means zero) and on the right is the original image. The side graphs show the derivative as a function of x and y, taken for the row and column at the current mouse position. As you can see, each bone has a distinct shape in the derivative which can be detected. I used a very simple detector like this:

- for each processed image line, do a partial derivation by x
- find all peaks (the circles)
- remove too small peaks and merge the same sign peaks together
- detect a bone by its 4 consecutive peaks:
  - big negative
  - small positive
  - small negative
  - big positive

For each found bone edge I render a red and a blue pixel in the original image (at the positions of the big peaks) to visually check correctness. You can also do this along the y axis in the same manner and merge the results. To improve this you should use better detection, for example by use of correlation ...
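A minimal sketch of such a detector for one scan line could look like this. It assumes the line is given as `int` intensities; `Peak`, `find_peaks`, `detect_bones` and the two thresholds `min_peak` and `big` are hypothetical and would need tuning on real images.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <utility>
#include <vector>

struct Peak { int x; int value; }; // position and signed derivative value

// Local extrema of the x derivative of one scan line. Peaks weaker than
// min_peak are dropped; neighbouring same-sign peaks collapse to the strongest.
std::vector<Peak> find_peaks(const std::vector<int>& line, int min_peak)
{
    assert(min_peak > 0);
    std::vector<int> d(line.size(), 0);
    for (std::size_t x = 1; x < line.size(); x++) d[x] = line[x] - line[x - 1];

    std::vector<Peak> peaks;
    for (std::size_t x = 1; x + 1 < d.size(); x++) {
        if (std::abs(d[x]) < min_peak) continue;              // too small
        const bool is_max = d[x] > 0 && d[x] >= d[x - 1] && d[x] >= d[x + 1];
        const bool is_min = d[x] < 0 && d[x] <= d[x - 1] && d[x] <= d[x + 1];
        if (!is_max && !is_min) continue;                     // not an extremum
        const Peak p{static_cast<int>(x), d[x]};
        if (!peaks.empty() && (peaks.back().value > 0) == (p.value > 0)) {
            // same sign as the previous peak: keep only the stronger one
            if (std::abs(p.value) > std::abs(peaks.back().value)) peaks.back() = p;
        } else {
            peaks.push_back(p);
        }
    }
    return peaks;
}

// Returns the x positions of the two big peaks for every run of
// big negative, small positive, small negative, big positive.
std::vector<std::pair<int, int>> detect_bones(const std::vector<Peak>& p, int big)
{
    std::vector<std::pair<int, int>> bones;
    for (std::size_t i = 0; i + 3 < p.size(); i++) {
        const bool match =
            p[i].value     <= -big &&
            p[i + 1].value >  0 && p[i + 1].value <  big &&
            p[i + 2].value <  0 && p[i + 2].value > -big &&
            p[i + 3].value >=  big;
        if (match) { bones.emplace_back(p[i].x, p[i + 3].x); i += 3; }
    }
    return bones;
}
```

Each returned pair marks where the red and blue pixels would be drawn on that line. Real images will need noise handling beyond these two fixed thresholds.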

Instead of rendering the edges, you can easily create a mask of the bones, then segment it into separate bones and handle them as in the text above. You can also use morphological operations to fill any gaps.

The last thing I can think of is to also add some detection for the joint ends of the bones (the shape is different there). It needs a lot of experimenting, but at least you know which way to go.
