How can I calculate pearson cross correlation matrix of large (>10TB) data set, possibly in distributed manner ? Any efficient distributed algorithm suggestion will be appreciated.

update: I read the implementation of apache spark mlib correlation

Pearson Computaation:

/home/d066537/codespark/spark/mllib/src/main/scala/org/apache/spark/mllib/stat/correlation/Correlation.scala

Covariance Computation:

/home/d066537/codespark/spark/mllib/src/main/scala/org/apache/spark/mllib/linalg/distributed/RowMatrix.scala

but for me it looks like all the computation is happening at one node and it is not distributed in real sense.

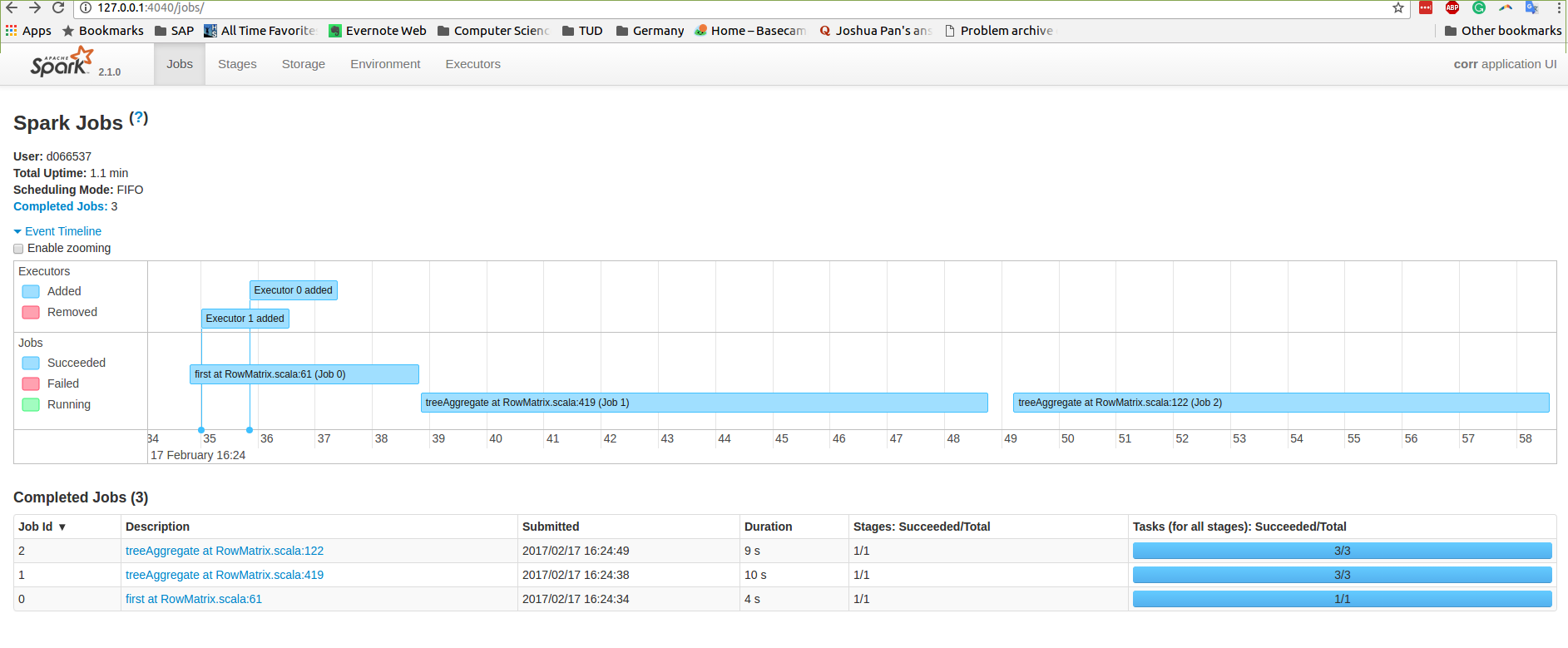

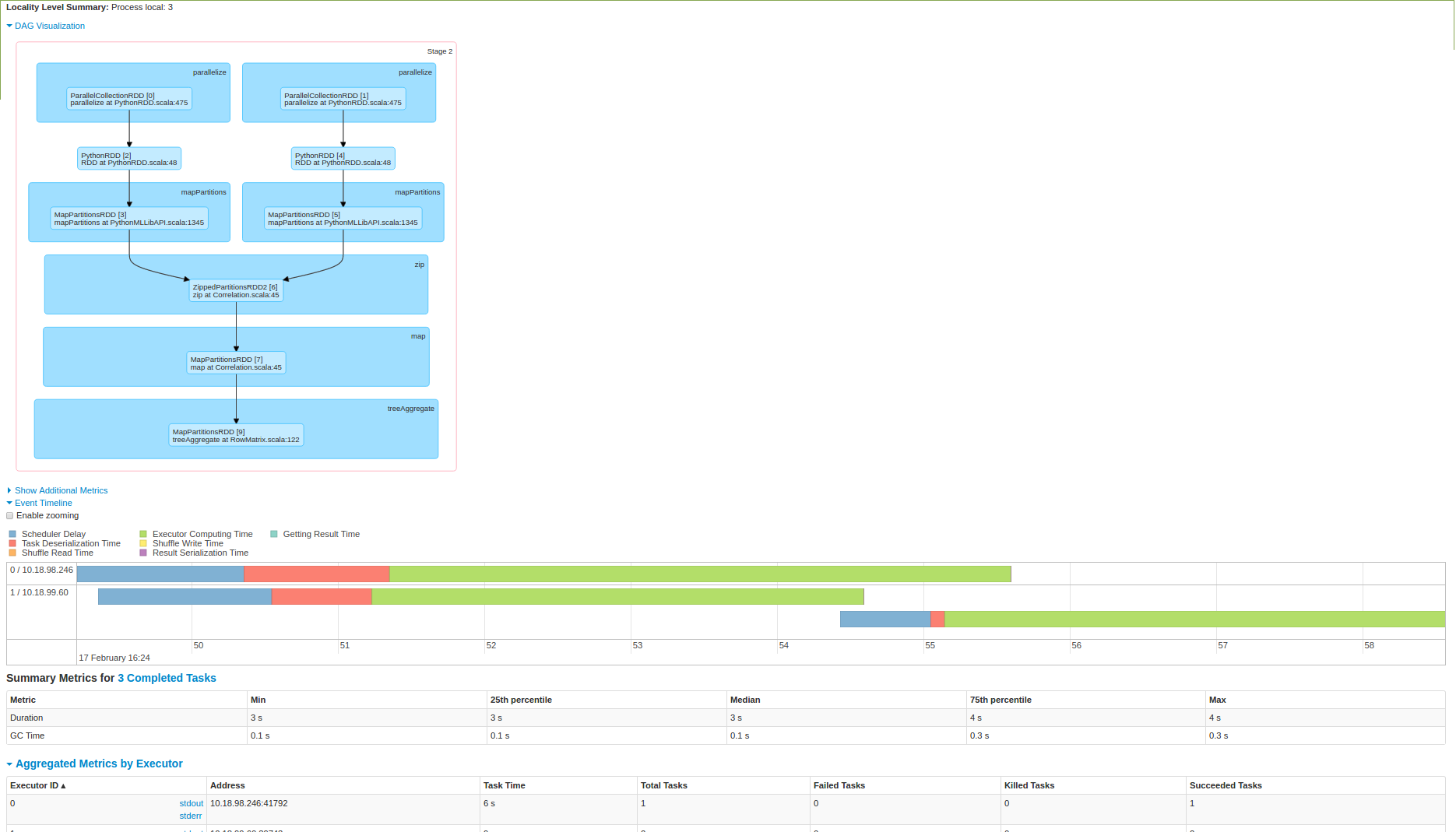

Please put some light in here. I also tried executing it on a 3 node spark cluster and below are the screenshot:

As you can see from 2nd image that data is pulled up at one node and then computation is being done.Am i right in here ?