First of all, you said:

> /.../ but it seems max_tokens is for breaking the input down not the output.

This is wrong. The max_tokens parameter caps the number of tokens generated in the output (i.e., response). What is shared between the input (i.e., prompt) and the output is the token limit of a particular OpenAI model: tokens from the prompt and the completion together must not exceed it.

As stated in the official OpenAI article:

> Depending on the model used, requests can use up to 4097 tokens shared between prompt and completion. If your prompt is 4000 tokens, your completion can be 97 tokens at most.
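The arithmetic in the quote can be sketched as a small helper. The function name `maxCompletionTokens` and its parameters are hypothetical, chosen only for illustration; the 4097 figure comes from the quote and differs between models.

```javascript
// Sketch: how many completion tokens remain once the prompt is counted.
// `contextLimit` is the model's total token limit (4097 in the quote above).
function maxCompletionTokens(contextLimit, promptTokens) {
  if (!Number.isInteger(contextLimit) || !Number.isInteger(promptTokens)) {
    throw new TypeError('Token counts must be integers');
  }
  // A prompt that already fills the limit leaves no room for a response.
  return Math.max(0, contextLimit - promptTokens);
}

console.log(maxCompletionTokens(4097, 4000)); // 97
```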

Solution 1: Using the max_tokens parameter (works with the GPT-3, GPT-3.5, and GPT-4 APIs)

STEP 1: Choose the maximum length restriction for the prompt

Let's say that you'll allow the user to enter a prompt of a maximum length of 20 words. That should be enough for simple questions like:

- How does climate change impact oceans and marine life? (9 words, 10 tokens)

- Explain the theory of relativity and its significance in modern physics. (11 words, 13 tokens)

- What are the potential benefits and risks of artificial intelligence in healthcare? (12 words, 13 tokens)

- Describe the process of cellular respiration and its role in producing energy for cells. (14 words, 17 tokens)

- Discuss the effects of deforestation on local ecosystems, climate, and global biodiversity. (12 words, 15 tokens)

Using the Tokenizer, you can see that 1 word is not equal to 1 token. For example, questions 3 and 5 both consist of 12 words but have different numbers of tokens (13 and 15). Because the OpenAI API operates with tokens, not words, you need to convert your limit of 20 words per prompt into tokens. Let's say that you'll allow the user to enter a prompt of a maximum length of 22 tokens. This is approximately 20 words, give or take a few depending on the text.
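To make the word/token gap concrete, here is a minimal sketch that counts the words in the five sample questions and sets them against the token counts listed above. The token counts are copied from the list, not computed; `countWords` is a hypothetical helper that splits on whitespace.

```javascript
// Sample prompts from the list above, paired with the token counts reported there.
const samples = [
  { text: 'How does climate change impact oceans and marine life?', tokens: 10 },
  { text: 'Explain the theory of relativity and its significance in modern physics.', tokens: 13 },
  { text: 'What are the potential benefits and risks of artificial intelligence in healthcare?', tokens: 13 },
  { text: 'Describe the process of cellular respiration and its role in producing energy for cells.', tokens: 17 },
  { text: 'Discuss the effects of deforestation on local ecosystems, climate, and global biodiversity.', tokens: 15 },
];

// Count words by splitting on runs of whitespace.
function countWords(text) {
  return text.trim().split(/\s+/).filter(Boolean).length;
}

for (const { text, tokens } of samples) {
  console.log(`${countWords(text)} words, ${tokens} tokens`);
}

// Average tokens per word across these samples only.
const totalWords = samples.reduce((sum, s) => sum + countWords(s.text), 0);
const totalTokens = samples.reduce((sum, s) => sum + s.tokens, 0);
console.log((totalTokens / totalWords).toFixed(2)); // 1.17
```

For these five samples the ratio is a little under 1.2 tokens per word, so a 20-word prompt lands in the low twenties of tokens, in the same ballpark as the 22-token limit chosen here. Other texts will give a different ratio.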

STEP 2: Use tiktoken to calculate the number of tokens in a prompt the user enters before(!) sending an API request to the OpenAI API

After you have chosen the maximum length restriction for the prompt, you need to check every prompt the user enters to make sure it doesn't exceed your limit of 22 tokens. You need to do this before you send an API request to the OpenAI API.

You can do this with tiktoken. As stated in the official OpenAI example:

> Tiktoken is a fast open-source tokenizer by OpenAI.
>
> Given a text string (e.g., "tiktoken is great!") and an encoding (e.g., "cl100k_base"), a tokenizer can split the text string into a list of tokens (e.g., ["t", "ik", "token", " is", " great", "!"]).

Tiktoken is very simple to use. See my past answer for more detailed information.

The logic is the following:

- If tiktoken returns 22 tokens or less, send the prompt to the OpenAI API.

- If tiktoken returns more than 22 tokens, send a message to the user something along the lines of: The text you entered is too long. Please make it shorter.

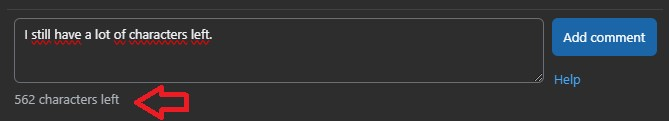
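The logic above could be sketched roughly as follows. `checkPrompt` and `fakeCountTokens` are hypothetical names, and the stand-in tokenizer exists only so the sketch runs without dependencies; the commented lines show how the `tiktoken` npm package might be wired in instead, which is an assumption about your setup.

```javascript
const MAX_PROMPT_TOKENS = 22; // limit chosen in STEP 1

// `countTokens` is any function mapping a string to its token count.
// With the `tiktoken` npm package it could look like this (not run here):
//   const { get_encoding } = require('tiktoken');
//   const enc = get_encoding('cl100k_base');
//   const countTokens = (text) => enc.encode(text).length;
function checkPrompt(prompt, countTokens, limit = MAX_PROMPT_TOKENS) {
  const tokens = countTokens(prompt);
  if (tokens <= limit) {
    // Within the limit: the caller may send the prompt to the OpenAI API.
    return { ok: true, tokens };
  }
  // Over the limit: do not call the API, return a message for the user instead.
  return {
    ok: false,
    tokens,
    message: 'The text you entered is too long. Please make it shorter.',
  };
}

// Stand-in tokenizer for the demo only: one token per whitespace-separated word.
// Real token counts differ, so swap in tiktoken before relying on the result.
const fakeCountTokens = (text) => text.trim().split(/\s+/).filter(Boolean).length;

console.log(checkPrompt('Who is Elon Musk?', fakeCountTokens));
// { ok: true, tokens: 4 }
```

Passing the tokenizer in as an argument keeps the check easy to test and lets you change the encoding without touching the gate itself.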

Note: This is not really good UX because the user doesn't know what your limit is (i.e., 22 tokens), and even if you state it, the user might not know how to count tokens with the Tokenizer. This can be easily solved on the frontend by implementing a counter so that the user can see how much more they can enter without exceeding the limit, similar to what Stack Overflow implements in the comment section. It would be very bad UX if we could press the Add comment button only to get back an error message saying: Your comment is too long. Please make it shorter.

![Screenshot SO]()

STEP 3: Set the max_tokens parameter

Again, the OpenAI API operates with tokens, not words. You need to transform your limit of 300 words per response into tokens. Let's say that's 700 tokens.

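Because max_tokens counts only the tokens generated in the completion, not the tokens in the prompt, you need to set the max_tokens parameter to 700 tokens. Together with the prompt limit, a request then uses at most 722 tokens (i.e., 22 tokens + 700 tokens = 722 tokens), which is well below the token limit of the model.

Of course, whether the user enters a prompt of 15 tokens or 22 tokens, the completion they'll get will never be more than 700 tokens. Keep in mind that max_tokens is a hard cutoff: if the model is not done after 700 tokens, the response is simply cut off.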
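As a rough sketch of this step, the request parameters could be assembled like this. Per the OpenAI API reference, max_tokens bounds only the generated completion, so the sketch passes the 700-token response budget directly and treats the 22-token prompt limit as a separate check against the model's limit. `buildChatRequest` and the constant names are hypothetical, and the 4097 figure is the one quoted earlier, which varies by model.

```javascript
const MAX_PROMPT_TOKENS = 22;    // prompt limit from STEP 1
const MAX_RESPONSE_TOKENS = 700; // response limit from STEP 3
const MODEL_TOKEN_LIMIT = 4097;  // figure quoted from the OpenAI article; varies by model

// Build the parameter object for a Chat Completions request.
function buildChatRequest(prompt) {
  // Prompt and completion share the model's token limit, so both budgets must fit.
  if (MAX_PROMPT_TOKENS + MAX_RESPONSE_TOKENS > MODEL_TOKEN_LIMIT) {
    throw new RangeError('Prompt and response budgets exceed the model token limit');
  }
  return {
    model: 'gpt-3.5-turbo',
    messages: [{ role: 'user', content: prompt }],
    max_tokens: MAX_RESPONSE_TOKENS, // hard cap on generated tokens
  };
}

const request = buildChatRequest('Who is Elon Musk?');
console.log(request.max_tokens); // 700
```

The returned object has the shape expected by `openai.createChatCompletion(...)` in the v3 Node.js SDK.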

Solution 2: Using the system message (works with the GPT-3.5 and GPT-4 APIs)

This solution works with the Chat Completions API (i.e., GPT-3.5 or GPT-4 models), not the Completions API (i.e., GPT-3). The messages parameter in the Chat Completions API allows you to set the system message, which helps to set the behavior of the assistant, as stated in the official OpenAI documentation:

> The main input is the messages parameter. Messages must be an array of message objects, where each object has a role (either "system", "user", or "assistant") and content. Conversations can be as short as one message or many back and forth turns.
>
> Typically, a conversation is formatted with a system message first, followed by alternating user and assistant messages.
>
> The system message helps set the behavior of the assistant. For example, you can modify the personality of the assistant or provide specific instructions about how it should behave throughout the conversation. However note that the system message is optional and the model’s behavior without a system message is likely to be similar to using a generic message such as "You are a helpful assistant."
>
> The user messages provide requests or comments for the assistant to respond to. Assistant messages store previous assistant responses, but can also be written by you to give examples of desired behavior.

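Setting the system message is the most appropriate way to set the behavior of the model, as far as I know. Keep in mind that the model treats it as an instruction rather than a hard limit, so a response may occasionally run longer than requested. You could try the following code: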

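    // Uses the v3 `openai` Node.js SDK (Configuration / OpenAIApi).
    const { Configuration, OpenAIApi } = require('openai');

    // Read the API key from the environment rather than hard-coding it.
    const configuration = new Configuration({
      apiKey: process.env.OPENAI_API_KEY,
    });

    const openai = new OpenAIApi(configuration);

    async function getCompletionFromOpenAI() {
      const completion = await openai.createChatCompletion({
        model: 'gpt-3.5-turbo',
        messages: [
          // The system message carries the length instruction.
          { role: 'system', content: 'You are a helpful assistant. Your response should be less than or equal to 300 words.' },
          { role: 'user', content: 'Who is Elon Musk?' },
        ],
      });

      // Print the text of the first (and only) returned choice.
      console.log(completion.data.choices[0].message.content);
    }

    // Log request failures instead of leaving the rejection unhandled.
    getCompletionFromOpenAI().catch(console.error);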
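Since a system message is an instruction and not an enforced limit, it may be worth checking the response after it arrives. The following is a minimal sketch under that assumption: `checkResponse` and `countWords` are hypothetical helpers, and the choice object is mocked so the example runs without an API call. The `finish_reason` field is part of each choice in the Chat Completions response, with the value `'length'` when generation stopped at a token limit.

```javascript
// Count words by splitting on runs of whitespace.
function countWords(text) {
  return text.trim().split(/\s+/).filter(Boolean).length;
}

// `choice` is one element of `completion.data.choices`.
function checkResponse(choice, maxWords = 300) {
  const words = countWords(choice.message.content);
  return {
    words,
    withinLimit: words <= maxWords,
    // 'length' means the output was cut off by max_tokens or the model's limit.
    truncated: choice.finish_reason === 'length',
  };
}

// Mocked choice object, for illustration only.
const mockChoice = {
  message: { role: 'assistant', content: 'Elon Musk is a business magnate and investor.' },
  finish_reason: 'stop',
};

console.log(checkResponse(mockChoice));
// { words: 8, withinLimit: true, truncated: false }
```

If `withinLimit` is false, you could retry with a stricter instruction or trim the text yourself; which option fits depends on your application.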