I'm trying to build a very basic data flow in Azure Data Factory that pulls a JSON file from Blob Storage, transforms some columns, and stores the result in a SQL database. I originally authenticated to the storage account using Managed Identity, but I get the error below when testing the connection to the source:

com.microsoft.dataflow.broker.MissingRequiredPropertyException: account is a required property for [myStorageAccountName]. com.microsoft.dataflow.broker.PropertyNotFoundException: Could not extract value from [myStorageAccountName] - RunId: xxx

I also see the following message in the Factory Validation Output:

[MyDataSetName] AzureBlobStorage does not support SAS, MSI, or Service principal authentication in data flow.

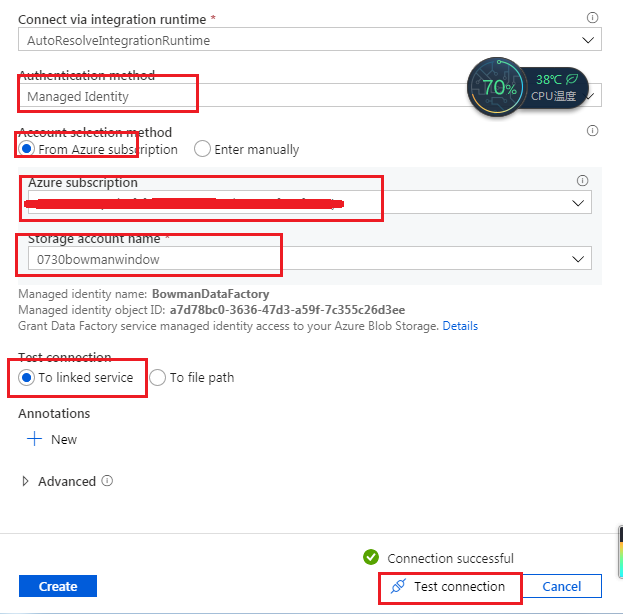

Based on this, I assumed all I needed to do was switch my Blob Storage linked service to Account Key authentication. However, after switching to Account Key authentication and selecting my subscription and storage account, I get the following error when testing the connection:

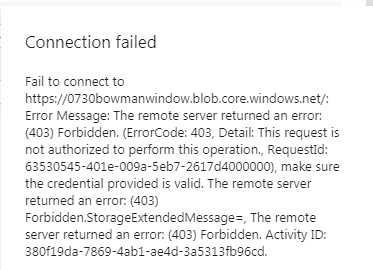

Connection failed Fail to connect to https://[myBlob].blob.core.windows.net/: Error Message: The remote server returned an error: (403) Forbidden. (ErrorCode: 403, Detail: This request is not authorized to perform this operation., RequestId: xxxx), make sure the credential provided is valid. The remote server returned an error: (403) Forbidden.StorageExtendedMessage=, The remote server returned an error: (403) Forbidden. Activity ID: xxx.

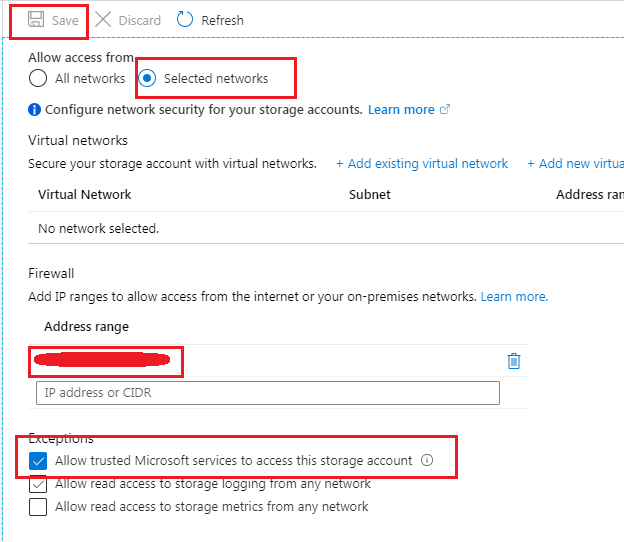
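For reference, this is my understanding of the general shape of an Account Key linked service for Blob Storage, written as a small Python sketch that builds the JSON definition. The helper name `build_blob_linked_service`, the linked service name, and the placeholder account values are illustrative assumptions, not my actual configuration.

```python
import json


def build_blob_linked_service(name, account_name, account_key):
    """Build an AzureBlobStorage linked service definition that uses an account key.

    Hypothetical helper for illustration; the values passed in are placeholders.
    """
    connection_string = (
        "DefaultEndpointsProtocol=https;"
        f"AccountName={account_name};"
        f"AccountKey={account_key};"
        "EndpointSuffix=core.windows.net"
    )
    return {
        "name": name,
        "properties": {
            "type": "AzureBlobStorage",
            "typeProperties": {"connectionString": connection_string},
        },
    }


# Placeholder values only; substitute the real account name and key.
definition = build_blob_linked_service(
    "BlobAccountKeyLinkedService", "<myStorageAccountName>", "<accountKey>"
)
print(json.dumps(definition, indent=2))
```

The definition itself looks straightforward, which is why I suspect the 403 comes from the storage account's network restrictions rather than from the key.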

I've tried selecting the storage account from my Azure subscription and also entering the key manually, and I get the same error either way. One thing to note is that the storage account only allows access from specified networks. I tried connecting to a different, public storage account and was able to access it fine. The ADF account has the Storage Account Contributor role, and I've added the IP address I'm currently working from as well as the Azure Data Factory IP ranges that I found here: https://learn.microsoft.com/en-us/azure/data-factory/azure-integration-runtime-ip-addresses
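As a sanity check on the allowlist, this is roughly how one could verify that a given address falls inside the ranges added to the storage firewall. It is a minimal Python sketch using only the standard library; `is_allowed` is a hypothetical helper, and the ranges are placeholder documentation addresses (RFC 5737), not my real rules.

```python
import ipaddress
from typing import Sequence


def is_allowed(client_ip: str, allowed_ranges: Sequence[str]) -> bool:
    """Return True if client_ip falls inside any of the allowed CIDR ranges."""
    address = ipaddress.ip_address(client_ip)
    return any(
        address in ipaddress.ip_network(cidr, strict=False)
        for cidr in allowed_ranges
    )


# Placeholder ranges standing in for the firewall rules on the storage account.
allowed_ranges = ["203.0.113.0/24", "198.51.100.17/32"]

print(is_allowed("203.0.113.42", allowed_ranges))  # True
print(is_allowed("192.0.2.10", allowed_ranges))    # False
```

This only confirms that an address matches the configured ranges. It does not tell me which address the data flow actually connects from, which may be the real question here.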

Also note, I have about 5 copy data tasks working perfectly fine with Managed Identity currently, but I need to start doing more complex operations.

This seems similar to the issue in "Unable to create a linked service in Azure Data Factory", but the Storage Account Contributor and Owner roles I have assigned should supersede the Reader role suggested in the reply. I'm also not sure whether that poster is using a public or private storage account.

Thank you in advance.