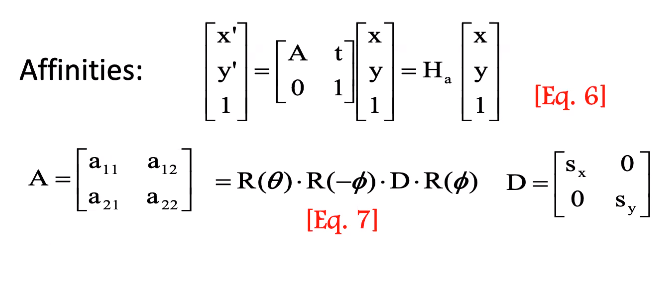

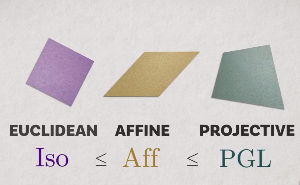

What is anisotropic scaling, and how is it achieved in image processing and computer vision?

I understand that it is some form of non-uniform scaling, as Wikipedia puts it, but I still don't have a good grasp of what it actually means when applied to images. Some recent deep learning architectures for object detection, such as R-CNN, also use it, but they don't shed much light on the topic.
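To make the question concrete, here is a minimal sketch of what I take the term to mean: the height and width are scaled by different factors, so the aspect ratio changes, whereas isotropic scaling applies one factor to both axes. The helper names `resize_nearest` and `isotropic_size`, the nearest-neighbour sampling, and the 100x200 to 400x400 sizes are my own assumptions for illustration, not something taken from R-CNN or any library.

```python
import numpy as np

def resize_nearest(image, out_h, out_w):
    """Resize by nearest-neighbour sampling; each axis gets its own scale factor."""
    in_h, in_w = image.shape[:2]
    # Map every output row/column index back to a source index.
    rows = np.arange(out_h) * in_h // out_h
    cols = np.arange(out_w) * in_w // out_w
    return image[rows[:, None], cols]

def isotropic_size(in_h, in_w, target):
    """Output size when a single factor is applied to both axes (aspect ratio kept)."""
    s = target / max(in_h, in_w)
    return max(1, round(in_h * s)), max(1, round(in_w * s))

# Hypothetical 100x200 (height x width) image with distinct pixel values.
image = np.arange(100 * 200).reshape(100, 200)

# Anisotropic: force a 400x400 square, so sy = 4.0 but sx = 2.0 (content is stretched).
aniso = resize_nearest(image, 400, 400)

# Isotropic: one factor (2.0) for both axes, so the result stays 1:2.
iso = resize_nearest(image, *isotropic_size(100, 200, 400))

print(aniso.shape, iso.shape)
```

If this reading is right, warping a region to a fixed square input would be the anisotropic case, and the isotropic case would need padding or cropping to reach the same square.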

Any examples or visual illustrations that explain the concept clearly would be much appreciated.