I doubt any such model exists.

The main issue is that languages are generated from the grammars and it is next to impossible to convert back due to the infinite number of parser trees (combinations) available for various source-codes.

So in your case, say you train on python code (1000 sample codes), the resultant grammar for training will be the same. So, the model will always generate the same grammar irrespective of the example source code.

If you use training samples from a number of languages, the model still can't generate the grammar as it consists of an infinite number of possibilities.

Your example of Google translate works for real life translation as small errors are acceptable, but these models don't rely on generating the root grammar for each language. There are some tools that can translate programming languages example, but they don't generate the grammar, work based on the grammar.

Update

How to learn grammar from code.

After comparing to some NLP concepts, I have a list of issue that may arise and a way to counter them.

Dealing with variable names, coding structures and tokens.

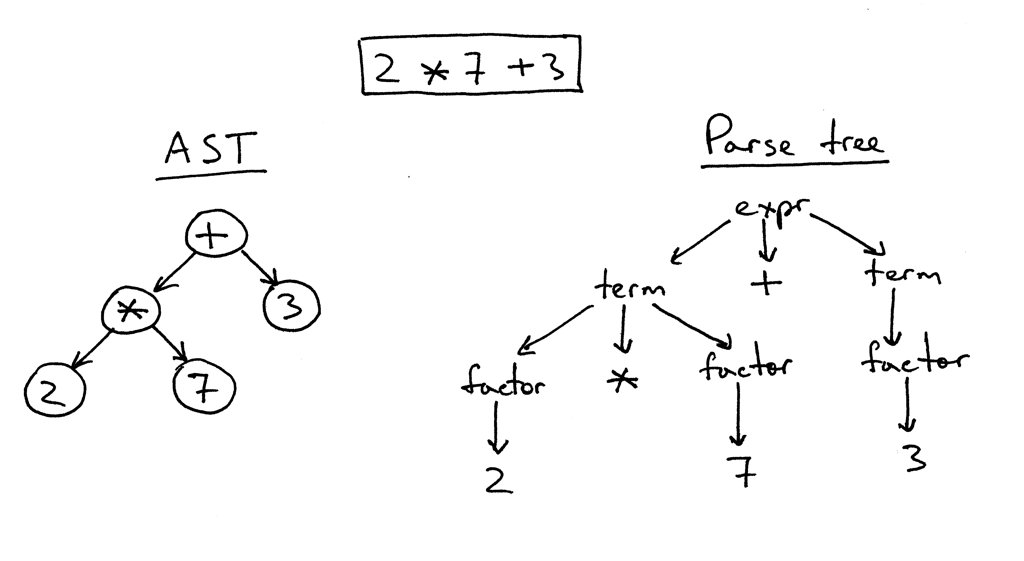

For understanding the grammar, we'll have to breakdown the code to its bare minimum form. This means understanding what each and every term in the code means. Have a look at this example

![Syntax tree]()

The already simple expression is reduced to the parse tree. We can see that the tree breaks down the expression and tags each number as a factor. This is really important to get rid of the human element of the code (such as variable names etc.) and dive into the actual grammar. In NLP this concept is known as Part of Speech tagging. You'll have to develop your own method to do the tagging, by it's easy given that you know grammar for the language.

Understanding the relations

For this, you can tokenize the reduced code and train using a model based on the output you are looking for. In case you want to write code, make use of a n grams model using LSTM like this example. The model will learn the grammar, but extracting it is not a simple task. You'll have to run separate code to try and extract all the possible relations learned by the model.

Example

Code snippet

# Sample code

int a = 1 + 2;

cout<<a;

Tags

# Sample tags and tokens

int a = 1 + 2 ;

[int] [variable] [operator] [factor] [expr] [factor] [end]

Leaving the operator, expr and keywords shouldn't matter if there is enough data present, but they will become a part of the grammar.

This is a sample to help understand my idea. You can improve on this by having a deeper look at the Theory of Computation and understanding the working of the automata and the different grammars.