I'm working on this class on convolutional neural networks. I've been trying to implement the gradient of a loss function for an svm and (I have a copy of the solution) I'm having trouble understanding why the solution is correct.

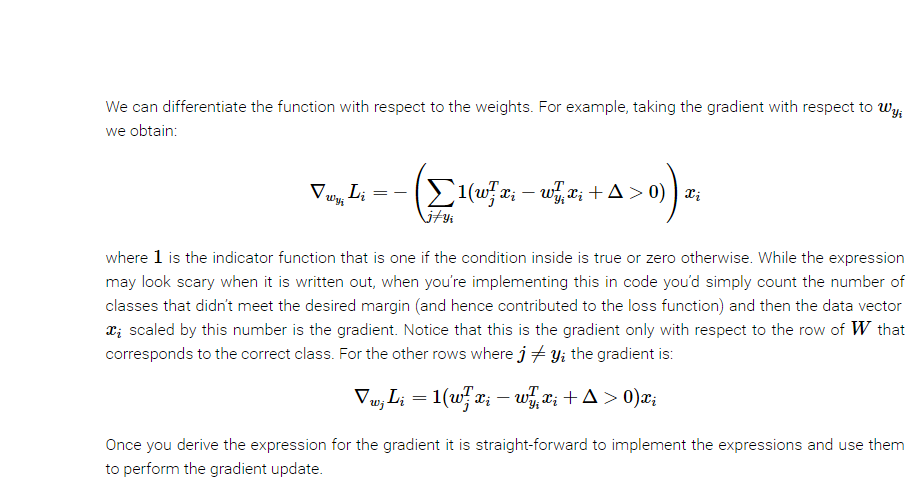

On this page it defines the gradient of the loss function to be as follows:

In my code I my analytic gradient matches with the numeric one when implemented in code as follows:

In my code I my analytic gradient matches with the numeric one when implemented in code as follows:

dW = np.zeros(W.shape) # initialize the gradient as zero

# compute the loss and the gradient

num_classes = W.shape[1]

num_train = X.shape[0]

loss = 0.0

for i in xrange(num_train):

scores = X[i].dot(W)

correct_class_score = scores[y[i]]

for j in xrange(num_classes):

if j == y[i]:

if margin > 0:

continue

margin = scores[j] - correct_class_score + 1 # note delta = 1

if margin > 0:

dW[:, y[i]] += -X[i]

dW[:, j] += X[i] # gradient update for incorrect rows

loss += margin

However, it seems like, from the notes, that dW[:, y[i]] should be changed every time j == y[i] since we subtract the the loss whenever j == y[i]. I'm very confused why the code is not:

dW = np.zeros(W.shape) # initialize the gradient as zero

# compute the loss and the gradient

num_classes = W.shape[1]

num_train = X.shape[0]

loss = 0.0

for i in xrange(num_train):

scores = X[i].dot(W)

correct_class_score = scores[y[i]]

for j in xrange(num_classes):

if j == y[i]:

if margin > 0:

dW[:, y[i]] += -X[i]

continue

margin = scores[j] - correct_class_score + 1 # note delta = 1

if margin > 0:

dW[:, j] += X[i] # gradient update for incorrect rows

loss += margin

and the loss would change when j == y[i]. Why are they both being computed when J != y[i]?

dW[:, y[i]] += -X[i]anddW[:, j] += X[i]do? I still feel confused. – Sinecure