I am learning to use Gekko's brain module for deep learning applications.

I set up a neural network to learn the numpy.cos() function and reproduce its output.

I get a good fit when the bounds on my training data are:

    x = np.linspace(0,2*np.pi,100)

But the model falls apart when I try to extend the bounds to:

    x = np.linspace(0,3*np.pi,100)

What do I need to change in my neural network to increase the flexibility of my model so that it works for other bounds?

This is my code:

    from gekko import brain
    import numpy as np
    import matplotlib.pyplot as plt

    # Set up neural network
    b = brain.Brain()
    b.input_layer(1)
    b.layer(linear=2)
    b.layer(tanh=2)
    b.layer(linear=2)
    b.output_layer(1)

    # Train neural network
    x = np.linspace(0,2*np.pi,100)
    y = np.cos(x)
    b.learn(x,y)

    # Calculate using trained neural network
    xp = np.linspace(-2*np.pi,4*np.pi,100)
    yp = b.think(xp)

    # Plot results
    plt.figure()
    plt.plot(x,y,'bo')
    plt.plot(xp,yp[0],'r-')
    plt.show()
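To put a number on "good fit" versus "falls apart" rather than judging only by eye, a root-mean-square error over the training points may help. This is a minimal sketch, not part of Gekko: `rmse` is a hypothetical helper, and the zero-valued predictions are placeholders standing in for real model output.

```python
import math

def rmse(y_true, y_pred):
    """Root-mean-square error between two equal-length sequences."""
    if len(y_true) != len(y_pred):
        raise ValueError("sequences must have the same length")
    squared = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    return math.sqrt(squared / len(y_true))

# Same 100 training points on [0, 2*pi] as in the code above
xs = [i * 2 * math.pi / 99 for i in range(100)]
y_true = [math.cos(v) for v in xs]

# Placeholder predictions (NOT Gekko output): a "model" that always predicts 0
y_flat = [0.0] * len(xs)

print("perfect fit:", rmse(y_true, y_true))
print("flat line:  ", rmse(y_true, y_flat))
```

With the network above, the same helper could presumably be called as `rmse(y, b.think(x)[0])`, assuming `b.think` returns the predictions in the same list-of-outputs shape used for `yp[0]` in the plot. Comparing that value for the 2π and 3π training ranges would show how much the fit degrades.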

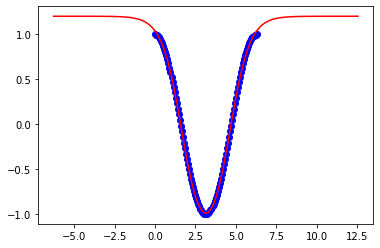

These are results to 2pi:

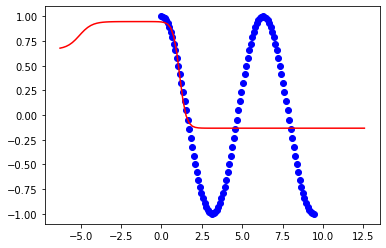

These are results to 3pi: