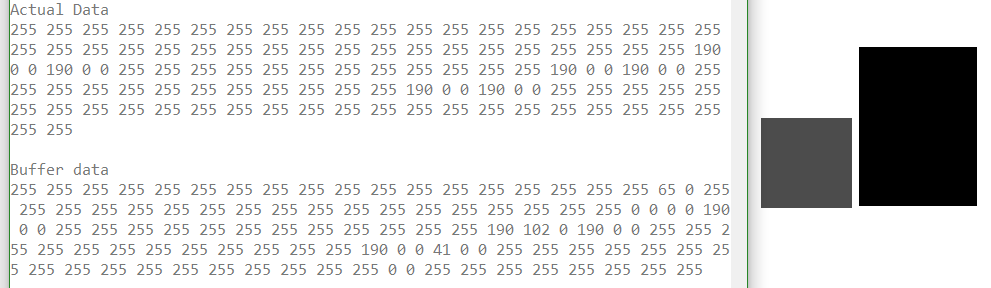

Is `glGetBufferSubData` used for both regular buffers and textures? I am trying to troubleshoot why my texture is not showing up, and when I use `glGetBufferSubData` to read the data back I get garbage.

```cpp
struct TypeGLtexture // Associate texture data with a GL buffer
{
    TypeGLbufferID GLbuffer;
    TypeImageFile ImageFile;

    void GenerateGLbuffer()
    {
        if (GLbuffer.isActive == true || ImageFile.GetPixelArray().size() == 0) return;

        GLbuffer.isActive = true;
        GLbuffer.isTexture = true;
        GLbuffer.Name = "Texture Buffer";
        GLbuffer.ElementCount = ImageFile.GetPixelArray().size();

        glEnable(GL_TEXTURE_2D);
        glGenTextures(1, &GLbuffer.ID);            // create ONE texture object and return its handle/ID
        glBindTexture(GL_TEXTURE_2D, GLbuffer.ID); // bind the object to the GL_TEXTURE_2D target
        glTexImage2D(GL_TEXTURE_2D, 0, GL_RGB,
                     ImageFile.GetProperties().width, ImageFile.GetProperties().height,
                     0, GL_RGB, GL_UNSIGNED_BYTE, &(ImageFile.GetPixelArray()[0]));

        if (ImageFile.GetProperties().width == 6) {
            cout << "Actual Data" << endl;
            for (unsigned i = 0; i < GLbuffer.ElementCount; i++) cout << (int)ImageFile.GetPixelArray()[i] << " ";
            cout << endl << endl;

            cout << "Buffer data" << endl;
            GLubyte read[GLbuffer.ElementCount]; // read back from the texture (to make sure)
            glGetBufferSubData(GL_TEXTURE_2D, 0, GLbuffer.ElementCount, read);
            for (unsigned i = 0; i < GLbuffer.ElementCount; i++) cout << (int)read[i] << " ";
            cout << endl << endl;
        }
    }
};
```
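One possible cause of a mismatch between the original pixels and a readback, offered here as an assumption rather than a confirmed diagnosis: OpenGL's default `GL_UNPACK_ALIGNMENT` and `GL_PACK_ALIGNMENT` are both 4, so each row of `GL_RGB` / `GL_UNSIGNED_BYTE` data is treated as padded to a multiple of 4 bytes. With a width of 6, a row holds 18 bytes of pixels but is stepped over as 20 bytes. The sketch below is plain C++ with no GL calls; `paddedRowBytes` and `stripRowPadding` are hypothetical helper names that only compute that row stride and remove the padding from a readback so it can be compared element by element with the source array.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical helper: bytes per row OpenGL assumes for GL_UNSIGNED_BYTE data,
// given components per pixel (3 for GL_RGB) and the pack/unpack alignment (default 4).
std::size_t paddedRowBytes(std::size_t width, std::size_t components,
                           std::size_t alignment) {
    std::size_t tight = width * components;                  // 1 byte per component
    return (tight + alignment - 1) / alignment * alignment;  // round up to a multiple of alignment
}

// Hypothetical helper: copy a row-padded image (e.g. what a readback returns
// under the given pack alignment) into a tightly packed array.
// Assumes `padded` holds at least stride * (height - 1) + width * components bytes.
std::vector<unsigned char> stripRowPadding(const std::vector<unsigned char>& padded,
                                           std::size_t width, std::size_t height,
                                           std::size_t components, std::size_t alignment) {
    std::size_t tight = width * components;
    std::size_t stride = paddedRowBytes(width, components, alignment);
    std::vector<unsigned char> out;
    out.reserve(tight * height);
    for (std::size_t row = 0; row < height; ++row) {
        const unsigned char* begin = padded.data() + row * stride;
        out.insert(out.end(), begin, begin + tight);  // keep only the pixel bytes of this row
    }
    return out;
}
```

For a 6-pixel-wide RGB image, `paddedRowBytes(6, 3, 4)` gives 20 while `paddedRowBytes(6, 3, 1)` gives 18. If this is the issue, calling `glPixelStorei(GL_UNPACK_ALIGNMENT, 1)` before `glTexImage2D` (and `glPixelStorei(GL_PACK_ALIGNMENT, 1)` before reading back) makes OpenGL treat rows as tightly packed.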

EDIT:

Using `glGetTexImage(GL_TEXTURE_2D, 0, GL_RGB, GL_UNSIGNED_BYTE, read);` instead, the data still differs: