As others have discussed, GLSL lacks any kind of printf debugging.

But sometimes I really want to examine numeric values while debugging my shaders.

I've been trying to create a visual debugging tool.

I found that it's possible to render an arbitrary series of digits fairly easily in a shader, if you work with a sampler2D in which the digits 0123456789 have been rendered in monospace. Basically, you just juggle your x coordinate.
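To make the x-coordinate juggling concrete, here is a minimal Python sketch of the mapping, written for the CPU rather than in GLSL. It assumes a strip texture whose digit cells start at x = 0 and are all `digit_width` pixels wide. The function name `source_x` and its parameters are hypothetical, chosen only for this illustration.

```python
def source_x(screen_x, digit, digit_width=100.0):
    """Map a screen x coordinate to an x coordinate in a '0123456789' strip.

    screen_x    -- x position of the fragment, relative to the start of the
                   rendered number (hypothetical parameter)
    digit       -- the digit (0-9) that should appear in the cell containing screen_x
    digit_width -- assumed width of one monospace digit cell, in pixels
    """
    offset_in_cell = screen_x % digit_width      # how far into the current cell we are
    return digit * digit_width + offset_in_cell  # same offset inside the chosen digit's cell


# A fragment 30 pixels into the third cell (x = 230) that should show a '7'
# samples the strip 30 pixels into the cell holding '7'.
print(source_x(230.0, 7))  # 730.0
```

The shader's `textureSourcePosition` computation does the same thing, with `remainder` playing the role of `offset_in_cell`.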

Now, to use this to examine a floating-point number, I need an algorithm for converting a float to a sequence of decimal digits, such as you might find in any printf implementation.

Unfortunately, as far as I understand the topic, these algorithms seem to need to represent the floating-point number in a higher-precision format, and I don't see how this is going to be possible in GLSL where I seem to have only 32-bit floats available.
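The 32-bit constraint can be illustrated on the CPU. The sketch below uses only the standard library to round a value to the nearest IEEE 754 single-precision float and print the exact decimal value that float holds. The helper `to_float32` is a hypothetical name, and this only models storage: GPU arithmetic may be less precise than IEEE 754 requires, so it does not reproduce shader behaviour.

```python
import struct
from decimal import Decimal


def to_float32(x):
    """Round a Python float (64-bit) to the nearest 32-bit float and back."""
    return struct.unpack('f', struct.pack('f', x))[0]


for value in (1.0, 2.5, 2.6):
    stored = to_float32(value)
    # Decimal(float) gives the exact value held by the binary float.
    status = 'exact' if stored == value else 'rounded'
    print('%s -> %s (%s)' % (value, Decimal(stored), status))
```

Values such as 1.0 and 2.5 survive the round trip unchanged, whereas 2.6 does not, so any digit-extraction scheme starts from a slightly different number than the one written in the source.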

For this reason, I think this question is not a duplicate of any general "how does printf work" question, but rather specifically about how such algorithms can be made to work under the constraints of GLSL. I've seen this question and answer, but have no idea what's going on there.

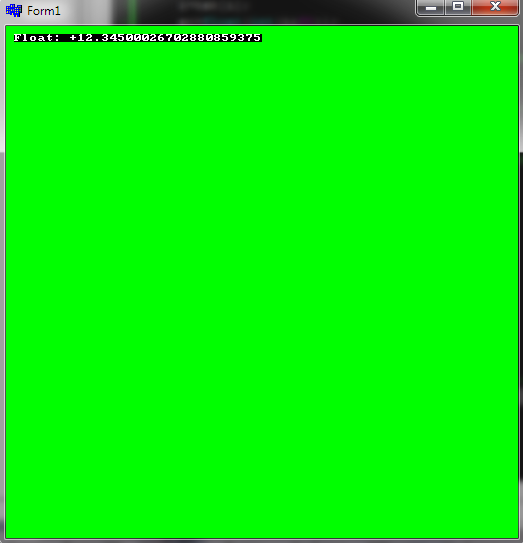

The algorithms I've tried aren't very good.

My first try, marked Version A (commented out), seemed pretty bad: to take three random examples, RenderDecimal(1.0) rendered as 1.099999702, RenderDecimal(2.5) gave me 2.599999246 and RenderDecimal(2.6) came out as 2.699999280. My second try, marked Version B, seemed slightly better: 1.0 and 2.6 both come out fine, but RenderDecimal(2.5) still mismatches an apparent rounding-up of the 5 with the fact that the residual is 0.099.... The result appears as 2.599000022.
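For reference, the two attempts can be modelled on the CPU. The following Python sketch mirrors the Version A and Version B digit extraction from the fragment shader. The function names are hypothetical, and it runs in Python's double precision with the C library's `pow` and `log`, so it is not expected to reproduce the GPU output quoted above.

```python
import math


def order_of_magnitude(x):
    # Mirrors OrderOfMagnitude() in the fragment shader.
    return 0.0 if x == 0.0 else math.floor(math.log(abs(x)) / math.log(10.0))


def digit_version_a(value, decimal_position):
    # Version A: scale the value so the wanted digit lands in the units place.
    return math.floor(abs(value) * math.pow(10.0, -decimal_position)) % 10.0


def digit_version_b(value, decimal_position):
    # Version B: peel digits off from the most significant one downwards,
    # subtracting each digit's contribution from a running remainder.
    value = abs(value)
    dp = max(0.0, order_of_magnitude(value))
    running_value = value
    digit_value = 0.0
    while dp >= decimal_position:
        base = math.pow(10.0, dp)
        digit_value = math.floor(running_value / base) % 10.0
        running_value -= digit_value * base
        dp -= 1.0
    return digit_value


# Digits of 2.5 at the units place (0) and the first decimal place (-1).
for position in (0, -1):
    print('%d: A=%s B=%s' % (position,
                             digit_version_a(2.5, position),
                             digit_version_b(2.5, position)))
```

In double precision both versions give the digits 2 and 5 for 2.5, which suggests (but does not prove) that the stray digits seen on the GPU come from the precision of the shader's arithmetic rather than from the extraction logic itself.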

My minimal/complete/verifiable example, below, starts with some shortish GLSL 1.20 code, and then I happen to have chosen Python 2.x for the rest, just to get the shaders compiled and the textures loaded and rendered. It requires the pygame, NumPy, PyOpenGL and PIL third-party packages. Note that the Python is really just boilerplate and could be trivially (though tediously) re-written in C or anything else. Only the GLSL code at the top is critical for this question, and for this reason I don't think the python or python 2.x tags would be helpful.

It requires the following image to be saved as digits.png:

    vertexShaderSource = """\

    varying vec2 vFragCoordinate;
    void main(void)
    {
        vFragCoordinate = gl_Vertex.xy;
        gl_Position = gl_ModelViewProjectionMatrix * gl_Vertex;
    }

    """
    fragmentShaderSource = """\

    varying vec2      vFragCoordinate;

    uniform vec2      uTextureSize;
    uniform sampler2D uTextureSlotNumber;

    float OrderOfMagnitude( float x )
    {
        return x == 0.0 ? 0.0 : floor( log( abs( x ) ) / log( 10.0 ) );
    }
    void RenderDecimal( float value )
    {
        // Assume that the texture to which uTextureSlotNumber refers contains
        // a rendering of the digits '0123456789' packed together, such that
        const vec2 startOfDigitsInTexture = vec2( 0, 0 ); // the lower-left corner of the first digit starts here and
        const vec2 sizeOfDigit = vec2( 100, 125 ); // each digit spans this many pixels
        const float nSpaces = 10.0; // assume we have this many digits' worth of space to render in

        value = abs( value );
        vec2 pos = vFragCoordinate - startOfDigitsInTexture;
        float dpstart = max( 0.0, OrderOfMagnitude( value ) );
        float decimal_position = dpstart - floor( pos.x / sizeOfDigit.x );
        float remainder = mod( pos.x, sizeOfDigit.x );

        if( pos.x >= 0 && pos.x < sizeOfDigit.x * nSpaces && pos.y >= 0 && pos.y < sizeOfDigit.y )
        {
            float digit_value;

            // Version B
            float dp, running_value = value;
            for( dp = dpstart; dp >= decimal_position; dp -= 1.0 )
            {
                float base = pow( 10.0, dp );
                digit_value = mod( floor( running_value / base ), 10.0 );
                running_value -= digit_value * base;
            }

            // Version A
            //digit_value = mod( floor( value * pow( 10.0, -decimal_position ) ), 10.0 );

            vec2 textureSourcePosition = vec2( startOfDigitsInTexture.x + remainder + digit_value * sizeOfDigit.x, startOfDigitsInTexture.y + pos.y );
            gl_FragColor = texture2D( uTextureSlotNumber, textureSourcePosition / uTextureSize );
        }

        // Render the decimal point
        if( ( decimal_position == -1.0 && remainder / sizeOfDigit.x < 0.1 && abs( pos.y ) / sizeOfDigit.y < 0.1 ) ||
            ( decimal_position ==  0.0 && remainder / sizeOfDigit.x > 0.9 && abs( pos.y ) / sizeOfDigit.y < 0.1 ) )
        {
            gl_FragColor = texture2D( uTextureSlotNumber, ( startOfDigitsInTexture + sizeOfDigit * vec2( 1.5, 0.5 ) ) / uTextureSize );
        }
    }

    void main(void)
    {
        gl_FragColor = texture2D( uTextureSlotNumber, vFragCoordinate / uTextureSize );
        RenderDecimal( 2.5 ); // for current demonstration purposes, just a constant
    }

    """

    # Python (PyOpenGL) code to demonstrate the above
    # (Note: the same OpenGL calls could be made from any language)

    import os, sys, time

    import OpenGL
    from OpenGL.GL import *
    from OpenGL.GLU import *

    import pygame, pygame.locals # just for getting a canvas to draw on

    try: from PIL import Image # PIL.Image module for loading image from disk
    except ImportError: import Image # old PIL didn't package its submodules on the path

    import numpy # for manipulating pixel values on the Python side

    def CompileShader( type, source ):
        shader = glCreateShader( type )
        glShaderSource( shader, source )
        glCompileShader( shader )
        result = glGetShaderiv( shader, GL_COMPILE_STATUS )
        if result != 1:
            raise Exception( "Shader compilation failed:\n" + glGetShaderInfoLog( shader ) )
        return shader

    class World:
        def __init__( self, width, height ):

            self.window = pygame.display.set_mode( ( width, height ), pygame.OPENGL | pygame.DOUBLEBUF )

            # compile shaders
            vertexShader = CompileShader( GL_VERTEX_SHADER, vertexShaderSource )
            fragmentShader = CompileShader( GL_FRAGMENT_SHADER, fragmentShaderSource )
            # build shader program
            self.program = glCreateProgram()
            glAttachShader( self.program, vertexShader )
            glAttachShader( self.program, fragmentShader )
            glLinkProgram( self.program )
            # try to activate/enable shader program, handling errors wisely
            try:
                glUseProgram( self.program )
            except OpenGL.error.GLError:
                print( glGetProgramInfoLog( self.program ) )
                raise

            # enable alpha blending
            glTexEnvf( GL_TEXTURE_ENV, GL_TEXTURE_ENV_MODE, GL_MODULATE )
            glEnable( GL_DEPTH_TEST )
            glEnable( GL_BLEND )
            glBlendEquation( GL_FUNC_ADD )
            glBlendFunc( GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA )

            # set projection and background color
            gluOrtho2D( 0, width, 0, height )
            glClearColor( 0.0, 0.0, 0.0, 1.0 )

            self.uTextureSlotNumber_addr = glGetUniformLocation( self.program, 'uTextureSlotNumber' )
            self.uTextureSize_addr = glGetUniformLocation( self.program, 'uTextureSize' )

        def RenderFrame( self, *textures ):
            glClear( GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT )
            for t in textures: t.Draw( world=self )
            pygame.display.flip()

        def Close( self ):
            pygame.display.quit()

        def Capture( self ):
            w, h = self.window.get_size()
            rawRGB = glReadPixels( 0, 0, w, h, GL_RGB, GL_UNSIGNED_BYTE )
            return Image.frombuffer( 'RGB', ( w, h ), rawRGB, 'raw', 'RGB', 0, 1 ).transpose( Image.FLIP_TOP_BOTTOM )

    class Texture:
        def __init__( self, source, slot=0, position=(0,0,0) ):

            # wrangle array
            source = numpy.array( source )
            if source.dtype.type not in [ numpy.float32, numpy.float64 ]: source = source.astype( float ) / 255.0
            while source.ndim < 3: source = numpy.expand_dims( source, -1 )
            if source.shape[ 2 ] == 1: source = source[ :, :, [ 0, 0, 0 ] ] # LUMINANCE -> RGB
            if source.shape[ 2 ] == 2: source = source[ :, :, [ 0, 0, 0, 1 ] ] # LUMINANCE_ALPHA -> RGBA
            if source.shape[ 2 ] == 3: source = source[ :, :, [ 0, 1, 2, 2 ] ]; source[ :, :, 3 ] = 1.0 # RGB -> RGBA
            # now it can be transferred as GL_RGBA and GL_FLOAT

            # housekeeping
            self.textureSize = [ source.shape[ 1 ], source.shape[ 0 ] ]
            self.textureSlotNumber = slot
            self.textureSlotCode = getattr( OpenGL.GL, 'GL_TEXTURE%d' % slot )
            self.listNumber = slot + 1
            self.position = list( position )

            # transfer texture content
            glActiveTexture( self.textureSlotCode )
            self.textureID = glGenTextures( 1 )
            glBindTexture( GL_TEXTURE_2D, self.textureID )
            glEnable( GL_TEXTURE_2D )
            glTexImage2D( GL_TEXTURE_2D, 0, GL_RGBA32F, self.textureSize[ 0 ], self.textureSize[ 1 ], 0, GL_RGBA, GL_FLOAT, source[ ::-1 ] )
            glTexParameterf( GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_NEAREST )
            glTexParameterf( GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_NEAREST )

            # define surface
            w, h = self.textureSize
            glNewList( self.listNumber, GL_COMPILE )
            glBegin( GL_QUADS )
            glColor3f( 1, 1, 1 )
            glNormal3f( 0, 0, 1 )
            glVertex3f( 0, h, 0 )
            glVertex3f( w, h, 0 )
            glVertex3f( w, 0, 0 )
            glVertex3f( 0, 0, 0 )
            glEnd()
            glEndList()

        def Draw( self, world ):
            glPushMatrix()
            glTranslate( *self.position )
            glUniform1i( world.uTextureSlotNumber_addr, self.textureSlotNumber )
            glUniform2f( world.uTextureSize_addr, *self.textureSize )
            glCallList( self.listNumber )
            glPopMatrix()

    world = World( 1000, 800 )
    digits = Texture( Image.open( 'digits.png' ) )
    done = False
    while not done:
        world.RenderFrame( digits )
        for event in pygame.event.get():
            # Press 'q' to quit or 's' to save a timestamped snapshot
            if event.type == pygame.locals.QUIT: done = True
            elif event.type == pygame.locals.KEYUP and event.key in [ ord( 'q' ), 27 ]: done = True
            elif event.type == pygame.locals.KEYUP and event.key in [ ord( 's' ) ]:
                world.Capture().save( time.strftime( 'snapshot-%Y%m%d-%H%M%S.png' ) )
    world.Close()