I've implemented backpropagation as explained in this video. https://class.coursera.org/ml-005/lecture/51

This seems to have worked successfully, passing gradient checking and allowing me to train on MNIST digits.

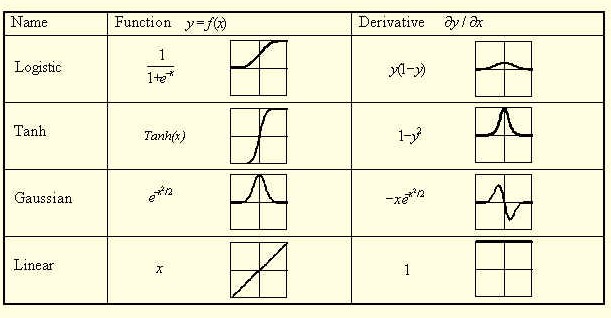

However, I've noticed most other explanations of backpropagation calculate the output delta as

d = (a - y) * f'(z) http://ufldl.stanford.edu/wiki/index.php/Backpropagation_Algorithm

whilst the video uses.

d = (a - y).

When I multiply my delta by the activation derivative (sigmoid derivative), I no longer end up with the same gradients as gradient checking (at least an order of magnitude in difference).

What allows Andrew Ng (video) to leave out the derivative of the activation for the output delta? And why does it work? Yet when adding the derivative, incorrect gradients are calculated?

EDIT

I have now tested with linear and sigmoid activation functions on the output, gradient checking only passes when I use Ng's delta equation (no sigmoid derivative) for both cases.