Could someone please explain multiplexing in relation to HTTP/2 and how it works?

Put simply, multiplexing allows your Browser to fire off multiple requests at once on the same connection and receive the requests back in any order.

And now for the much more complicated answer...

When you load a web page, it downloads the HTML page, it sees it needs some CSS, some JavaScript, a load of images... etc.

Under HTTP/1.1 you can only download one of those at a time on your HTTP/1.1 connection. So your browser downloads the HTML, then it asks for the CSS file. When that's returned it asks for the JavaScript file. When that's returned it asks for the first image file... etc. HTTP/1.1 is basically synchronous - once you send a request you're stuck until you get a response. This means most of the time the browser is not doing very much, as it has fired off a request, is waiting for a response, then fires off another request, then is waiting for a response... etc. Of course complex sites with lots of JavaScript do require the Browser to do lots of processing, but that depends on the JavaScript being downloaded so, at least for the beginning, the delays inherit to HTTP/1.1 do cause problems. Typically the server isn't doing very much either (at least per request - of course they add up for busy sites), because it should respond almost instantly for static resources (like CSS, JavaScript, images, fonts... etc.) and hopefully not too much longer even for dynamic requests (that require a database call or the like).

So one of the main issues on the web today is the network latency in sending the requests between browser and server. It may only be tens or perhaps hundreds of millisecond, which might not seem much, but they add up and are often the slowest part of web browsing - especially as websites get more complex and require extra resources (as they are getting) and Internet access is increasingly via mobile (with slower latency than broadband).

As an example let's say there are 10 resources that your web page needs to load after the HTML is loaded itself (which is a very small site by today's standards as 100+ resources is common, but we'll keep it simple and go with this example). And let's say each request takes 100ms to travel across the Internet to web server and back and the processing time at either end is negligible (let's say 0 for this example for simplicity sake). As you have to send each resource and wait for a response one at a time, this will take 10 * 100ms = 1,000ms or 1 second to download the whole site.

To get around this, browsers usually open multiple connections to the web server (typically 6). This means a browser can fire off multiple requests at the same time, which is much better, but at the cost of the complexity of having to set-up and manage multiple connections (which impacts both browser and server). Let's continue the previous example and also say there are 4 connections and, for simplicity, let's say all requests are equal. In this case you can split the requests across all four connections, so two will have 3 resources to get, and two will have 2 resources to get totally the ten resources (3 + 3 + 2 + 2 = 10). In that case the worst case is 3 round times or 300ms = 0.3 seconds - a good improvement, but this simple example does not include the cost of setting up those multiple connections, nor the resource implications of managing them (which I've not gone into here as this answer is long enough already but setting up separate TCP connections does take time and other resources - to do the TCP connection, HTTPS handshake and then get up to full speed due to TCP slow start).

HTTP/2 allows you to send off multiple requests on the same connection - so you don't need to open multiple connections as per above. So your browser can say "Gimme this CSS file. Gimme that JavaScript file. Gimme image1.jpg. Gimme image2.jpg... Etc." to fully utilise the one single connection. This has the obvious performance benefit of not delaying sending of those requests waiting for a free connection. All these requests make their way through the Internet to the server in (almost) parallel. The server responds to each one, and then they start to make their way back. In fact it's even more powerful than that as the web server can respond to them in any order it feels like and send back files in different order, or even break each file requested into pieces and intermingle the files together. This has the secondary benefit of one heavy request not blocking all the other subsequent requests (known as the head of line blocking issue). The web browser then is tasked with putting all the pieces back together. In best case (assuming no bandwidth limits - see below), if all 10 requests are fired off pretty much at once in parallel, and are answered by the server immediately, this means you basically have one round trip or 100ms or 0.1 seconds, to download all 10 resources. And this has none of the downsides that multiple connections had for HTTP/1.1! This is also much more scalable as resources on each website grow (currently browsers open up to 6 parallel connections under HTTP/1.1 but should that grow as sites get more complex?).

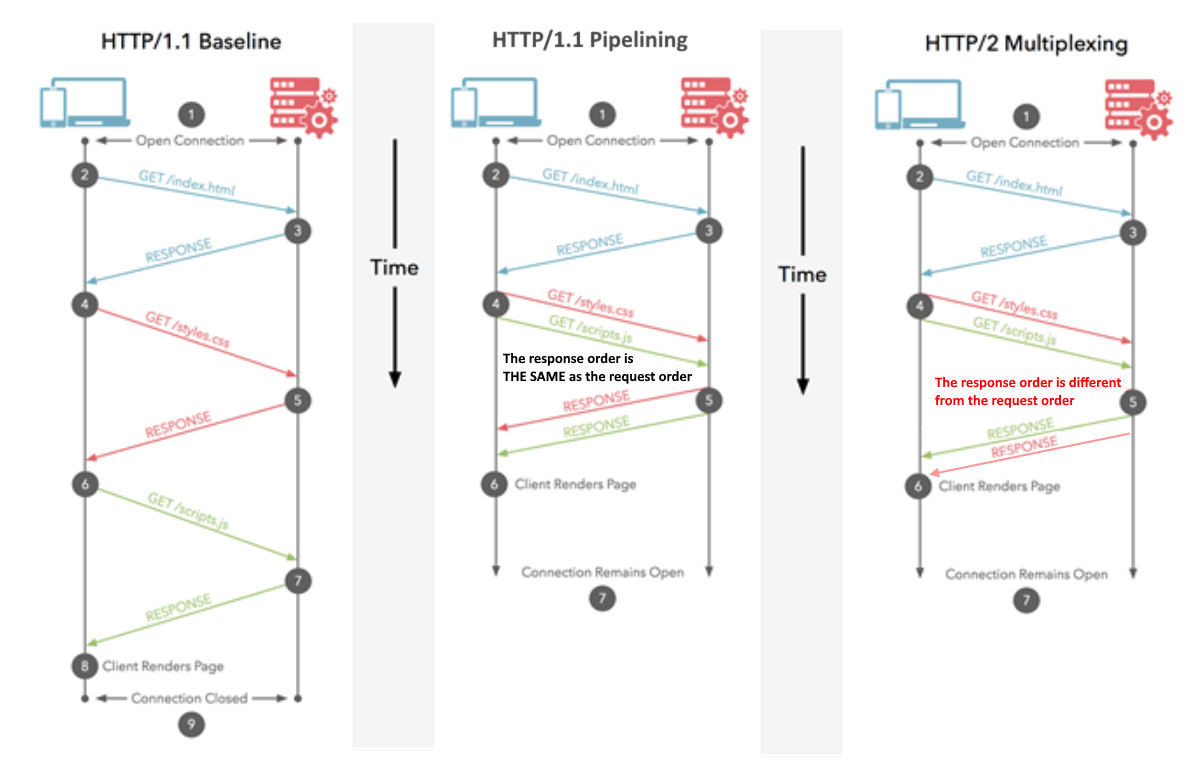

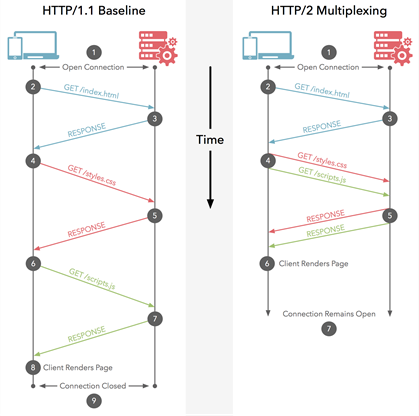

This diagram shows the differences, and there is an animated version too.

Note: HTTP/1.1 does have the concept of pipelining which also allows multiple requests to be sent off at once. However they still had to be returned in order they were requested, in their entirety, so nowhere near as good as HTTP/2, even if conceptually it's similar. Not to mention the fact this is so poorly supported by both browsers and servers that it is rarely used.

One thing highlighted in below comments is how bandwidth impacts us here. Of course your Internet connection is limited by how much you can download and HTTP/2 does not address that. So if those 10 resources discussed in above examples are all massive print-quality images, then they will still be slow to download. However, for most web browser, bandwidth is less of a problem than latency. So if those ten resources are small items (particularly text resources like CSS and JavaScript which can be gzipped to be tiny), as is very common on websites, then bandwidth is not really an issue - it's the sheer volume of resources that is often the problem and HTTP/2 looks to address that. This is also why concatenation is used in HTTP/1.1 as another workaround, so for example all CSS is often joined together into one file: the amount of CSS downloaded is the same but by doing it as one resource there are huge performance benefits (though less so with HTTP/2 and in fact some say concatenation should be an anti-pattern under HTTP/2 - though there are arguments against doing away with it completely too).

To put it as a real world example: assume you have to order 10 items from a shop for home delivery:

HTTP/1.1 with one connection means you have to order them one at a time and you cannot order the next item until the last arrives. You can understand it would take weeks to get through everything.

HTTP/1.1 with multiple connections means you can have a (limited) number of independent orders on the go at the same time.

HTTP/1.1 with pipelining means you can ask for all 10 items one after the other without waiting, but then they all arrive in the specific order you asked for them. And if one item is out of stock then you have to wait for that before you get the items you ordered after that - even if those later items are actually in stock! This is a bit better but is still subject to delays, and let's say most shops don't support this way of ordering anyway.

HTTP/2 means you can order your items in any particular order - without any delays (similar to above). The shop will dispatch them as they are ready, so they may arrive in a different order than you asked for them, and they may even split items so some parts of that order arrive first (so better than above). Ultimately this should mean you 1) get everything quicker overall and 2) can start working on each item as it arrives ("oh that's not as nice as I thought it would be, so I might want to order something else as well or instead").

Of course you're still limited by the size of your postman's van (the bandwidth) so they might have to leave some packages back at the sorting office until the next day if they are full up for that day, but that's rarely a problem compared to the delay in actually sending the order across and back. Most of web browsing involves sending small letters back and forth, rather than bulky packages.

HTTP/2 means you can order your items in any particular order, so how can you manage the order of your js scripts ? for example if you are using jQuery, you should load it first before using it inside your own script. In that case the order does matter, and you will have a problem if your script is loaded before jQuery –

Boothe Since @Juanma Menendez answer is correct while his diagram is confusing, I decided to improve upon it, clarifying the difference between multiplexing and pipelining, the notions that are often conflated.

Pipelining (HTTP/1.1)

Multiple requests are sent over the same HTTP connection. Responses are received in the same order. If the first response takes a lot of time, other responses have to wait in line. Similar to CPU pipeling where an instruction is fetched while another one is being decoded. Multiple instructions are in flight at the same time, but their order is preserved.

Multiplexing (HTTP/2)

Multiple requests are sent over the same HTTP connection. Responses are received in the arbitrary order. No need to wait for a slow response that's blocking others. Similar to out-of-order instruction execution in modern CPUs.

Hopefully the improved image clarifies the difference:

Request multiplexing

HTTP/2 can send multiple requests for data in parallel over a single TCP connection. This is the most advanced feature of the HTTP/2 protocol because it allows you to download web files asynchronously from one server. Most modern browsers limit TCP connections to one server. This reduces the additional round trip time (RTT), making your website load faster without any optimization, and makes domain sharding unnecessary.

Multiplexing in HTTP 2.0 is the type of relationship between the browser and the server that use a single connection to deliver multiple requests and responses in parallel, creating many individual frames in this process.

Multiplexing breaks away from the strict request-response semantics and enables one-to-many or many-to-many relationships.

Simple Ans (Source) :

Multiplexing means your browser can send multiple requests and receive multiple responses "bundled" into a single TCP connection. So the workload associated with DNS lookups and handshakes is saved for files coming from the same server.

Complex/Detailed Ans:

Look out the answer provided by @BazzaDP.

© 2022 - 2024 — McMap. All rights reserved.