I'm trying to implement a virtual pan-tilt-zoom (PTZ) camera based on images from a physical fisheye camera (180-degree FOV).

I think I need to implement the following sequence of steps:

- Get the coordinates of the center of the fisheye circle in the coordinate system of the sensor matrix.

- Get the radius of the fisheye circle in the same coordinate system.

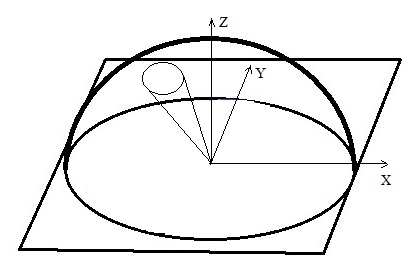

- Generate the equation of a sphere that has the same center and radius as the fisheye circle on the flat camera sensor.

- Project all colored points from the flat image onto the upper hemisphere.

- Choose angles in the XY plane and the XZ plane to describe the viewing direction of the virtual PTZ camera.

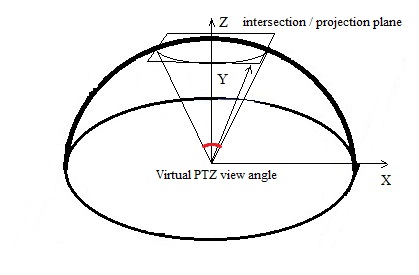

- Choose a view angle and mark it with a circle, drawn on the surface of the hemisphere, around the virtual PTZ view vector.

- Generate the equation of the plane whose intersection with the hemisphere is that circle around the viewing direction.

- Move all colored points inside the circle onto the plane of the circle, projecting along the direction from the hemisphere surface toward the hemisphere center.

- Fill in all unpainted points inside the projected circle using interpolation (as implemented in `cv::remap`).
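
To make the steps above concrete, here is a minimal sketch of how the lookup tables for `cv::remap` might be built. Note that it runs in the opposite direction to the list: instead of pushing fisheye pixels forward onto the view plane, it starts from each pixel of the virtual view and finds the fisheye pixel it comes from, which is the form of table that `cv::remap` consumes. Everything here is an assumption for illustration: the function names are hypothetical, the optical axis is taken as +Z, and the lens is assumed to be equidistant (image radius proportional to the angle from the axis, reaching the circle radius at 90 degrees).

```python
import math


def view_ray(u, v, width, height, fov_deg):
    """Unit ray through pixel (u, v) of a pinhole view looking along +Z."""
    # Focal length in pixels for the requested horizontal field of view.
    f = (width / 2.0) / math.tan(math.radians(fov_deg) / 2.0)
    x = u - (width - 1) / 2.0
    y = v - (height - 1) / 2.0
    n = math.sqrt(x * x + y * y + f * f)
    return (x / n, y / n, f / n)


def rotate_pan_tilt(ray, pan_deg, tilt_deg):
    """Tilt the ray away from +Z (rotation about Y), then pan it about Z."""
    x, y, z = ray
    t = math.radians(tilt_deg)
    p = math.radians(pan_deg)
    x1 = x * math.cos(t) + z * math.sin(t)
    z1 = -x * math.sin(t) + z * math.cos(t)
    x2 = x1 * math.cos(p) - y * math.sin(p)
    y2 = x1 * math.sin(p) + y * math.cos(p)
    return (x2, y2, z1)


def ray_to_fisheye(ray, cx, cy, radius):
    """Assumed equidistant model: r = radius * theta / (pi / 2)."""
    x, y, z = ray
    theta = math.acos(max(-1.0, min(1.0, z)))  # angle from the optical axis
    r = radius * theta / (math.pi / 2.0)
    phi = math.atan2(y, x)
    return (cx + r * math.cos(phi), cy + r * math.sin(phi))


def build_maps(width, height, fov_deg, pan_deg, tilt_deg, cx, cy, radius):
    """For every output pixel, the fisheye (x, y) it should be sampled from."""
    map_x = [[0.0] * width for _ in range(height)]
    map_y = [[0.0] * width for _ in range(height)]
    for v in range(height):
        for u in range(width):
            ray = rotate_pan_tilt(view_ray(u, v, width, height, fov_deg),
                                  pan_deg, tilt_deg)
            map_x[v][u], map_y[v][u] = ray_to_fisheye(ray, cx, cy, radius)
    return map_x, map_y
```

Converted to single-channel 32-bit float arrays, `map_x` and `map_y` could be passed to `cv::remap` as its two map arguments, which then takes care of the interpolation. Rays pointing more than 90 degrees away from the optical axis land outside the fisheye circle and would need to be handled by the border mode.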

I think the most important step is raising the colored points from the flat image onto the 3-D hemisphere.

My question is: is it correct to simply assign each colored point of the flat image a Z coordinate according to the hemisphere equation, in order to raise the points from the image plane onto the hemisphere surface?
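
To illustrate what is at stake, the sketch below compares two ways of lifting a pixel onto the unit hemisphere. Taking Z from the sphere equation is the same as assuming an orthographic lens model, r = R sin(theta). Many fisheye lenses are instead described as approximately equidistant, r proportional to theta. Which model fits a particular camera is an assumption that would have to be checked by calibration; the function names are hypothetical.

```python
import math


def lift_orthographic(px, py, cx, cy, radius):
    """Take Z from x^2 + y^2 + z^2 = R^2, then scale to a unit vector."""
    x, y = px - cx, py - cy
    z2 = radius * radius - x * x - y * y
    if z2 < 0.0:
        return None  # pixel lies outside the fisheye circle
    return (x / radius, y / radius, math.sqrt(z2) / radius)


def lift_equidistant(px, py, cx, cy, radius):
    """Assume the angle from the axis grows linearly with image radius."""
    x, y = px - cx, py - cy
    r = math.hypot(x, y)
    if r > radius:
        return None  # pixel lies outside the fisheye circle
    if r == 0.0:
        return (0.0, 0.0, 1.0)
    theta = (r / radius) * (math.pi / 2.0)  # 90 degrees at the circle edge
    s = math.sin(theta)
    return (s * x / r, s * y / r, math.cos(theta))


def angle_from_axis_deg(p):
    """Angle in degrees between unit vector p and the +Z optical axis."""
    return math.degrees(math.acos(max(-1.0, min(1.0, p[2]))))
```

For a pixel halfway between the center and the edge of the circle, the orthographic lift places the ray 30 degrees from the optical axis, while the equidistant lift places it at 45 degrees. The two agree at the center and at the edge of the circle but differ in between, so the choice changes the geometry of the resulting virtual view.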