How do I collect all the data from a Node.js stream into a string?

(This answer is from years ago, when it was the best answer. There is now a better answer below this. I haven't kept up with node.js, and I cannot delete this answer because it is marked "correct on this question". If you are thinking of downvoting, what do you want me to do?)

The key is to use the data and end events of a Readable Stream. Listen to these events:

    stream.on('data', (chunk) => { /* ... */ });
    stream.on('end', () => { /* ... */ });

When you receive the data event, add the new chunk of data to the chunks collected so far (for example, push it onto an array of Buffers).

When you receive the end event, convert the completed Buffer into a string, if necessary. Then do what you need to do with it.
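Put together, the event-based approach might look like the following minimal sketch. The helper name `collectToString` and its error-first callback are illustrative assumptions, not a Node.js API. Chunks are kept in an array and concatenated once at `'end'`, since a `Buffer` cannot grow in place; `Readable.from` only supplies a demo stream.

```javascript
const { Readable } = require('node:stream');

// Hypothetical helper: gather every chunk of a Readable, then decode once.
function collectToString(stream, callback) {
  const chunks = [];
  stream.on('data', (chunk) => {
    // Normalize string chunks (e.g. from Readable.from(strings)) to Buffers.
    chunks.push(Buffer.isBuffer(chunk) ? chunk : Buffer.from(chunk));
  });
  stream.on('error', (err) => callback(err));
  stream.on('end', () => {
    // Decoding only after all bytes arrived keeps multi-byte characters intact.
    callback(null, Buffer.concat(chunks).toString('utf8'));
  });
}

// Usage with a demo stream made of a string chunk and a Buffer chunk.
collectToString(Readable.from(['hello ', Buffer.from('world')]), (err, text) => {
  if (err) throw err;
  console.log(text); // hello world
});
```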

Another way would be to convert the stream to a promise (refer to the example below) and use then (or await) to assign the resolved value to a variable.

    function streamToString (stream) {
      const chunks = [];
      return new Promise((resolve, reject) => {
        stream.on('data', (chunk) => chunks.push(Buffer.from(chunk)));
        stream.on('error', (err) => reject(err));
        stream.on('end', () => resolve(Buffer.concat(chunks).toString('utf8')));
      })
    }

    // `await` is only valid inside an async function or at the top level of an ES module
    const result = await streamToString(stream)
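`await` is only valid inside an `async` function or at the top level of an ES module, which is what the `SyntaxError` in the comments below is about. In a CommonJS script, a sketch like the following wraps the call. `streamToString` is repeated from the answer so the snippet runs on its own, `main` is a hypothetical name, and `Readable.from` stands in for a real stream.

```javascript
const { Readable } = require('node:stream');

function streamToString(stream) {
  const chunks = [];
  return new Promise((resolve, reject) => {
    stream.on('data', (chunk) => chunks.push(Buffer.from(chunk)));
    stream.on('error', (err) => reject(err));
    stream.on('end', () => resolve(Buffer.concat(chunks).toString('utf8')));
  });
}

// An async function gives `await` a legal place to run in CommonJS code.
async function main() {
  try {
    const result = await streamToString(Readable.from(['some ', 'text']));
    console.log(result); // some text
  } catch (err) {
    // Rejections from the 'error' listener end up here.
    console.error('stream failed:', err);
  }
}

main();
```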

SyntaxError: await is only valid in async function. What am I doing wrong? – Toluene

streamToString(stream).then(function (response) { /* Do whatever you want with response */ }); – Parley

.toString("utf8") at the end, to avoid the problem of a decode failure if a chunk is split in the middle of a multibyte character; (2) actual error handling; (3) putting the code in a function, so it can be reused, not copy-pasted; (4) using Promises so the function can be await-ed on; (5) small code that doesn't drag in a million dependencies, unlike certain npm libraries; (6) ES6 syntax and modern best practices. – Delcine

Uncaught TypeError [ERR_INVALID_ARG_TYPE]: The "list[0]" argument must be an instance of Buffer or Uint8Array. Received type string if the stream produces string chunks instead of Buffer. Using chunks.push(Buffer.from(chunk)) should work with both string and Buffer chunks. – Deepdyed

chunks.push(Buffer.from(chunk)) gives a typescript error Argument type Buffer | string is not assignable to parameter type WithImplicitCoercion<ArrayBuffer | SharedArrayBuffer>. Is this a valid error or are the types broken? – Fantasy

reject as the callback instead of calling it)--will that run into errors? – Copacetic

readLine.createInterface({ input: stream, crlfDelay: Infinity }) – Retortion

const packagePath = execSync("rospack find my_package").toString(). – Vend

import { text } from 'node:stream/consumers'; https://mcmap.net/q/116452/-how-do-i-read-the-contents-of-a-node-js-stream-into-a-string-variable – Downtoearth

What do you think about this?

    async function streamToString(stream) {
      // `stream` must be async-iterable (Node.js Readable streams are)
      const chunks = [];
      for await (const chunk of stream) {
        chunks.push(Buffer.from(chunk));
      }
      return Buffer.concat(chunks).toString("utf-8");
    }
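One property of the `for await` version worth knowing: if the stream emits `'error'`, the loop throws, so the returned promise rejects without any extra listener. A small sketch, where `makeFailingStream` is a hypothetical stand-in built with the `Readable` constructor that sends one chunk and then fails:

```javascript
const { Readable } = require('node:stream');

async function streamToString(stream) {
  const chunks = [];
  for await (const chunk of stream) {
    chunks.push(Buffer.from(chunk));
  }
  return Buffer.concat(chunks).toString('utf-8');
}

// Hypothetical stream: emits one chunk, then destroys itself with an error.
function makeFailingStream() {
  let sent = false;
  return new Readable({
    read() {
      if (!sent) {
        sent = true;
        this.push('partial ');
      } else {
        this.destroy(new Error('connection reset'));
      }
    },
  });
}

streamToString(makeFailingStream()).catch((err) => {
  // The stream error surfaces as a rejection of streamToString's promise.
  console.error('caught:', err.message); // caught: connection reset
});
```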

chunks.push(Buffer.from(chunk)); to make it work with string chunks. – Vierno

for await is a valid ECMAScript syntax) or is itself outdated if it attempts to (unsuccessfully) execute some code containing for await. Which IDE is it? Anyway, IDEs aren't designed to actually run programs "in production", they lint them and help with analysis during development. – Backstroke

for await. Query the version of the program and find out if the version actually supports the syntax. Then find out why your IDE is using the particular "outdated" version of the program and find a way to update both. – Backstroke

push the chunk using Buffer.from, ex. chunks.push(Buffer.from(chunk)), but otherwise a nice and elegant solution! thanks! – Paule

None of the above worked for me. I needed to use the Buffer object:

    const chunks = [];
    readStream.on("data", function (chunk) {
      chunks.push(chunk);
    });

    // Send the buffer or you can put it into a var
    readStream.on("end", function () {
      res.send(Buffer.concat(chunks));
    });

Hope this is more useful than the above answer:

    var string = '';
    stream.on('data', function (data) {
      string += data.toString();
      console.log('stream data ' + data);
    });
    stream.on('end', function () {
      console.log('final output ' + string);
    });

Note that string concatenation is not the most efficient way to collect the string parts, but it is used for simplicity (and perhaps your code does not care about efficiency).

Also, this code may produce unpredictable failures for non-ASCII text (each chunk is decoded on its own, so a multi-byte character split across two chunks comes out garbled), but perhaps you do not care about that, either.
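Both caveats can be addressed without changing the event-based shape. The sketch below swaps in Node's built-in `string_decoder` module (not part of the original answer): a `StringDecoder` holds back the incomplete bytes of a multi-byte character until the next chunk arrives, and the decoded pieces go into an array that is joined once at the end. The function name `collectText` is hypothetical.

```javascript
const { StringDecoder } = require('node:string_decoder');
const { Readable } = require('node:stream');

// Hypothetical helper: decode incrementally without splitting characters.
function collectText(stream, callback) {
  const decoder = new StringDecoder('utf8');
  const parts = [];
  stream.on('data', (data) => {
    // write() returns only complete characters; leftover bytes stay buffered.
    parts.push(decoder.write(Buffer.isBuffer(data) ? data : Buffer.from(data)));
  });
  stream.on('error', callback);
  stream.on('end', () => {
    parts.push(decoder.end()); // flush any trailing bytes
    callback(null, parts.join(''));
  });
}

// '€' is 3 bytes in UTF-8; deliver it split across two chunks.
const euro = Buffer.from('€', 'utf8');
collectText(Readable.from([euro.subarray(0, 1), euro.subarray(1)]), (err, text) => {
  if (err) throw err;
  console.log(text); // €
});
```

Decoding each of those two chunks separately with `toString()` would instead yield replacement characters.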

join("") on the array at the end. – Ginger

toString('utf8') - but by default string encoding is utf8 so i suspect that your stream may not be utf8 @Magee - see #12122275 for more – Prelacy


I usually use this simple function to turn a stream into a string:

    function streamToString(stream, cb) {
      const chunks = [];
      stream.on('data', (chunk) => {
        chunks.push(chunk.toString());
      });
      stream.on('end', () => {
        cb(chunks.join(''));
      });
    }

Usage example:

    const fs = require('fs');

    let stream = fs.createReadStream('./myFile.foo');
    streamToString(stream, (data) => {
      console.log(data); // data is now my string variable
    });

chunks.push(chunk.toString()); – Icelandic

And yet another one for strings using promises:

    function getStream(stream) {
      return new Promise((resolve, reject) => {
        const chunks = [];
        // Buffer.from is required if chunk is a String, see comments
        stream.on("data", chunk => chunks.push(Buffer.from(chunk)));
        stream.on("error", reject);
        stream.on("end", () => resolve(Buffer.concat(chunks).toString()));
      });
    }

Usage:

    const fs = require('fs');

    const stream = fs.createReadStream(__filename);
    getStream(stream).then(r => console.log(r));

Remove the .toString() call to get a Buffer for binary data, if required.

Update: @AndreiLED correctly pointed out that this has problems with strings. I couldn't get a stream returning strings with the version of Node I have, but the API docs note this is possible.
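The string problem is easy to reproduce without a file: `Buffer.concat` accepts only `Buffer`/`Uint8Array` entries, so it throws as soon as a stream delivers string chunks (for example after `setEncoding`, or from `Readable.from` over strings). Mapping each chunk through `Buffer.from` normalizes both kinds. A small sketch with hard-coded chunks:

```javascript
// Chunks as a string-producing stream might deliver them.
const stringChunks = ['abc', 'def'];

let concatError = null;
try {
  Buffer.concat(stringChunks); // strings are rejected here
} catch (err) {
  concatError = err;
}
console.log(concatError && concatError.code); // ERR_INVALID_ARG_TYPE

// Normalizing each chunk first works for strings and Buffers alike.
const mixed = ['abc', Buffer.from('def')];
const joined = Buffer.concat(mixed.map((chunk) => Buffer.from(chunk))).toString();
console.log(joined); // abcdef
```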

Uncaught TypeError [ERR_INVALID_ARG_TYPE]: The "list[0]" argument must be an instance of Buffer or Uint8Array. Received type string if the stream produces string chunks instead of Buffer. Using chunks.push(Buffer.from(chunk)) should work with both string and Buffer chunks. – Deepdyed

It's easiest to use the text() helper from Node.js's built-in stream/consumers module:

    // ES module syntax: top-level await needs an ES module (e.g. a .mjs file)
    import { text } from 'node:stream/consumers';
    import { Readable } from 'node:stream';

    const readable = Readable.from('Hello world from consumers!');
    const string = await text(readable);
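The same module can be used from CommonJS, and it has sibling helpers: `buffer()` and `json()` are documented alongside `text()` in `node:stream/consumers` (Node.js 16.7+). Because CommonJS has no top-level `await`, this sketch wraps the calls in a hypothetical `demo` function; each helper consumes its stream, so every call gets a fresh demo stream.

```javascript
const { text, buffer, json } = require('node:stream/consumers');
const { Readable } = require('node:stream');

async function demo() {
  // text(): decode the whole stream as UTF-8.
  const str = await text(Readable.from(['Hello ', 'world']));

  // buffer(): keep the raw bytes, e.g. for binary data.
  const bytes = await buffer(Readable.from([Buffer.from([0xde, 0xad])]));

  // json(): read everything, then JSON.parse it.
  const obj = await json(Readable.from(['{"ok":', 'true}']));

  return { str, bytes, obj };
}

demo().then(({ str, bytes, obj }) => {
  console.log(str); // Hello world
  console.log(bytes.length); // 2
  console.log(obj.ok); // true
});
```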

Easy way with the popular (over 5m weekly downloads) and lightweight get-stream library:

https://www.npmjs.com/package/get-stream

    const fs = require('fs');
    const getStream = require('get-stream');

    (async () => {
      const stream = fs.createReadStream('unicorn.txt');
      console.log(await getStream(stream)); //output is string
    })();

Following the Node.js documentation, you should set the encoding explicitly. Always remember that a string without a known encoding is just a bunch of bytes:

    const assert = require('assert');

    var readable = getReadableStreamSomehow(); // placeholder from the docs: any byte-mode Readable
    readable.setEncoding('utf8');
    readable.on('data', function(chunk) {
      assert.equal(typeof chunk, 'string');
      console.log('got %d characters of string data', chunk.length);
    });
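To see why the encoding matters, the sketch below feeds the three UTF-8 bytes of `€` to a stream as three separate chunks. With `setEncoding('utf8')` the stream holds back partial bytes and only emits complete characters. `makeByteStream` and `readAllText` are hypothetical helpers; the former stands in for `getReadableStreamSomehow()` with a byte-mode stream fed by hand, and it calls `setEncoding` before any data is pushed.

```javascript
const { Readable } = require('node:stream');

// Hypothetical stand-in for getReadableStreamSomehow(): a byte-mode stream fed by hand.
function makeByteStream(chunks, encoding) {
  const stream = new Readable({ read() {} });
  if (encoding) stream.setEncoding(encoding); // set before any data is consumed
  for (const chunk of chunks) stream.push(chunk);
  stream.push(null); // signal end of data
  return stream;
}

// Collect the emitted chunks until 'end'.
function readAllText(stream, callback) {
  let out = '';
  stream.on('data', (chunk) => {
    out += chunk; // a string when the stream has an encoding set
  });
  stream.on('error', callback);
  stream.on('end', () => callback(null, out));
}

// '€' is 3 bytes in UTF-8 (e2 82 ac); deliver them as three chunks.
const euro = Buffer.from('€', 'utf8');
const readable = makeByteStream([euro.subarray(0, 1), euro.subarray(1, 2), euro.subarray(2)], 'utf8');

readAllText(readable, (err, text) => {
  if (err) throw err;
  console.log(text); // €
});
```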

Streams don't have a simple .toString() function (which I understand) nor something like a .toStringAsync(cb) function (which I don't understand).

So I created my own helper function:

    var streamToString = function(stream, callback) {
      var str = '';
      stream.on('data', function(chunk) {
        str += chunk;
      });
      stream.on('end', function() {
        callback(str);
      });
    }

    // how to use:
    streamToString(myStream, function(myStr) {
      console.log(myStr);
    });

I had more luck doing it like this:

    let string = '';
    readstream
      .on('data', (buf) => string += buf.toString())
      .on('end', () => console.log(string));

I use Node v9.11.1, and the readstream is the response from an http.get callback.
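For the `http.get` case, the response object is itself a Readable, so the same pattern applies. A self-contained sketch, assuming a throwaway local server on an ephemeral port (`listen(0)`) stands in for the real endpoint; `fetchText` is a hypothetical name, and `setEncoding('utf8')` is used instead of per-chunk `toString()` so multi-byte characters survive chunk boundaries.

```javascript
const http = require('node:http');

// Hypothetical local server so the example needs no network access.
const server = http.createServer((req, res) => {
  res.setHeader('Content-Type', 'text/plain; charset=utf-8');
  res.end('héllo from the server');
});

function fetchText(url) {
  return new Promise((resolve, reject) => {
    http
      .get(url, (res) => {
        res.setEncoding('utf8'); // decode safely across chunk boundaries
        let body = '';
        res.on('data', (chunk) => (body += chunk));
        res.on('end', () => resolve(body));
        res.on('error', reject);
      })
      .on('error', reject); // e.g. connection refused
  });
}

server.listen(0, () => {
  const { port } = server.address();
  fetchText(`http://127.0.0.1:${port}/`)
    .then((body) => console.log(body)) // héllo from the server
    .finally(() => server.close());
});
```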

Even though this was answered 10 years ago, I consider it important to add my answer, since a couple of popular answers do not take into account the official Node.js docs (https://nodejs.org/api/stream.html#readablesetencodingencoding), which say:

The Readable stream will properly handle multi-byte characters delivered through the stream that would otherwise become improperly decoded if simply pulled from the stream as Buffer objects.

That's why I have modified the two most popular answers to show the best way to handle the encoding:

    function streamToString(stream) {
      stream.setEncoding('utf-8'); // do this instead of directly converting the string
      const chunks = [];
      return new Promise((resolve, reject) => {
        stream.on('data', (chunk) => chunks.push(chunk));
        stream.on('error', (err) => reject(err));
        stream.on('end', () => resolve(chunks.join("")));
      })
    }

    // `await` needs an async function or an ES module with top-level await
    const result = await streamToString(stream)

or:

    async function streamToString(stream) {
      stream.setEncoding('utf-8'); // do this instead of directly converting the string
      // input must be stream with readable property
      const chunks = [];
      for await (const chunk of stream) {
        chunks.push(chunk);
      }
      return chunks.join("");
    }

What about something like a stream reducer?

Here is an example, using ES6 classes, of how to use one.

    var stream = require('stream')

    class StreamReducer extends stream.Writable {
      constructor(chunkReducer, initialvalue, cb) {
        super();
        this.reducer = chunkReducer;
        this.accumulator = initialvalue;
        this.cb = cb;
      }

      _write(chunk, enc, next) {
        this.accumulator = this.reducer(this.accumulator, chunk);
        next();
      }

      // _final runs once all writes are processed; overriding end() would bypass the Writable machinery
      _final(done) {
        this.cb(null, this.accumulator);
        done();
      }
    }

    // just a test stream
    class EmitterStream extends stream.Readable {
      constructor(chunks) {
        super();
        this.chunks = chunks;
      }

      _read() {
        this.chunks.forEach(function (chunk) {
          this.push(chunk);
        }.bind(this));
        this.push(null);
      }
    }

    // just transform the strings into buffer as we would get from fs stream or http request stream
    (new EmitterStream(
      ["hello ", "world !"]
        .map(function (str) {
          return Buffer.from(str, 'utf8');
        })
    )).pipe(new StreamReducer(
      function (acc, v) {
        acc.push(v);
        return acc;
      },
      [],
      function (err, chunks) {
        console.log(Buffer.concat(chunks).toString('utf8'));
      })
    );
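The same reducer idea can also be expressed without a custom `Writable` by using async iteration. This is a swapped-in technique rather than the answer's class, and `reduceStream` is a hypothetical name: it folds every chunk into an accumulator and returns a promise of the final value.

```javascript
const { Readable } = require('node:stream');

// Hypothetical helper: fold all chunks of a stream into a single value.
async function reduceStream(stream, reducer, initialValue) {
  let accumulator = initialValue;
  for await (const chunk of stream) {
    accumulator = reducer(accumulator, chunk);
  }
  return accumulator;
}

// Same use as the StreamReducer example: gather Buffers, then decode once.
const source = Readable.from(['hello ', 'world !'].map((s) => Buffer.from(s, 'utf8')));

reduceStream(source, (acc, chunk) => {
  acc.push(chunk);
  return acc;
}, []).then((chunks) => {
  console.log(Buffer.concat(chunks).toString('utf8')); // hello world !
});
```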

The cleanest solution may be to use the "stream-string" package, which converts a stream to a string with a promise.

    const streamString = require('stream-string')

    streamString(myStream).then(string_variable => {
      // myStream was converted to a string, and that string is stored in string_variable
      console.log(string_variable)
    }).catch(err => {
      // myStream emitted an error event (err), so the promise from stream-string was rejected
      throw err
    })

All the answers listed appear to open the Readable Stream in flowing mode, which is not the default in Node.js and can have limitations, since it lacks the backpressure support that Node.js provides in paused mode. Here is an implementation using just Buffers, native streams and native stream transforms, with support for object mode:

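    import { Transform } from 'stream';

    function objectifyStream() {
      let buffer = null; // one accumulator per stream instead of a shared module-level variable

      return new Transform({
        objectMode: true,
        transform: function (chunk, encoding, next) {
          // Append the new bytes to everything received so far
          buffer = buffer ? Buffer.concat([buffer, chunk]) : Buffer.from(chunk);
          next();
        },
        flush: function (done) {
          // Emit the complete data once, after the input has ended
          done(null, buffer);
        }
      });
    }

    process.stdin.pipe(objectifyStream()).pipe(process.stdout);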

If your stream doesn't have methods like .on( and .setEncoding(, then what you have is the newer web-standards-based (fetch) ReadableStream: https://github.com/nodejs/undici/blob/c83b084879fa0bb8e0469d31ec61428ac68160d5/README.md#responsebody

You can simply do this:

    const str = await new Response(request.body).text();

(I 100% just copied this from another similar SO question: https://mcmap.net/q/86735/-retrieve-data-from-a-readablestream-object)
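If constructing a `Response` is not an option, a web `ReadableStream` can also be read by hand with its reader and a streaming `TextDecoder`. A sketch, assuming the stream yields `Uint8Array` chunks; `webStreamToString` is a hypothetical name, and the demo stream is built with `node:stream/web` only so the snippet runs on its own.

```javascript
const { ReadableStream } = require('node:stream/web');

// Hypothetical helper: drain a WHATWG ReadableStream of bytes into a string.
async function webStreamToString(webStream) {
  const reader = webStream.getReader();
  const decoder = new TextDecoder('utf-8');
  let result = '';
  try {
    for (;;) {
      const { done, value } = await reader.read();
      if (done) break;
      // stream: true keeps partial multi-byte sequences for the next call.
      result += decoder.decode(value, { stream: true });
    }
    result += decoder.decode(); // flush any remaining bytes
  } finally {
    reader.releaseLock();
  }
  return result;
}

// Demo stream that enqueues two byte chunks and closes.
const encoder = new TextEncoder();
const demo = new ReadableStream({
  start(controller) {
    controller.enqueue(encoder.encode('hello '));
    controller.enqueue(encoder.encode('web streams'));
    controller.close();
  },
});

webStreamToString(demo).then((text) => console.log(text)); // hello web streams
```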

The rest of my answer here just provides additional context on my situation, which may help catch certain keywords in google.

=====

I'm trying to convert a request.body (ReadableStream) in a SolidStart api route handler to a string: https://start.solidjs.com/core-concepts/api-routes

SolidStart takes a very isomorphic approach.

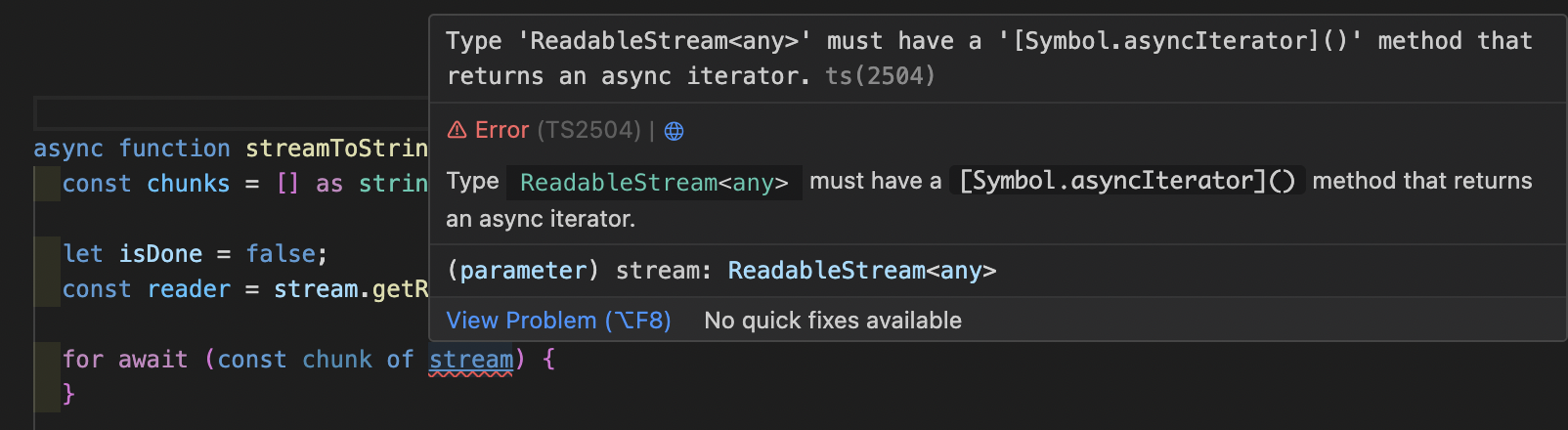

When I attempted to use the code in https://mcmap.net/q/116452/-how-do-i-read-the-contents-of-a-node-js-stream-into-a-string-variable, I got this error:

Type 'ReadableStream<any>' must have a '[Symbol.asyncIterator]()' method that returns an async iterator.ts(2504)

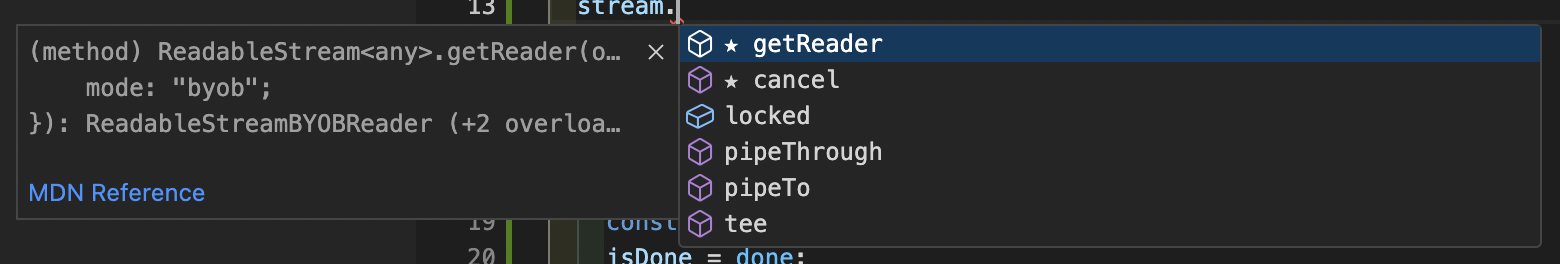

SolidStart uses undici as its isomorphic fetch implementation, and newer versions of Node have basically adopted this library for Node's own fetch implementation. I'll be the first to admit that this doesn't seem like a "node.js stream", but in some sense it is: it's just the newer ReadableStream standard that comes with Node's native fetch implementation.

For more insight, these are the methods/properties available on this request.body:

setEncoding('utf8');

Well done Sebastian J above.

I had the "buffer problem" with a few lines of test code, and adding the encoding information solved it; see below.

Demonstrate the problem

software

    // process.stdin.setEncoding('utf8');
    process.stdin.on('data', (data) => {
      console.log(typeof(data), data);
    });

input

hello world

output

object <Buffer 68 65 6c 6c 6f 20 77 6f 72 6c 64 0d 0a>

Demonstrate the solution

software

    process.stdin.setEncoding('utf8'); // <- Activate!
    process.stdin.on('data', (data) => {
      console.log(typeof(data), data);
    });

input

hello world

output

string hello world

This worked for me and is based on Node v6.7.0 docs:

    let output = '';
    stream.on('readable', function() {
      let read;
      // read() returns null once the internal buffer is drained, which is not necessarily the end of the stream
      while ((read = stream.read()) !== null) {
        // New stream data is available
        output += read.toString();
      }
    });
    // The stream is finished when 'end' fires
    stream.on('end', function() {
      // You can callback here e.g.:
      callback(null, output);
    });
    stream.on('error', function(err) {
      callback(err, null);
    });

Using the quite popular stream-buffers package which you probably already have in your project dependencies, this is pretty straightforward:

    // imports
    const { WritableStreamBuffer } = require('stream-buffers');
    const { promisify } = require('util');
    const { createReadStream } = require('fs');
    const pipeline = promisify(require('stream').pipeline);

    // sample stream
    let stream = createReadStream('/etc/hosts');

    // pipeline the stream into a buffer, and print the contents when done
    let buf = new WritableStreamBuffer();
    pipeline(stream, buf).then(() => console.log(buf.getContents().toString()));
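If you would rather not add a dependency, the same shape works with built-in modules only: `pipeline` from `node:stream/promises` (Node.js 15+) and a small `Writable` that keeps the chunks. A sketch, where `collectWritable` and its `getContents` method are hypothetical (they merely mirror the stream-buffers usage above), and `Readable.from` stands in for the `/etc/hosts` file stream.

```javascript
const { Readable, Writable } = require('node:stream');
const { pipeline } = require('node:stream/promises');

// Hypothetical sink: stores every chunk it receives.
function collectWritable() {
  const chunks = [];
  const sink = new Writable({
    write(chunk, encoding, callback) {
      chunks.push(chunk); // a Buffer, since decodeStrings defaults to true
      callback();
    },
  });
  sink.getContents = () => Buffer.concat(chunks);
  return sink;
}

const source = Readable.from(['127.0.0.1 localhost\n', '::1 localhost\n']);
const sink = collectWritable();

// pipeline() handles backpressure and rejects if either side errors.
pipeline(source, sink).then(() => console.log(sink.getContents().toString()));
```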

In my case, the Content-Type response header was text/plain, so I read the data from the Buffer like this:

    let data = [];
    stream.on('data', (chunk) => {
      console.log(Buffer.from(chunk).toString())
      data.push(Buffer.from(chunk).toString())
    });

© 2022 - 2024 — McMap. All rights reserved.