Yes, you certainly can do that.

The proto file for training defines a field called freeze_variables. It is a list of all the variables you want to freeze, i.e. exclude from training.

Suppose you want to freeze the weights from the first bottleneck in the first unit of the first block. You can do it by adding

    freeze_variables: ["resnet_v1_50/block1/unit_1/bottleneck_v1/conv1/weights"]

so that your config file looks like this:

    train_config: {
      batch_size: 1
      freeze_variables: ["resnet_v1_50/block1/unit_1/bottleneck_v1/conv1/weights"]
      ...

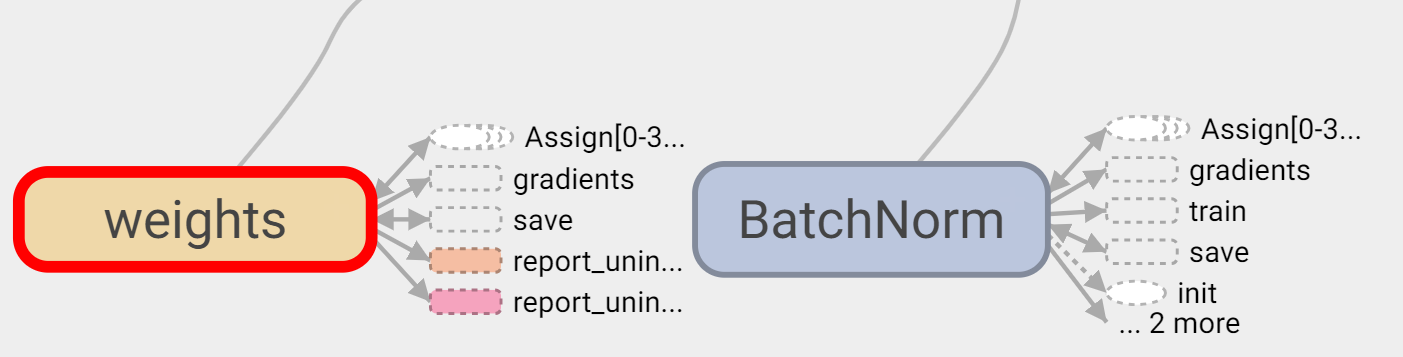

You can verify that the weights are in fact frozen by checking the TensorFlow graph.

As shown, the weights no longer have a train operation attached.

By choosing specific patterns for freeze_variables, you can freeze variables very flexibly (you can get the layer names from the TensorFlow graph).
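To get a feel for how a pattern selects variables, here is a minimal sketch in plain Python. It assumes the patterns are treated as regular expressions matched from the start of the variable name (the behaviour of Python's re.match). The helper name split_frozen is hypothetical and the second and third variable names are only illustrative. This is not the API's own code, just an approximation of the idea.

```python
import re


def split_frozen(variable_names, freeze_patterns):
    """Hypothetical helper: partition names into (frozen, trainable).

    Assumption: a variable is frozen if any pattern matches the
    beginning of its name, which is how re.match behaves.
    """
    frozen, trainable = [], []
    for name in variable_names:
        if any(re.match(pattern, name) for pattern in freeze_patterns):
            frozen.append(name)
        else:
            trainable.append(name)
    return frozen, trainable


# Illustrative variable names; read the real ones from your own graph.
names = [
    "resnet_v1_50/block1/unit_1/bottleneck_v1/conv1/weights",
    "resnet_v1_50/block1/unit_1/bottleneck_v1/conv2/weights",
    "resnet_v1_50/block2/unit_1/bottleneck_v1/conv1/weights",
]

# A prefix pattern selects everything under block1.
frozen, trainable = split_frozen(names, ["resnet_v1_50/block1/"])
print("frozen:", frozen)
print("trainable:", trainable)
```

Under that assumption, a full variable name freezes exactly one variable, while a shorter prefix such as the block name freezes everything below it.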

Btw, here is the actual filtering operation.
