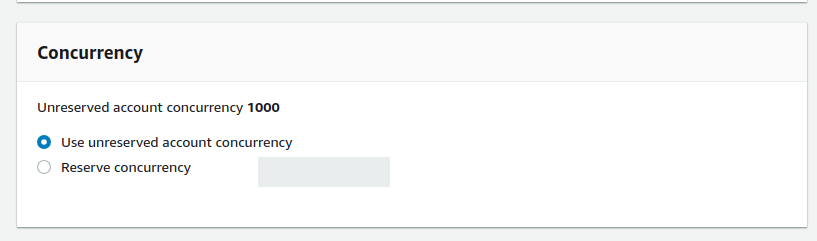

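In AWS Lambda, the concurrency limit determines how many function invocations can run simultaneously in one region. The account-level limit is shared by all functions in the region, and you can reserve part of it for an individual function through the AWS Lambda console or through the Serverless Framework.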

[Figure: AWS Lambda Concurrency]

If your account limit is 1000 and you reserve 100 concurrent executions for one function and 100 for another, the remaining functions in that region share the other 800 executions.
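The arithmetic is simple, but a small helper makes it explicit. This is a minimal sketch; the function and the function names in the example (`unreserved_pool`, `function-a`, `function-b`) are hypothetical and not part of any AWS API.

```python
def unreserved_pool(account_limit: int, reservations: dict[str, int]) -> int:
    """Return the concurrency left for functions without a reservation."""
    reserved = sum(reservations.values())
    if reserved > account_limit:
        raise ValueError("reservations exceed the account concurrency limit")
    # Every function without its own reservation shares whatever is left.
    return account_limit - reserved


# Figures from the example: a 1000 account limit and two reservations of 100 each.
pool = unreserved_pool(1000, {"function-a": 100, "function-b": 100})
print(pool)  # 800
```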

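When you reserve concurrency for a specific function, Lambda treats that number as both a guarantee and a ceiling: the function always has those executions available, but functions with reserved concurrency can't access the unreserved pool, so invocations beyond the reservation are throttled. AWS Lambda assumes you know how many executions to reserve, so choose the value carefully to avoid performance issues.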
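Because a function with reserved concurrency cannot borrow from the unreserved pool, its reservation is also its maximum. The toy model below illustrates that behaviour only; `ReservedFunction` is a hypothetical class, and real Lambda throttling (for example, retries for asynchronous or SQS-triggered invocations) is not modelled.

```python
class ReservedFunction:
    """Toy model of a function whose reserved concurrency is also its ceiling."""

    def __init__(self, reserved: int) -> None:
        self.reserved = reserved
        self.in_flight = 0
        self.throttled = 0

    def start(self) -> bool:
        # A new invocation is accepted only while a reserved slot is free;
        # it never spills over into the shared unreserved pool.
        if self.in_flight >= self.reserved:
            self.throttled += 1
            return False
        self.in_flight += 1
        return True

    def finish(self) -> None:
        # Completing an invocation frees its slot for the next one.
        self.in_flight -= 1


fn = ReservedFunction(reserved=5)
accepted = [fn.start() for _ in range(7)]
print(accepted.count(True), fn.throttled)  # 5 2
```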

The right way to set the reserved concurrency limit in the Serverless Framework is the one you shared:

```yaml
functions:
  hello:
    handler: handler.hello # required, handler set in AWS Lambda
    reservedConcurrency: 5 # optional, reserved concurrency limit for this function. By default, AWS uses account concurrency limit
```

I would suggest using SQS to manage your queue. A common architectural reason for using a queue is to limit the pressure on another part of your architecture, for example to avoid overloading a database or hitting a third-party API's rate limits when processing a large batch of messages.

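For example, suppose your SQS processing logic needs to connect to a database, and you want no more than 5 open connections to it at a time. If each invocation opens a single connection, limiting the processing function to 5 concurrent invocations caps the database at 5 open connections. With this kind of concurrency control, you can set limits that keep the database, and the rest of your architecture, from being overwhelmed.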
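As a local analogy (not how Lambda implements it), a bounded semaphore plays the role of `reservedConcurrency: 5`: no matter how many workers are available, at most 5 hold a "connection" at once. The 20 worker threads, the 50 messages, and the `time.sleep` standing in for a database query are all illustrative assumptions.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

MAX_CONNECTIONS = 5  # plays the role of reservedConcurrency: 5
connection_slots = threading.BoundedSemaphore(MAX_CONNECTIONS)
counter_lock = threading.Lock()
open_connections = 0
peak_connections = 0


def process_message(message: str) -> str:
    global open_connections, peak_connections
    with connection_slots:  # blocks while 5 "connections" are already open
        with counter_lock:
            open_connections += 1
            peak_connections = max(peak_connections, open_connections)
        time.sleep(0.01)  # stand-in for a database query
        with counter_lock:
            open_connections -= 1
    return f"processed {message}"


# Far more workers than connection slots, to show the cap holds.
with ThreadPoolExecutor(max_workers=20) as executor:
    results = list(executor.map(process_message, [f"msg-{i}" for i in range(50)]))

print(len(results), peak_connections)
```

One difference worth noting: here excess work waits for a slot, whereas Lambda throttles excess invocations, and for an SQS trigger the unprocessed messages simply stay in the queue until capacity frees up.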

In your case, you could have one function, hello, that receives your requests and puts them in an SQS queue. On the other side, a second function, compute, reads those SQS messages and processes them, with its concurrent invocations limited to 5.
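A minimal sketch of the two handlers, assuming the Python runtime (the original `handler.hello` / `handler.compute` could equally be Node.js), an API Gateway proxy-style event for `hello`, and a hypothetical `QUEUE_URL` environment variable that is not part of the original configuration. `send_message` is the boto3 SQS client call; SQS-triggered invocations deliver messages under `event["Records"]`, each with a `body` string. `handle_payload` is a hypothetical placeholder for the real work.

```python
import json
import os

# Hypothetical environment variable holding the queue URL.
QUEUE_URL = os.environ.get("QUEUE_URL", "")

_sqs = None


def _sqs_client():
    # Create the boto3 client once and reuse it across warm invocations.
    global _sqs
    if _sqs is None:
        import boto3  # bundled with the AWS Lambda Python runtime
        _sqs = boto3.client("sqs")
    return _sqs


def hello(event, context, sqs=None):
    """Receive a request and enqueue it instead of processing it inline."""
    if sqs is None:
        sqs = _sqs_client()
    body = event.get("body") or "{}"  # assumes an API Gateway proxy-style event
    sqs.send_message(QueueUrl=QUEUE_URL, MessageBody=body)
    return {"statusCode": 202, "body": json.dumps({"queued": True})}


def handle_payload(payload):
    # Hypothetical placeholder for the real work, e.g. one database write.
    return payload


def compute(event, context):
    """Process one batch of SQS messages; at most 5 of these run at once."""
    processed = [handle_payload(json.loads(record["body"])) for record in event["Records"]]
    return {"processed": len(processed)}
```

The optional `sqs` parameter only exists so the handler can be exercised with a stand-in client; Lambda itself always calls `hello(event, context)`.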

You can also set a batch size, which is the maximum number of SQS messages that can be included in a single Lambda invocation.

```yaml
functions:
  hello:
    handler: handler.hello
  compute:
    handler: handler.compute
    reservedConcurrency: 5
    events:
      - sqs:
          arn: arn:aws:sqs:region:XXXXXX:myQueue
          batchSize: 10 # how many SQS messages can be included in a single Lambda invocation
          maximumBatchingWindow: 60 # maximum amount of time in seconds to gather records before invoking the function
```
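Combining the two settings gives an upper bound: each invocation holds at most `batchSize` messages and at most `reservedConcurrency` invocations run at once, so with 10 and 5 no more than 50 messages are being processed at any moment. The sketch below is a simplified model with hypothetical helper names; Lambda may deliver fewer messages than `batchSize`, for example when the batching window expires first.

```python
def batches(messages: list[str], batch_size: int) -> list[list[str]]:
    """Split messages into batches of at most batch_size (simplified model)."""
    return [messages[i:i + batch_size] for i in range(0, len(messages), batch_size)]


def max_messages_in_flight(reserved_concurrency: int, batch_size: int) -> int:
    # Each concurrent invocation holds at most one batch.
    return reserved_concurrency * batch_size


queued = [f"msg-{i}" for i in range(25)]
print([len(b) for b in batches(queued, 10)])  # [10, 10, 5]
print(max_messages_in_flight(5, 10))  # 50
```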