I'm not very experienced with optimization, but I built a solution to this problem using a bisection algorithm like the one the question describes. My solution still has a bug, because in some cases it computes the same root twice, but I think the fix is simple and I will try it later.

EDIT: I saw jpalecek's comment, and I now understand that some of the premises I assumed are wrong, although the method still works in most cases. More specifically, a zero is guaranteed only if the two functions change sign in opposite directions, and the case of zeros at the vertices also needs to be handled. I think it is possible to build a justified and satisfactory heuristic for that, but it is a little complicated. I now consider it more promising to take the function f_abs = abs(f, g) and build a heuristic that finds its local minima by looking at the gradient direction at the midpoints of the edges.

Introduction

Consider the configuration in the question:

        ^
        |  C       D
    y2 -+  o-------o
        |  |       |
        |  |       |
        |  |       |
    y1 -+  o-------o
        |  A       B
        o--+-------+---->
           x1      x2

There are many ways to do this, but I chose to use only the corner points (A, B, C, D) and not the midpoints or the center point as the question suggests. Assume we have two functions f(x,y) and g(x,y) as you describe. Strictly speaking, this is a single function (x,y) -> (f(x,y), g(x,y)).

The steps are the following, and there is a summary (with Python code) at the end.

Step by step explanation

- Multiply the values of each scalar function (f and g) at pairs of corner points, and compute the minimum product for each function and for each direction of variation (the x and y axes).

    Fx = min(f(C)*f(B), f(D)*f(A))
    Fy = min(f(A)*f(B), f(D)*f(C))
    Gx = min(g(C)*g(B), g(D)*g(A))
    Gy = min(g(A)*g(B), g(D)*g(C))

Each expression takes the products over two pairs of corners and keeps the smaller one; a negative value means the function changes sign between two corners. (With the labels in the diagram above, A-B and D-C are the bottom and top sides, while C-B and D-A are the diagonals.) This is a bit redundant, but it works well. Alternatively, you could try another configuration, such as the points E, F, G and H shown in the question, but I think it makes sense to use the corner points because they cover the whole area of the rectangle better, although that is only an impression.
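As a minimal sketch of this step, the snippet below evaluates the products for one function on a sample rectangle. The function `f` (zero on the unit circle), the rectangle and the helper name `corner_products` are assumptions made only for illustration; the corner labels follow the diagram above.

```python
def corner_products(func, A, B, C, D):
    """Return (Fx, Fy) for one scalar function, using the pairs listed above."""
    fa, fb, fc, fd = func(*A), func(*B), func(*C), func(*D)
    Fx = min(fc * fb, fd * fa)
    Fy = min(fa * fb, fd * fc)
    return Fx, Fy


# Hypothetical example function: zero on the unit circle.
def f(x, y):
    return x**2 + y**2 - 1.0


# Rectangle [0.5, 1.5] x [-0.5, 0.5], labelled as in the diagram.
A, B = (0.5, -0.5), (1.5, -0.5)   # bottom-left, bottom-right
C, D = (0.5, 0.5), (1.5, 0.5)     # top-left, top-right

Fx, Fy = corner_products(f, A, B, C, D)
print(Fx, Fy)  # -0.75 -0.75: f changes sign between corners
```

The circle crosses this rectangle, so both values come out negative; for a rectangle lying entirely outside the circle all products would be positive.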

- Compute the minimum over the two axes for each function.

    F = min(Fx, Fy)
    G = min(Gx, Gy)

A negative value indicates that the corresponding function, f or g, has a zero within the rectangle.

- Compute the maximum of them:

    max(F, G)

If max(F, G) < 0, then both functions change sign within the rectangle and we treat it as containing a root (see the EDIT above for the cases where this assumption fails). Additionally, if f(C) = 0 and g(C) = 0, there is also a root and we handle the rectangle the same way; if the root is at another corner we ignore it, because another rectangle will pick it up (I want to avoid computing the same root twice). The statement below summarizes this:

    guaranteed_contain_zeros = max(F, G) < 0 or (f(C) == 0 and g(C) == 0)

In this case we keep splitting the region recursively until the rectangles are as small as we want.

Otherwise, a root may still exist inside the rectangle. Because of that, we need some criterion to keep splitting these regions until we reach a minimum granularity. The criterion I used is to require that the largest dimension of the current rectangle be smaller than the smallest dimension of the original rectangle (delta in the code sample below).
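The test described in these steps can be sketched as a standalone function. The example functions `f` (zero on the unit circle) and `g` (zero on the line y = x), and the helper `min_product`, are assumptions introduced for illustration and are not part of the original code. The name `guaranteed_contain_zeros` mirrors the statement above, although, as the EDIT notes, the test is a heuristic.

```python
def min_product(func, A, B, C, D):
    """Smallest of the four corner products used above for one function."""
    fa, fb, fc, fd = func(*A), func(*B), func(*C), func(*D)
    return min(fc * fb, fd * fa, fa * fb, fd * fc)


def guaranteed_contain_zeros(f, g, x_min, x_max, y_min, y_max):
    # Corners labelled as in the diagram: A, B at the bottom; C, D at the top.
    A, B = (x_min, y_min), (x_max, y_min)
    C, D = (x_min, y_max), (x_max, y_max)
    F = min_product(f, A, B, C, D)
    G = min_product(g, A, B, C, D)
    return max(F, G) < 0 or (f(*C) == 0 and g(*C) == 0)


# Hypothetical example system with a root at (sqrt(0.5), sqrt(0.5)).
def f(x, y):
    return x**2 + y**2 - 1.0


def g(x, y):
    return y - x


print(guaranteed_contain_zeros(f, g, 0.5, 1.0, 0.5, 1.0))  # True
print(guaranteed_contain_zeros(f, g, 2.0, 3.0, 2.0, 3.0))  # False
```

The first rectangle contains the root, so both functions change sign among its corners; in the second, f is positive at every corner and the rectangle is only kept if it is still larger than delta.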

Summary

This Python code summarizes the steps:

    # f(x, y) and g(x, y) are the two functions from the question, defined elsewhere.
    def balance_points(x_min, x_max, y_min, y_max, delta, eps=2e-32):
        width = x_max - x_min
        height = y_max - y_min
        x_middle = (x_min + x_max)/2
        y_middle = (y_min + y_max)/2

        # Corner points, labelled as in the diagram above.
        A, B = (x_min, y_min), (x_max, y_min)
        C, D = (x_min, y_max), (x_max, y_max)

        # A negative product means a sign change between the two corners.
        Fx = min(f(*C)*f(*B), f(*D)*f(*A))
        Fy = min(f(*A)*f(*B), f(*D)*f(*C))
        Gx = min(g(*C)*g(*B), g(*D)*g(*A))
        Gy = min(g(*A)*g(*B), g(*D)*g(*C))

        F = min(Fx, Fy)
        G = min(Gx, Gy)

        largest_dim = max(width, height)
        guaranteed_contain_zeros = max(F, G) < 0 or (f(*C) == 0 and g(*C) == 0)

        if guaranteed_contain_zeros and largest_dim <= eps:
            return [(x_middle, y_middle)]
        elif guaranteed_contain_zeros or largest_dim > delta:
            # Split the longer side in half and recurse, passing eps along.
            if width >= height:
                return (balance_points(x_min, x_middle, y_min, y_max, delta, eps)
                        + balance_points(x_middle, x_max, y_min, y_max, delta, eps))
            else:
                return (balance_points(x_min, x_max, y_min, y_middle, delta, eps)
                        + balance_points(x_min, x_max, y_middle, y_max, delta, eps))
        else:
            return []
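For experimenting with the idea, here is a self-contained variant that takes f and g as arguments and uses an explicit stack instead of recursion, so the depth of subdivision is not bound by Python's recursion limit. It is only a sketch: the name `find_balance_points`, the default `eps=1e-6`, the value of `delta` and the test system (unit circle and the line y = x) are all assumptions, not taken from the original project.

```python
import math


def find_balance_points(f, g, x_min, x_max, y_min, y_max, delta, eps=1e-6):
    """Iterative variant of balance_points; f and g are passed explicitly."""
    roots = []
    stack = [(x_min, x_max, y_min, y_max)]
    while stack:
        x0, x1, y0, y1 = stack.pop()
        width, height = x1 - x0, y1 - y0
        x_mid, y_mid = (x0 + x1) / 2, (y0 + y1) / 2

        # Corners labelled as in the diagram: A, B at the bottom; C, D at the top.
        corners = ((x0, y0), (x1, y0), (x0, y1), (x1, y1))
        fa, fb, fc, fd = (f(*p) for p in corners)
        ga, gb, gc, gd = (g(*p) for p in corners)
        F = min(fc * fb, fd * fa, fa * fb, fd * fc)
        G = min(gc * gb, gd * ga, ga * gb, gd * gc)

        largest_dim = max(width, height)
        candidate = max(F, G) < 0 or (fc == 0 and gc == 0)

        if candidate and largest_dim <= eps:
            roots.append((x_mid, y_mid))
        elif candidate or largest_dim > delta:
            # Split the longer side in half and examine both halves.
            if width >= height:
                stack.append((x0, x_mid, y0, y1))
                stack.append((x_mid, x1, y0, y1))
            else:
                stack.append((x0, x1, y0, y_mid))
                stack.append((x0, x1, y_mid, y1))
    return roots


# Hypothetical test system: unit circle and the line y = x.
def f(x, y):
    return x**2 + y**2 - 1.0


def g(x, y):
    return y - x


roots = find_balance_points(f, g, 0.0, 2.0, 0.0, 2.0, delta=0.5)
r = math.sqrt(0.5)
print(len(roots), all(math.hypot(x - r, y - r) < 1e-5 for x, y in roots))
```

The list may contain more than one point for the same root, since neighbouring small rectangles can pass the test at the same time; a final pass that merges points closer than a few eps would be one way to deal with that.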

Results

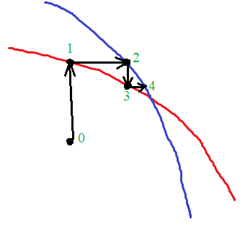

I have used similar code in a personal project (GitHub here), which draws the rectangles visited by the algorithm and the root (the system has a balance point at the origin).

It works well.

Improvements

In some cases the algorithm computes the same zero twice. I think there can be two reasons:

- The functions evaluate to exactly zero in neighbouring rectangles (because of numerical truncation). In this case the remedy is to increase eps (making the final rectangles larger). I chose eps=2e-32 because 32 bits is half of the precision (on a 64-bit architecture), so it is probable that the function does not evaluate to exactly zero... but it was more of a guess, and I don't know if it is the best choice (note that 2e-32 is 2×10^-32 rather than 2^-32). On the other hand, if we decrease eps too much, the recursion exceeds the recursion limit of the Python interpreter.

- The values f(A), f(B), etc. are close to zero and the product is truncated to zero. I think this can be reduced by using the product of the signs of f and g instead of the product of the function values.

I also think it is possible to improve the criterion for discarding a rectangle. It could take into account how much the functions vary over the rectangle and how far they are from zero, perhaps through a simple relation between the mean and the variance of the function values at the corners. Another (more complicated) option is to use a stack to store the values from each recursion level and check that these values are converging before stopping the recursion.
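To illustrate the second reason above, this short sketch shows a product of two tiny values underflowing to zero, and a sign-based comparison that still detects the sign change. The helper names `sign`, `product_changes_sign` and `signs_differ` are hypothetical and not part of the original code.

```python
def sign(x):
    """Return 1, 0 or -1 according to the sign of x."""
    return (x > 0) - (x < 0)


def product_changes_sign(a, b):
    """Sign-change test using the product of the values."""
    return a * b < 0


def signs_differ(a, b):
    """Sign-change test using the product of the signs instead."""
    return sign(a) * sign(b) < 0


a, b = 1e-200, -1e-200   # tiny values of opposite sign
print(a * b)                        # -0.0: the product underflows
print(product_changes_sign(a, b))   # False
print(signs_differ(a, b))           # True
```

Values this small are an extreme case, but the same effect appears whenever the product of two corner values falls below the smallest representable double.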

f and g are not shown in the diagram above. At each point on the map my functions f and g have a value and I am trying to find which point on the map makes them both zero at the same time. – Dourine