This works by providing facade versions of the assemblies that classic .NET libraries reference.

For example, in .NET Core, Object and Attribute are defined in System.Runtime. Compiled code always references a type together with its assembly, e.g. [System.Runtime]System.Object. Classic .NET projects, however, reference System.Object from mscorlib. Using a classic .NET assembly on .NET Core 1.0/1.1 therefore usually fails because the types cannot be found. In .NET Core 2.0, there will be "fake" types in an mscorlib that the runtime knows how to forward to where the implementation actually is.
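The effect of such a forward can be probed at run time. The following is a minimal sketch rather than anything from the dotnet/standard repository: ForwardingProbe and ResolveClassicReference are hypothetical names, and it assumes the runtime ships an mscorlib facade as described for .NET Core 2.0.

```csharp
using System;

public static class ForwardingProbe
{
    // Hypothetical helper: looks a type up the way a classic .NET binary
    // names it, i.e. qualified with the "mscorlib" assembly.
    public static Type ResolveClassicReference(string typeName)
    {
        // Returns null instead of throwing when the lookup fails.
        return Type.GetType(typeName + ", mscorlib", throwOnError: false);
    }

    public static void Main()
    {
        Type resolved = ResolveClassicReference("System.Object");

        // Was the mscorlib-qualified name resolved at all?
        Console.WriteLine("Resolved: " + (resolved != null));

        // Does it end up at the same type the current runtime uses?
        Console.WriteLine("Same as typeof(object): " + (resolved == typeof(object)));

        // Which assembly actually holds the type on this runtime.
        Console.WriteLine("Defined in: " + typeof(object).Assembly.GetName().Name);
    }
}
```

If the lookup returns null, no mscorlib forward could be resolved on that runtime. The assembly name printed on the last line depends on the runtime in use.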

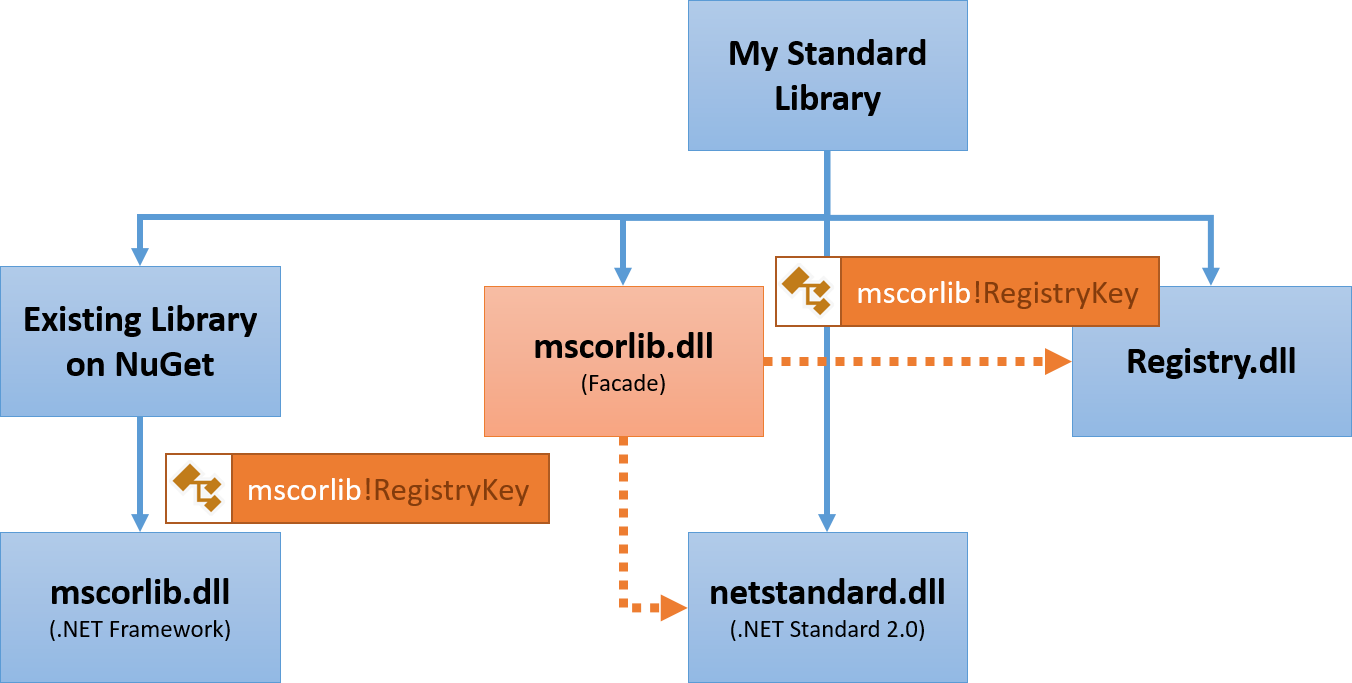

You can read more about how this assembly unification works on the dotnet/standard GitHub repo, but the most important scenario is this (image taken from that repository):

![mscorlib facade]()

This shows how the scenario is supposed to work: when a third-party DLL references [mscorlib]Microsoft.Win32.RegistryKey, there will be an mscorlib.dll that contains a type forward to [Microsoft.Win32.Registry]Microsoft.Win32.RegistryKey, so it will work when a Microsoft.Win32.Registry.dll is present.

This also shows the major downside: the registry is a Windows-only concept and is not available on Mac or Linux, so this particular code may fail to run on non-Windows platforms. But if you only use parts of the library that do not touch this functionality, it may work in cross-platform scenarios.

Another problem is that even if an API is "available" to compile against and reference, it may still throw a PlatformNotSupportedException at run time.
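One way to cope with this is to guard calls into such a library. The sketch below is only an illustration under stated assumptions: PlatformGuard, RunOrFallback and ReadSettingFromLibrary are hypothetical names, and ReadSettingFromLibrary merely stands in for a classic .NET library method that relies on a Windows-only API.

```csharp
using System;
using System.Runtime.InteropServices;

public static class PlatformGuard
{
    // Runs a call that may be unsupported on this platform and
    // returns the fallback if PlatformNotSupportedException is thrown.
    public static T RunOrFallback<T>(Func<T> platformSpecificCall, T fallback)
    {
        try
        {
            return platformSpecificCall();
        }
        catch (PlatformNotSupportedException)
        {
            return fallback;
        }
    }

    // Hypothetical stand-in for a library method backed by a Windows-only API.
    private static string ReadSettingFromLibrary(bool supported)
    {
        if (!supported)
        {
            throw new PlatformNotSupportedException();
        }
        return "value-from-windows-api";
    }

    public static void Main()
    {
        // Checking the OS up front lets you avoid the call entirely if you prefer.
        bool onWindows = RuntimeInformation.IsOSPlatform(OSPlatform.Windows);
        Console.WriteLine("Running on Windows: " + onWindows);

        string value = RunOrFallback(() => ReadSettingFromLibrary(onWindows), "default");
        Console.WriteLine("Setting: " + value);
    }
}
```

Catching the exception keeps the rest of the library usable on Mac or Linux, while the up-front OS check is useful when you would rather skip the Windows-only code path altogether.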

By contrast, a library that only implements a file format for serialisation / deserialisation might work without modification, even if it was built for .NET Framework 3.5.

To find out which APIs a particular library uses, you can scan the DLL with the .NET Portability Analyzer, which shows whether the library is compatible and, if not, which APIs are blocking.