Note: The code below works with the OpenAI Python SDK v0.28. It does not work with v1 or later (i.e., the latest versions). See the migration guide to adapt this code to v1 or later.
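For orientation, here is a minimal sketch of how the get_embedding helper used throughout this answer might look with v1 or later of the SDK. It assumes the v1 client interface, where `client.embeddings.create(...)` returns an object whose `data[0].embedding` holds the vector. The `client` parameter is my own addition so that the helper can be tried without network access. Treat it as a starting point and check the migration guide for the other calls.

```python
def get_embedding(client, model: str, text: str) -> list[float]:
    """Return the embedding vector for `text` using a v1-style client object."""
    response = client.embeddings.create(model=model, input=text)
    return response.data[0].embedding


# Usage with the real SDK (assumes `pip install "openai>=1"` and OPENAI_API_KEY set):
#   from openai import OpenAI
#   client = OpenAI()  # picks up the API key from the environment
#   vector = get_embedding(client, "text-embedding-ada-002", "This is a test")
```

Note that responses in v1 are objects with attributes, so `result['data'][0]['embedding']` from the v0.28 code becomes `response.data[0].embedding`.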

Semantic search example

The following is an example of semantic search based on embeddings using the OpenAI API.

Wrong goal: OpenAI API should answer from the fine-tuning dataset if the prompt is similar to the one from the fine-tuning dataset

It's completely wrong logic. Forget about fine-tuning. As stated in the official OpenAI documentation:

Fine-tuning lets you get more out of the models available through the API by providing:

- Higher quality results than prompt design

- Ability to train on more examples than can fit in a prompt

- Token savings due to shorter prompts

- Lower latency requests

Fine-tuning improves on few-shot learning by training on many more examples than can fit in the prompt, letting you achieve better results on a wide number of tasks.

Fine-tuning is not about answering a specific question with a specific answer from the fine-tuning dataset. In other words, a fine-tuned model doesn't know what answer it should give for a given question. It can't read your mind. You'll get an answer based on all the knowledge a fine-tuned model has, where: knowledge of a fine-tuned model = default knowledge (i.e., knowledge that the model had before the fine-tuning) + fine-tuning knowledge (i.e., knowledge that you added to the model with the fine-tuning).

Although GPT-3 models have a lot of general knowledge, sometimes we want the model to give a specific answer (i.e., a "fact") for a given specific question. If fine-tuning is not the right approach, then what is?

Correct goal: Answer with a "fact" when asked about a "fact", otherwise answer with the OpenAI API

The right approach is semantic search based on embedding vectors, which we compare against each other using cosine similarity to find a "fact" for a given specific question. See the example with a detailed description below.

Note: For better (visual) understanding, the following code was run and tested in Jupyter.

STEP 1: Create a .csv file with "facts"

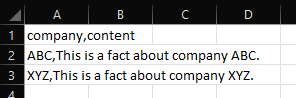

To keep things simple, let's add two companies (i.e., ABC and XYZ) with content. The content in our case will be a one-sentence description of the company.

companies.csv

![CSV]()

Run print_dataframe.ipynb to print the dataframe.

print_dataframe.ipynb

import pandas as pd

df = pd.read_csv('companies.csv')

df

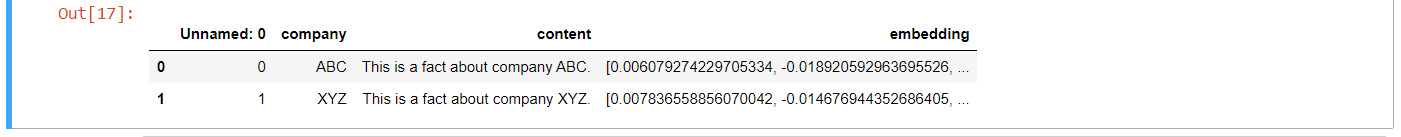

We should get the following output:

![Jupyter 1]()

STEP 2: Calculate an embedding vector for every "fact"

An embedding is a vector of numbers that helps us understand how semantically similar or different the texts are. The closer two embeddings are to each other, the more similar their contents are (source).

Let's test the Embeddings endpoint first. Run get_embedding.ipynb with the input 'This is a test'.

Note: In the case of the Embeddings endpoint, the parameter prompt is called input.

get_embedding.ipynb

import openai

import os

openai.api_key = os.getenv('OPENAI_API_KEY')

def get_embedding(model: str, text: str) -> list[float]:

    result = openai.Embedding.create(

        model = model,

        input = text

    )

    return result['data'][0]['embedding']

print(get_embedding('text-embedding-ada-002', 'This is a test'))

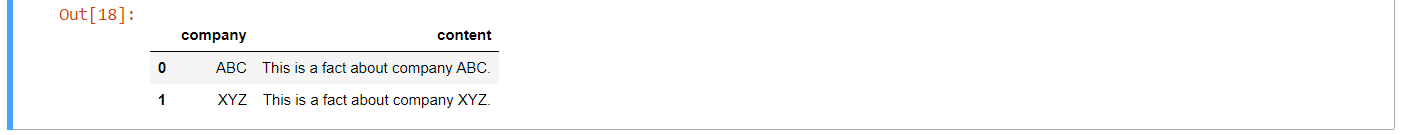

We should get the following output:

![Jupyter 2]()

The screenshot above shows the input 'This is a test' as an embedding vector. More precisely, we get a 1536-dimensional embedding vector (i.e., there are 1536 numbers inside). You are probably familiar with 3-dimensional space (i.e., X, Y, Z). Well, this is a 1536-dimensional space, which is very hard to imagine.

There are two things we need to understand at this point:

- Why do we need to transform text into an embedding vector (i.e., numbers)? Because numbers can be compared: later on, we compare embedding vectors to measure how similar two texts are. We can't do that with the raw texts directly.

- Why are there exactly 1536 numbers inside the embedding vector? Because the text-embedding-ada-002 model has an output dimension of 1536. It's pre-defined.
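To make "comparing embedding vectors" concrete, here is a small sketch of cosine similarity in plain Python. The 3-dimensional vectors are made up for illustration; real embeddings from text-embedding-ada-002 have 1536 components, but the formula is the same. The notebooks in this answer import a ready-made `cosine_similarity` from `openai.embeddings_utils` (v0.28) instead of defining their own.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy vectors (hypothetical values, not real embeddings).
same_direction = cosine_similarity([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])
orthogonal = cosine_similarity([1.0, 0.0, 0.0], [0.0, 1.0, 0.0])

print(same_direction)  # close to 1: the vectors point the same way
print(orthogonal)      # 0: the vectors have nothing in common
```

A value near 1 means the two texts are semantically close, and lower values mean they are less related. The length of the vectors does not matter, only their direction.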

Now we can create an embedding vector for each "fact". Run get_all_embeddings.ipynb.

get_all_embeddings.ipynb

import openai

from openai.embeddings_utils import get_embedding

import pandas as pd

import os

openai.api_key = os.getenv('OPENAI_API_KEY')

df = pd.read_csv('companies.csv')

df['embedding'] = df['content'].apply(lambda x: get_embedding(x, engine = 'text-embedding-ada-002'))

df.to_csv('companies_embeddings.csv')

The code above will take the first company (i.e., x), get its 'content' (i.e., "fact") and apply the function get_embedding using the text-embedding-ada-002 model. It will save the embedding vector of the first company in a new column named 'embedding'. Then it will take the second company, the third company, the fourth company, etc. At the end, the code will automatically generate a new .csv file named companies_embeddings.csv.

Saving embedding vectors locally (i.e., in a .csv file) means we don't have to call the OpenAI API every time we need them. We calculate an embedding vector for a given "fact" once, and that's it.
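The "calculate once, reuse afterwards" idea can be sketched as a small cache helper. This is only an illustration under my own assumptions: it stores the vectors in a JSON file rather than the .csv used in this answer, the two facts are placeholders, and `fake_embed` stands in for the real API call so that the sketch runs offline.

```python
import json
import os
import tempfile
from typing import Callable


def load_or_compute_embeddings(
    path: str,
    facts: list[str],
    embed: Callable[[str], list[float]],
) -> dict[str, list[float]]:
    """Return {fact: vector}, calling `embed` only for facts missing from the cache file."""
    cache: dict[str, list[float]] = {}
    if os.path.exists(path):
        with open(path, encoding="utf-8") as f:
            cache = json.load(f)
    missing = [fact for fact in facts if fact not in cache]
    for fact in missing:
        cache[fact] = embed(fact)
    if missing:
        # Only rewrite the file when something new was computed.
        with open(path, "w", encoding="utf-8") as f:
            json.dump(cache, f)
    return {fact: cache[fact] for fact in facts}


# Hypothetical stand-in for the Embeddings endpoint; it records every call.
calls: list[str] = []


def fake_embed(text: str) -> list[float]:
    calls.append(text)
    return [float(len(text)), 0.0]


cache_path = os.path.join(tempfile.mkdtemp(), "embeddings_cache.json")
facts = ["Placeholder fact about ABC.", "Placeholder fact about XYZ."]

first = load_or_compute_embeddings(cache_path, facts, fake_embed)
second = load_or_compute_embeddings(cache_path, facts, fake_embed)

print(len(calls))  # 2: the second run is served entirely from the file
```

In a real setup, `embed` would wrap the get_embedding function, and adding a third fact later would trigger exactly one additional API call.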

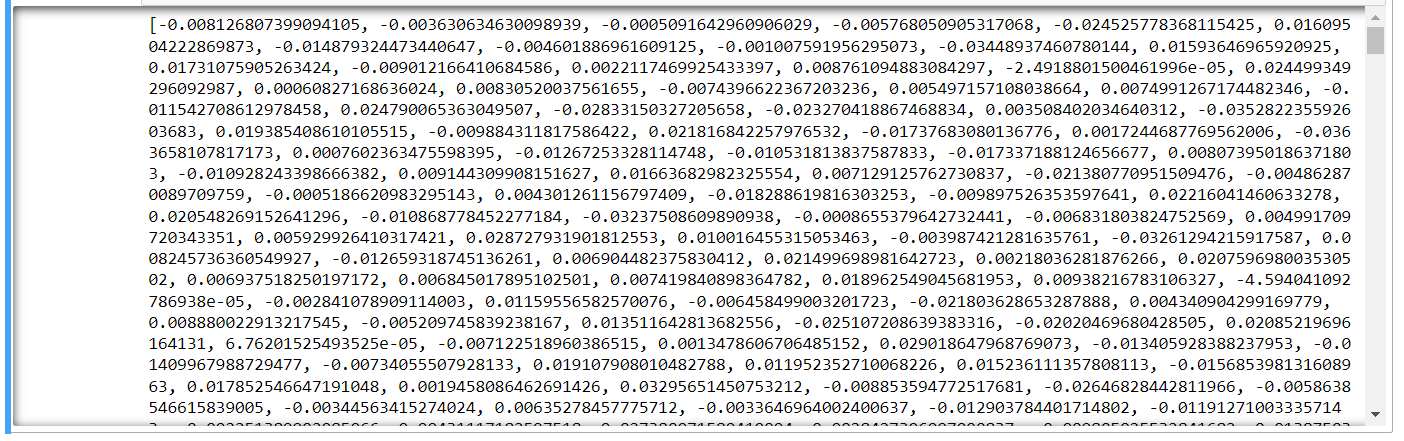

Run print_dataframe_embeddings.ipynb to print the dataframe with the new column named 'embedding'.

print_dataframe_embeddings.ipynb

import pandas as pd

import numpy as np

df = pd.read_csv('companies_embeddings.csv')

df['embedding'] = df['embedding'].apply(eval).apply(np.array)

df

We should get the following output:

![Jupyter 3]()
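A side note on `apply(eval)` in print_dataframe_embeddings.ipynb: the .csv file stores each embedding as a string such as '[0.1, -0.2, ...]', and `eval` turns that string back into a list. Since `eval` executes whatever the string contains, a more defensive option is `ast.literal_eval` from the standard library, which only accepts literals. The helper below is a sketch of that idea; the name `parse_embedding` is my own.

```python
import ast


def parse_embedding(cell: str) -> list[float]:
    """Parse a stringified list such as '[0.1, -0.2]' without executing code."""
    values = ast.literal_eval(cell)
    if not isinstance(values, list):
        raise ValueError("expected a list of numbers")
    return [float(v) for v in values]


print(parse_embedding("[0.25, -0.5, 1.0]"))  # [0.25, -0.5, 1.0]

# Anything that is not a plain literal is rejected instead of being executed.
try:
    parse_embedding("__import__('os').getcwd()")
except ValueError as error:
    print("rejected:", type(error).__name__)
```

In the notebook, this would presumably slot in as `df['embedding'].apply(ast.literal_eval).apply(np.array)`, assuming the column was written by `to_csv` as shown above.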

STEP 3: Calculate an embedding vector for the input and compare it with embedding vectors from the companies_embeddings.csv using cosine similarity

We need to calculate an embedding vector for the input so that we can compare the input with a given "fact" and see how similar these two texts are. Actually, we compare the embedding vector of the input with the embedding vector of the "fact". Then we compare the input with the second "fact", the third "fact", the fourth "fact", etc. Run get_cosine_similarity.ipynb.

get_cosine_similarity.ipynb

import openai

from openai.embeddings_utils import cosine_similarity

import pandas as pd

import numpy as np

import os

openai.api_key = os.getenv('OPENAI_API_KEY')

my_model = 'text-embedding-ada-002'

my_input = '<INSERT_INPUT_HERE>'

def get_embedding(model: str, text: str) -> list[float]:

    result = openai.Embedding.create(

        model = model,

        input = text

    )

    return result['data'][0]['embedding']

input_embedding_vector = get_embedding(my_model, my_input)

df = pd.read_csv('companies_embeddings.csv')

df['embedding'] = df['embedding'].apply(eval).apply(np.array)

df['similarity'] = df['embedding'].apply(lambda x: cosine_similarity(x, input_embedding_vector))

df

The code above will take the input and compare it with the first fact. It will save the calculated similarity of the two in a new column named 'similarity'. Then it will take the second fact, the third fact, the fourth fact, etc.

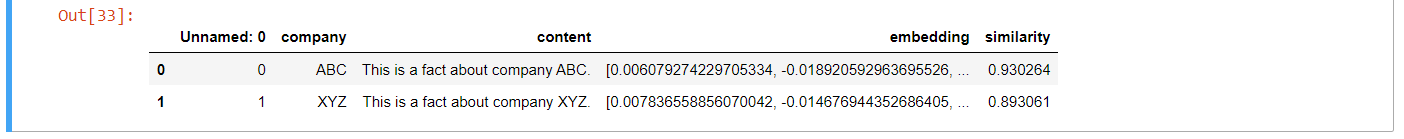

If my_input = 'Tell me something about company ABC':

![ABC]()

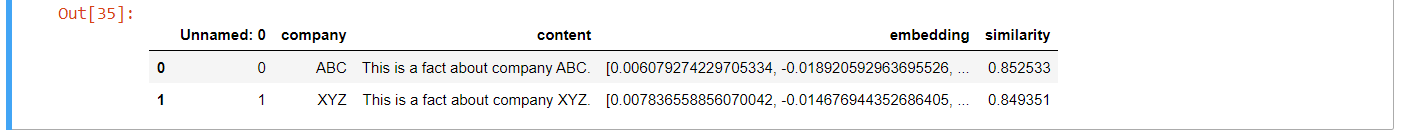

If my_input = 'Tell me something about company XYZ':

![XYZ]()

If my_input = 'Tell me something about company Apple':

![Apple]()

We can see that the input 'Tell me something about company ABC' is most similar to the first "fact", and the input 'Tell me something about company XYZ' is most similar to the second "fact". By contrast, the input 'Tell me something about company Apple' does not closely match either of the two "facts".
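The comparison in this step boils down to scoring every "fact" against the input and sorting by score. Here is a self-contained sketch of that ranking. The fact names and the 3-dimensional vectors are invented for illustration; in the notebook the vectors come from companies_embeddings.csv and from the Embeddings endpoint.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


# Hypothetical embeddings of two facts and of one input.
fact_vectors = {
    "fact about ABC": [0.9, 0.1, 0.0],
    "fact about XYZ": [0.1, 0.9, 0.0],
}
input_vector = [0.8, 0.2, 0.1]

# Score every fact against the input, then sort from most to least similar.
scores = {name: cosine_similarity(vec, input_vector) for name, vec in fact_vectors.items()}
ranking = sorted(scores.items(), key=lambda pair: pair[1], reverse=True)

for name, score in ranking:
    print(f"{name}: {score:.3f}")

best_fact = ranking[0][0]
```

With the dataframe from get_cosine_similarity.ipynb, the same ranking can be obtained with `df.sort_values('similarity', ascending=False)`.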

STEP 4: Answer with the most similar "fact" if similarity is above our threshold, otherwise answer with the OpenAI API

Let's set our similarity threshold to 0.9. The code below should answer with the most similar "fact" if its similarity is >= 0.9, otherwise answer with the OpenAI API. Run get_answer.ipynb.

get_answer.ipynb

# Imports

import openai

from openai.embeddings_utils import cosine_similarity

import pandas as pd

import numpy as np

import os

# Use your API key

openai.api_key = os.getenv('OPENAI_API_KEY')

# Insert OpenAI text embedding model and input

my_model = 'text-embedding-ada-002'

my_input = '<INSERT_INPUT_HERE>'

# Calculate embedding vector for the input using OpenAI Embeddings endpoint

def get_embedding(model: str, text: str) -> list[float]:

    result = openai.Embedding.create(

        model = model,

        input = text

    )

    return result['data'][0]['embedding']

# Save embedding vector of the input

input_embedding_vector = get_embedding(my_model, my_input)

# Calculate similarity between the input and "facts" from companies_embeddings.csv file which we created before

df = pd.read_csv('companies_embeddings.csv')

df['embedding'] = df['embedding'].apply(eval).apply(np.array)

df['similarity'] = df['embedding'].apply(lambda x: cosine_similarity(x, input_embedding_vector))

# Find the highest similarity value in the dataframe column 'similarity'

highest_similarity = df['similarity'].max()

# If the highest similarity value is equal or higher than 0.9 then print the 'content' with the highest similarity

if highest_similarity >= 0.9:

    fact_with_highest_similarity = df.loc[df['similarity'] == highest_similarity, 'content']

    print(fact_with_highest_similarity)

# Else pass input to the OpenAI Completions endpoint

else:

    response = openai.Completion.create(

        model = 'text-davinci-003',

        prompt = my_input,

        max_tokens = 30,

        temperature = 0

    )

    content = response['choices'][0]['text'].replace('\n', '')

    print(content)

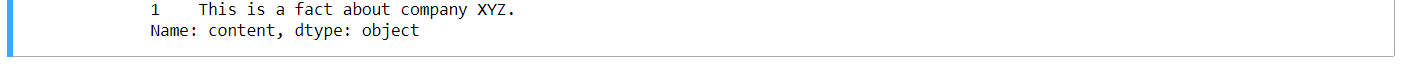

If my_input = 'Tell me something about company ABC' and the threshold is >= 0.9, we should get the following answer from the companies_embeddings.csv:

![Answer 1]()

If my_input = 'Tell me something about company XYZ' and the threshold is >= 0.9, we should get the following answer from the companies_embeddings.csv:

![Answer 2]()

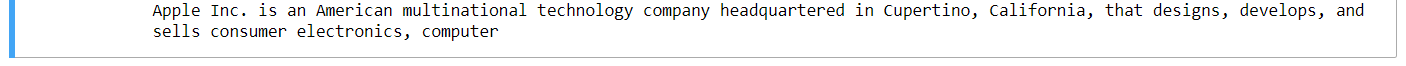

If my_input = 'Tell me something about company Apple' and the threshold is >= 0.9, we should get the following answer from the OpenAI API:

![Answer 3]()
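The decision logic of get_answer.ipynb can also be isolated into one small function, which makes the threshold behavior easy to try without calling the API. This is a sketch under my own assumptions: the `answer` function, the `fallback` parameter (standing in for the Completions call) and the similarity scores are all made up for illustration.

```python
from typing import Callable, Sequence


def answer(
    question: str,
    scored_facts: Sequence[tuple[str, float]],
    fallback: Callable[[str], str],
    threshold: float = 0.9,
) -> str:
    """Return the best-matching fact if its similarity reaches the threshold,
    otherwise return whatever the fallback produces for the question."""
    if scored_facts:
        best_fact, best_score = max(scored_facts, key=lambda pair: pair[1])
        if best_score >= threshold:
            return best_fact
    return fallback(question)


def fake_completion(question: str) -> str:
    # Hypothetical stand-in for the OpenAI Completions endpoint.
    return f"(model answer for: {question})"


# Hypothetical similarity scores for two different inputs.
close_match = answer(
    "Tell me something about company ABC",
    [("fact about ABC", 0.93), ("fact about XYZ", 0.81)],
    fake_completion,
)
no_match = answer(
    "Tell me something about company Apple",
    [("fact about ABC", 0.78), ("fact about XYZ", 0.77)],
    fake_completion,
)

print(close_match)
print(no_match)
```

The right threshold depends on your data. 0.9 worked for the two example companies here, but it is worth checking the similarity scores of a few real inputs before settling on a value.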

Additional tips & tricks

You can use Pinecone for storing embedding vectors, as stated in the official Pinecone article:

Embeddings are generated by AI models (such as Large Language Models) and have a large number of attributes or features, making their representation challenging to manage. In the context of AI and machine learning, these features represent different dimensions of the data that are essential for understanding patterns, relationships, and underlying structures.

That is why we need a specialized database designed specifically for handling this type of data. Vector databases like Pinecone fulfill this requirement by offering optimized storage and querying capabilities for embeddings. Vector databases have the capabilities of a traditional database that are absent in standalone vector indexes and the specialization of dealing with vector embeddings, which traditional scalar-based databases lack.

![Screenshot Pinecone]()