Profiling Bash (four answers, and more...)

Reading this and because profiling is an important step, I've done some test and research about this whole Stack Overflow question and already posted answers.

Here are more than four answers:

The first is based on @DennisWilliamson's idea, but with a lot less of resource consumption

The second was my own (before this;)

The third is based on @fgm's answer, but more accurate.

The last uses script, scriptreplay and timing file.

Finally, a little comparison of performance at end.

1. Using set -x and date but with limited forks

Take from @DennisWilliamson's idea, but with the following syntax, there will only one initial fork to three commands:

exec 3>&2 2> >(tee /tmp/sample-time.$$.log |

sed -u 's/^.*$/now/' |

date -f - +%s.%N >/tmp/sample-time.$$.tim)

set -x

Doing this will run date only once. There is a quick demo/test to show how it work:

for i in {1..4};do echo now;sleep .05;done| date -f - +%N

Sample script:

#!/bin/bash

exec 3>&2 2> >( tee /tmp/sample-$$.log |

sed -u 's/^.*$/now/' |

date -f - +%s.%N >/tmp/sample-$$.tim)

set -x

for ((i=3;i--;));do sleep .1;done

for ((i=2;i--;))

do

tar -cf /tmp/test.tar -C / bin

gzip /tmp/test.tar

rm /tmp/test.tar.gz

done

set +x

exec 2>&3 3>&-

By running this script, you make 2 files: /tmp/sample-XXXX.log and /tmp/sample-XXXX.tim (where XXXX is process id of running script).

You could present them by using paste:

paste tmp/sample-XXXX.{tim,log}

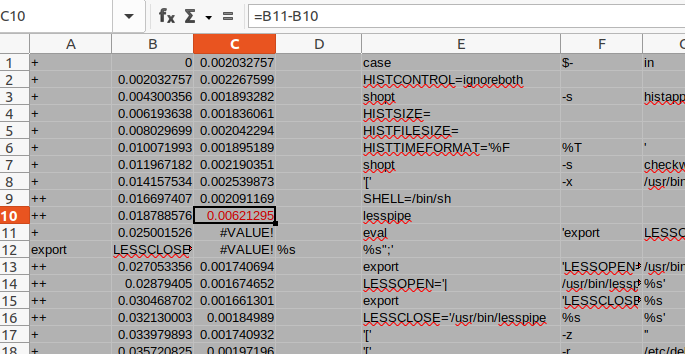

Or you may even compute diff time:

paste <(

while read tim ;do

crt=000000000$((${tim//.}-10#0$last))

printf "%12.9f\n" ${crt:0:${#crt}-9}.${crt:${#crt}-9}

last=${tim//.}

done < sample-time.24804.tim

) sample-time.24804.log

1388487534.391309713 + (( i=3 ))

0.000080807 + (( i-- ))

0.000008312 + sleep .1

0.101304843 + (( 1 ))

0.000032616 + (( i-- ))

0.000007124 + sleep .1

0.101251684 + (( 1 ))

0.000033036 + (( i-- ))

0.000007054 + sleep .1

0.104013813 + (( 1 ))

0.000026959 + (( i-- ))

0.000006915 + (( i=2 ))

0.000006635 + (( i-- ))

0.000006844 + tar -cf /tmp/test.tar -C / bin

0.022655107 + gzip /tmp/test.tar

0.637042668 + rm /tmp/test.tar.gz

0.000823649 + (( 1 ))

0.000011314 + (( i-- ))

0.000006915 + tar -cf /tmp/test.tar -C / bin

0.016084482 + gzip /tmp/test.tar

0.627798263 + rm /tmp/test.tar.gz

0.001294946 + (( 1 ))

0.000023187 + (( i-- ))

0.000006845 + set +x

or on two columns:

paste <(

while read tim ;do

[ -z "$last" ] && last=${tim//.} && first=${tim//.}

crt=000000000$((${tim//.}-10#0$last))

ctot=000000000$((${tim//.}-10#0$first))

printf "%12.9f %12.9f\n" ${crt:0:${#crt}-9}.${crt:${#crt}-9} \

${ctot:0:${#ctot}-9}.${ctot:${#ctot}-9}

last=${tim//.}

done < sample-time.24804.tim

) sample-time.24804.log

May render:

0.000000000 0.000000000 + (( i=3 ))

0.000080807 0.000080807 + (( i-- ))

0.000008312 0.000089119 + sleep .1

0.101304843 0.101393962 + (( 1 ))

0.000032616 0.101426578 + (( i-- ))

0.000007124 0.101433702 + sleep .1

0.101251684 0.202685386 + (( 1 ))

0.000033036 0.202718422 + (( i-- ))

0.000007054 0.202725476 + sleep .1

0.104013813 0.306739289 + (( 1 ))

0.000026959 0.306766248 + (( i-- ))

0.000006915 0.306773163 + (( i=2 ))

0.000006635 0.306779798 + (( i-- ))

0.000006844 0.306786642 + tar -cf /tmp/test.tar -C / bin

0.022655107 0.329441749 + gzip /tmp/test.tar

0.637042668 0.966484417 + rm /tmp/test.tar.gz

0.000823649 0.967308066 + (( 1 ))

0.000011314 0.967319380 + (( i-- ))

0.000006915 0.967326295 + tar -cf /tmp/test.tar -C / bin

0.016084482 0.983410777 + gzip /tmp/test.tar

0.627798263 1.611209040 + rm /tmp/test.tar.gz

0.001294946 1.612503986 + (( 1 ))

0.000023187 1.612527173 + (( i-- ))

0.000006845 1.612534018 + set +x

2. Using trap debug and /proc/timer_list on recent GNU/Linux kernels, without forks.

Under GNU/Linux's recent kernels, you may find a /proc file named timer_list:

grep 'now at\|offset' /proc/timer_list

now at 5461935212966259 nsecs

.offset: 0 nsecs

.offset: 1383718821564493249 nsecs

.offset: 0 nsecs

Where current time is the sum of 5461935212966259 + 1383718821564493249, but in nanoseconds.

So for computing elapsed time, there is no need of knowing offset.

For this kind of jobs, I wrote elap.bash (V2), that be sourced by the following syntax:

source elap.bash-v2

or

. elap.bash-v2 init

(See comments for the full syntax)

So you could simply add this line at top of your script:

. elap.bash-v2 trap2

A little sample:

#!/bin/bash

. elap.bash-v2 trap

for ((i=3;i--;));do sleep .1;done

elapCalc2

elapShowTotal \\e[1mfirst total\\e[0m

for ((i=2;i--;))

do

tar -cf /tmp/test.tar -C / bin

gzip /tmp/test.tar

rm /tmp/test.tar.gz

done

trap -- debug

elapTotal \\e[1mtotal time\\e[0m

Do render on my host:

0.000947481 Starting

0.000796900 ((i=3))

0.000696956 ((i--))

0.101969242 sleep .1

0.000812478 ((1))

0.000755067 ((i--))

0.103693305 sleep .1

0.000730482 ((1))

0.000660360 ((i--))

0.103565001 sleep .1

0.000719516 ((1))

0.000671325 ((i--))

0.000754856 elapCalc2

0.316018113 first total

0.000754787 elapShowTotal \e[1mfirst total\e[0m

0.000711275 ((i=2))

0.000683408 ((i--))

0.075673816 tar -cf /tmp/test.tar -C / bin

0.596389329 gzip /tmp/test.tar

0.006565188 rm /tmp/test.tar.gz

0.000830217 ((1))

0.000759466 ((i--))

0.024783966 tar -cf /tmp/test.tar -C / bin

0.604119903 gzip /tmp/test.tar

0.005172940 rm /tmp/test.tar.gz

0.000952299 ((1))

0.000827421 ((i--))

1.635788924 total time

1.636657204 EXIT

Using trap2 instead of trap as an argument to the source command:

#!/bin/bash

. elap.bash-v2 trap2

...

Will render two columns last command and total:

0.000894541 0.000894541 Starting

0.001306122 0.002200663 ((i=3))

0.001929397 0.004130060 ((i--))

0.103035812 0.107165872 sleep .1

0.000875613 0.108041485 ((1))

0.000813872 0.108855357 ((i--))

0.104954517 0.213809874 sleep .1

0.000900617 0.214710491 ((1))

0.000842159 0.215552650 ((i--))

0.104846890 0.320399540 sleep .1

0.000899082 0.321298622 ((1))

0.000811708 0.322110330 ((i--))

0.000879455 0.322989785 elapCalc2

0.322989785 first total

0.000906692 0.323896477 elapShowTotal \e[1mfirst total\e[0m

0.000820089 0.324716566 ((i=2))

0.000773782 0.325490348 ((i--))

0.024752613 0.350242961 tar -cf /tmp/test.tar -C / bin

0.596199363 0.946442324 gzip /tmp/test.tar

0.003007128 0.949449452 rm /tmp/test.tar.gz

0.000791452 0.950240904 ((1))

0.000779371 0.951020275 ((i--))

0.030519702 0.981539977 tar -cf /tmp/test.tar -C / bin

0.584155405 1.565695382 gzip /tmp/test.tar

0.003058674 1.568754056 rm /tmp/test.tar.gz

0.000955093 1.569709149 ((1))

0.000919964 1.570629113 ((i--))

1.571516599 total time

0.001723708 1.572352821 EXIT

Yes, strace could do the job:

strace -q -f -s 10 -ttt sample-script 2>sample-script-strace.log

But it could make a lot of stuff!

wc sample-script-strace.log

6925 57637 586518 sample-script-strace.log

Using a more restricted command:

strace -f -s 10 -ttt -eopen,access,read,write ./sample-script 2>sample-script-strace.log

Will dump a lighter log:

4519 36695 374453 sample-script-strace.log

Depending on what you're searching for, you may be more restrictive:

strace -f -s 10 -ttt -eaccess,open ./sample-script 2>&1 | wc

189 1451 13682

Reading them will be a little harder:

{

read -a first

first=${first//.}

last=$first

while read tim line;do

crt=000000000$((${tim//.}-last))

ctot=000000000$((${tim//.}-first))

printf "%9.6f %9.6f %s\n" ${crt:0:${#crt}-6}.${crt:${#crt}-6} \

${ctot:0:${#ctot}-6}.${ctot:${#ctot}-6} "$line"

last=${tim//.}

done

} < <(

sed </tmp/sample-script.strace -e '

s/^ *//;

s/^\[[^]]*\] *//;

/^[0-9]\{4\}/!d

')

0.000110 0.000110 open("/lib/x86_64-linux-gnu/libtinfo.so.5", O_RDONLY) = 4

0.000132 0.000242 open("/lib/x86_64-linux-gnu/libdl.so.2", O_RDONLY) = 4

0.000121 0.000363 open("/lib/x86_64-linux-gnu/libc.so.6", O_RDONLY) = 4

0.000462 0.000825 open("/dev/tty", O_RDWR|O_NONBLOCK) = 4

0.000147 0.000972 open("/usr/lib/locale/locale-archive", O_RDONLY) = 4

...

0.000793 1.551331 open("/etc/ld.so.cache", O_RDONLY) = 4

0.000127 1.551458 open("/lib/x86_64-linux-gnu/libc.so.6", O_RDONLY) = 4

0.000545 1.552003 open("/usr/lib/locale/locale-archive", O_RDONLY) = 4

0.000439 1.552442 --- SIGCHLD (Child exited) @ 0 (0) ---

The original bash script is not so easy to follow in this...

4. Using script, scriptreplay and timing file

For debugging scripts, I often use:

script -t script.log 2>script.tim -c 'bash -x /path/script.sh'

As part of BSD Utils, script (and scriptreplay) is a very old tool which can be used to profile bash, with a very small footprint.

Little demo:

script -t script.log 2>script.tim -c 'bash -x -c "

for ((i=3;i--;));do sleep .1;done

for ((i=2;i--;)) ;do

tar -cf /tmp/test.tar -C / bin

gzip /tmp/test.tar

rm /tmp/test.tar.gz

done

"'

Will produce:

Script started on Fri Mar 25 08:29:37 2016

+ (( i=3 ))

+ (( i-- ))

+ sleep .1

+ (( 1 ))

+ (( i-- ))

+ sleep .1

+ (( 1 ))

+ (( i-- ))

+ sleep .1

+ (( 1 ))

+ (( i-- ))

+ (( i=2 ))

+ (( i-- ))

+ tar -cf /tmp/test.tar -C / bin

+ gzip /tmp/test.tar

+ rm /tmp/test.tar.gz

+ (( 1 ))

+ (( i-- ))

+ tar -cf /tmp/test.tar -C / bin

+ gzip /tmp/test.tar

+ rm /tmp/test.tar.gz

+ (( 1 ))

+ (( i-- ))

Script done on Fri Mar 25 08:29:39 2016

and generate two files:

ls -l script.*

-rw-r--r-- 1 user user 450 Mar 25 08:29 script.log

-rw-r--r-- 1 user user 177 Mar 25 08:29 script.tim

File script.log contain all traces and script.tim is the timing file:

head -n 4 script.*

==> script.log <==

Script started on Fri Mar 25 08:29:37 2016

+ (( i=3 ))

+ (( i-- ))

+ sleep .1

==> script.tim <==

0.435331 11

0.000033 2

0.000024 11

0.000010 2

You could see the total time execution with the first and last lines of the logfile and/or by summarizing times in the timing file:

head -n1 script.log ;tail -n1 script.log

Script started on Fri Mar 25 08:29:37 2016

Script done on Fri Mar 25 08:29:39 2016

sed < script.tim 's/ .*$//;H;${x;s/\n/+/g;s/^\+//;p};d' | bc -l

2.249755

In the timing file, the second value is the number of the next bytes in corresponding logfile. This let you have the ability of replaying the log file optionally with an acceleration factor:

scriptreplay script.{tim,log}

or

scriptreplay script.{tim,log} 5

or

scriptreplay script.{tim,log} .2

Showing times and commands side-by-side is a little more complex too:

exec 4<script.log

read -u 4 line

echo $line ;while read tim char;do

read -u 4 -N $char -r -s line

echo $tim $line

done < script.tim &&

while read -u 4 line;do

echo $line

done;exec 4<&-

Script started on Fri Mar 25 08:28:51 2016

0.558012 + (( i=3 ))

0.000053

0.000176 + (( i-- ))

0.000015

0.000059 + sleep .1

0.000015

+ sleep .1) + (( 1 ))

+ sleep .1) + (( 1 ))

+ tar -cf /tmp/test.tar -C / bin

0.035024 + gzip /tmp/test.tar

0.793846 + rm /tmp/test.tar.gz

+ tar -cf /tmp/test.tar -C / bin

0.024971 + gzip /tmp/test.tar

0.729062 + rm /tmp/test.tar.gz

+ (( i-- )) + (( 1 ))

Script done on Fri Mar 25 08:28:53 2016

Further work around scriptReplay: How to manipulate timing and typescript files created by "script" command?

Tests and conclusion

To make tests, I've downloaded second sample at Bash complex Hello, World!. This script take approx 0.72 seconds to complete on my host.

I've add at the top of the script one of:

by elap.bash function

#!/bin/bash

source elap.bash-v2 trap2

eval "BUNCHS=(" $(perl <<EOF | gunzip

...

by set -x and PS4

#!/bin/bash

PS4='+ $(date "+%s.%N")\011 '

exec 3>&2 2>/tmp/bashstart.$$.log

set -x

eval "BUNCHS=(" $(perl <<EOF | gunzip

...

by set -x and initial fork to long exec command

#!/bin/bash

exec 3>&2 2> >(tee /tmp/sample-time.$$.log |

sed -u 's/^.*$/now/' |

date -f - +%s.%N >/tmp/sample-time.$$.tim)

set -x

eval "BUNCHS=(" $(perl <<EOF | gunzip

by script (and set +x)

script -t helloworld.log 2>helloworld.tim -c '

bash -x complex_helloworld-2.sh' >/dev/null

Times

And compare execution times (on my host):

- Direct 0.72 sec

- elap.bash 13.18 sec

- set + date@PS4 54.61 sec

- set + 1 fork 1.45 sec

- script and timing file 2.19 sec

- strace 4.47 sec

Outputs

0.000950277 0.000950277 Starting

0.007618964 0.008569241 eval "BUNCHS=(" $(perl <<EOF | gunzi

0.005259953 0.013829194 BUNCHS=("2411 1115 -13 15 33 -3 15 1

0.010945070 0.024774264 MKey="V922/G/,2:"

0.001050990 0.025825254 export RotString=""

0.004724348 0.030549602 initRotString

0.001322184 0.031871786 for bunch in "${BUNCHS[@]}"

0.000768893 0.032640679 out=""

0.001008242 0.033648921 bunchArray=($bunch)

0.000741095 0.034390016 ((k=0))

++ 1388598366.536099290 perl

++ 1388598366.536169132 gunzip

+ 1388598366.552794757 eval 'BUNCHS=(' '"2411' 1115 -13 15 33 -3 15 1

++ 1388598366.555001983 BUNCHS=("2411 1115 -13 15 33 -3 15 13111 -6 1

+ 1388598366.557551018 MKey=V922/G/,2:

+ 1388598366.558316839 export RotString=

+ 1388598366.559083848 RotString=

+ 1388598366.560165147 initRotString

+ 1388598366.560942633 local _i _char

+ 1388598366.561706988 RotString=

- by

set -x and initial fork to long exec command (and my second paste sample script)

0.000000000 0.000000000 ++ perl

0.008141159 0.008141159 ++ gunzip

0.000007822 0.008148981 + eval 'BUNCHS=(' '"2411' 1115 -13 15 33 -3

0.000006216 0.008155197 ++ BUNCHS=("2411 1115 -13 15 33 -3 15 13111

0.000006216 0.008161413 + MKey=V922/G/,2:

0.000006076 0.008167489 + export RotString=

0.000006007 0.008173496 + RotString=

0.000006006 0.008179502 + initRotString

0.000005937 0.008185439 + local _i _char

0.000006006 0.008191445 + RotString=

0.000213 0.000213 brk(0) = 0x17b6000

0.000044 0.000257 access("/etc/ld.so.nohwcap", F_OK) = -1 ENOENT (No such file or directory)

0.000047 0.000304 mmap(NULL, 8192, PROT_READ|PROT_WRITE, MAP_PRIVATE|MAP_ANONYMOUS, -1, 0) = 0x7faf1c0dc000

0.000040 0.000344 access("/etc/ld.so.preload", R_OK) = -1 ENOENT (No such file or directory)

0.000040 0.000384 open("/etc/ld.so.cache", O_RDONLY) = 4

...

0.000024 4.425049 close(10) = 0

0.000042 4.425091 rt_sigprocmask(SIG_BLOCK, NULL, [], 8) = 0

0.000028 4.425119 read(255, "", 4409) = 0

0.000058 4.425177 exit_group(0) = ?

Le script a débuté sur ven 25 mar 2016 09:18:35 CET

0.667160 ++ gunzip

0.000025

0.000948 ++ perl

0.000011

0.005338 + eval 'BUNCHS=(' '"2411' 1115 -13 15 33 -3 15 13111 -6 1 111 4

0.000044 1223 15 3311 121121 17 3311 121121 1223 3311 121121 17 3311 121

0.000175 ++ BUNCHS=("2411 1115 -13 15 33 -3 15 13111 -6 15 1114 15 12211

0.000029 1 1321 12211 412 21211 33 21211 -2 15 2311 11121 232 121111 122

0.000023 4 3311 121121 12221 3311 121121 12221 3311 121121 1313 -6 15 33

Conclusion

Well! If my pure Bash script is quicker than forking to date on each command, using this implies some operations on each command.

The way of dedicating an independent process for logging and storing is clearly more efficient.

strace is an interesting way, more detailed, but hard to read.

script, with scriptreplay and acceleration factor is very nice too, but not the same precision as this is based on a console exchange instead of process execution, but very light and efficient (not same goal, not same usage).

Finally, I think that the more efficient, in readability and performances is set + 1 fork, The first of this answer, but in fine, depending on the specific case, I use sometimes strace and/or script too.

Some more tricks

Which part of script are currently running?

As you are asking about what's currently happen in your machine (backup server or else), you could use ps -C command, but for showing currently elapsed time:

ps -C rsync ho lstart,etime

Fri Aug 11 09:57:41 2023 01:38:49

or better:

ps --sid $(ps ho sid -C rsync) fwho pid,lstart,cmd

2778 Fri Aug 11 09:08:00 2023 /bin/sh -c ps -C backup.sh >/dev/null || /path/to/backup.sh -q

2780 Fri Aug 11 09:08:00 2023 \_ /bin/bash /path/to/backup.sh -q

7688 Fri Aug 11 09:57:41 2023 \_ rsync -ax --delete --exclude '*/cache/*' --excl

This show the root cron process with his currents childs and all start times.

Which files or path are currently in progress

On linux, you could browse /proc pseudo filesystem, for showing file descriptors currently open by any process:

ls -l /proc/$(($(ps ho pid -C rsync)))/fd |

sed -ne 's|.* -> /path/to/source||p'

Of course all this have to be done with root's rights (in a root session or by using sudo).

time bash -c 'exit'andtime bash -i -c 'exit'and may play with--norcand--noprofile. – Loveecho $EPOCHREALTIME(for bash > version 5) before/after commands that seem expensive (anything to do with bash_completion, pyenv, etc). https://mcmap.net/q/65394/-linux-command-to-get-time-in-milliseconds – Infidelity